作者:鹰击长空1943 | 来源:互联网 | 2023-06-27 13:12

在我们人生的路途中,找工作是每个人都会经历的阶段,小编曾经也是苦苦求职大军中的一员。怀着对以后的规划和想象,我们在找工作的时候,会看一些招聘信息,然后从中挑选合适的岗位。不过招聘的岗位每个公司都有不少的需求,我们如何从中获取数据,来进行针对岗位方面的查找呢?

大致流程如下:

1.从代码中取出pid

2.根据pid拼接网址 => 得到 detail_url,使用requests.get,防止爬虫挂掉,一旦发现爬取的detail重复,就重新启动爬虫

3.根据detail_url获取网页html信息 => requests - > html,使用BeautifulSoup

若爬取太快,就等着解封

if html.status_code!=200 print("status_code if {}".format(html.status_code))

4.根据html得到soup => soup

5.从soup中获取特定元素内容 => 岗位信息

6.保存数据到MongoDB中

代码:

# @author: limingxuan

# @contect: limx2011@hotmail.com

# @blog: https://www.jianshu.com/p/a5907362ba72

# @time: 2018-07-21

import requests

from bs4 import BeautifulSoup

import time

from pymongo import MongoClient

headers = {

"accept": "application/json, text/Javascript, */*; q=0.01",

"accept-encoding": "gzip, deflate, br",

"accept-language": "zh-CN,zh;q=0.9,en;q=0.8",

"content-type": "application/x-www-form-urlencoded; charset=UTF-8",

"COOKIE": "JSESSIOnID=""; __c=1530137184; sid=sem_pz_bdpc_dasou_title; __g=sem_pz_bdpc_dasou_title; __l=r=https%3A%2F%2Fwww.zhipin.com%2Fgongsi%2F5189f3fadb73e42f1HN40t8~.html&l=%2Fwww.zhipin.com%2Fgongsir%2F5189f3fadb73e42f1HN40t8~.html%3Fka%3Dcompany-jobs&g=%2Fwww.zhipin.com%2F%3Fsid%3Dsem_pz_bdpc_dasou_title; Hm_lvt_194df3105ad7148dcf2b98a91b5e727a=1531150234,1531231870,1531573701,1531741316; lastCity=101010100; toUrl=https%3A%2F%2Fwww.zhipin.com%2Fjob_detail%2F%3Fquery%3Dpython%26scity%3D101010100; Hm_lpvt_194df3105ad7148dcf2b98a91b5e727a=1531743361; __a=26651524.1530136298.1530136298.1530137184.286.2.285.199",

"origin": "https://www.zhipin.com",

"referer": "https://www.zhipin.com/job_detail/?query=python&scity=101010100",

"user-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36"

}

cOnn= MongoClient("127.0.0.1",27017)

db = conn.zhipin_jobs

def init():

items = db.Python_jobs.find().sort("pid")

for item in items:

if "detial" in item.keys(): #当爬虫挂掉时,跳过已爬取的页

continue

detail_url = "https://www.zhipin.com/job_detail/{}.html".format(item["pid"]) #单引号和双引号相同,str.format()新格式化方式

#第一阶段顺利打印出岗位页面的url

print(detail_url)

#返回的html是 Response 类的结果

html = requests.get(detail_url,headers = headers)

if html.status_code != 200:

print("status_code is {}".format(html.status_code))

break

#返回值soup表示一个文档的全部内容(html.praser是html解析器)

soup = BeautifulSoup(html.text,"html.parser")

job = soup.select(".job-sec .text")

print(job)

#???

if len(job)<1:

item["detail"] = job[0].text.strip() #职位描述

location = soup.select(".job-sec .job-location .location-address")

item["location"] = location[0].text.strip() #工作地点

item["updated_at"] = time.strftime("%Y-%m-%d %H:%M:%S",time.localtime()) #实时爬取时间

#print(item["detail"])

#print(item["location"])

#print(item["updated_at"])

res = save(item) #调用保存数据结构

print(res)

time.sleep(40)#爬太快IP被封了24小时==

#保存数据到MongoDB中

def save(item):

return db.Python_jobs.update_one({"_id":item["_id"]},{"$set":item}) #why item &#63;&#63;&#63;

# 保存数据到MongoDB

if __name__ == "__main__":

init()

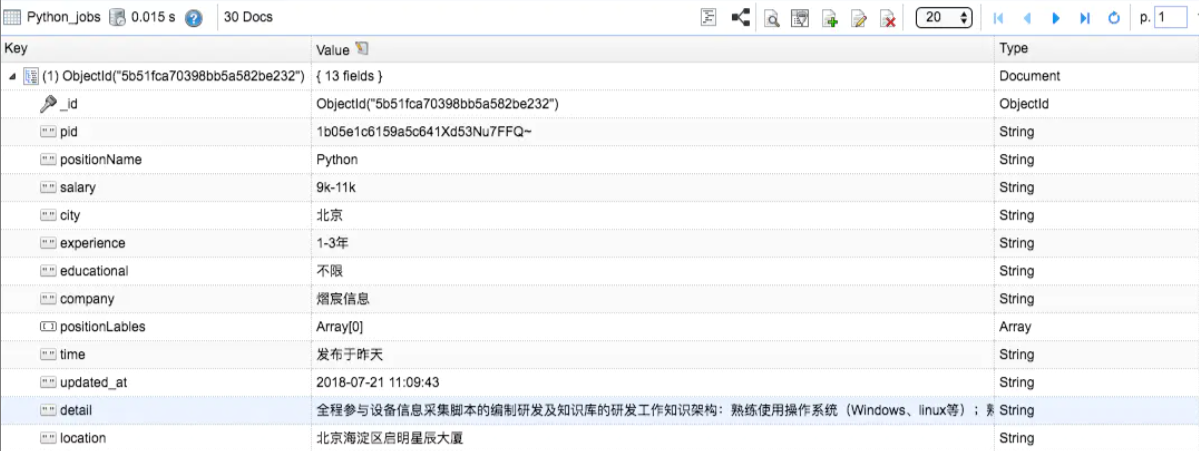

最终结果就是在MongoBooster中看到新增了detail和location的数据内容

到此这篇关于python爬取招聘要求等信息实例的文章就介绍到这了,更多相关python爬虫获取招聘要求的代码内容请搜索编程笔记以前的文章或继续浏览下面的相关文章希望大家以后多多支持编程笔记!

原文链接:https://www.py.cn/jishu/jichu/21038.html