This article shows how to install and deploy Hadoop 2.6 with JDK 8 from the binary tarball (the "JAR package"). Few people seem to know the process well, so I hope it is a useful reference.

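There are three ways to install and deploy Hadoop:

I. Automated installation and deployment

Ambari: http://ambari.apache.org/, open-sourced by Hortonworks.

Minos: https://github.com/XiaoMi/minos, open-sourced by China's Xiaomi (presumably to turn everyone's phone into a distributed cluster, haha...)

Cloudera Manager (commercial, but free when the number of nodes is very small; a smart strategy, and very easy to use)

II. Installing from RPM packages

Not provided by Apache Hadoop

Provided by HDP and CDH

III. Installing from JAR packages (binary tarballs)

Available for every version.

This is the most flexible approach, since you can switch to any version you need; the drawback is that it needs a lot of manual work and is not automated.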

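Hadoop 2.0 installation and deployment procedure

Step 1: Prepare the hardware (a Linux OS; my machine runs Fedora 21 Workstation, and CentOS works as well)

Step 2: Prepare the installation packages and install the base software (mainly the JDK; I used the latest JDK 8)

Step 3: Copy the Hadoop package to the same directory on every node and extract it

Step 4: Edit the configuration files (critical!)

Step 5: Start the services (critical!)

Step 6: Verify that everything started successfully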

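Hadoop distributions:

Apache Hadoop

The original version; all other distributions are built on it

0.23.x: unstable releases

2.x: stable releases

HDP

Hortonworks' distribution

CDH

Cloudera's Hadoop distribution

Two versions: CDH4 and CDH5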

CDH4: based on Apache Hadoop 2.0.0

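CDH5: based on Apache Hadoop 2.2.0

Compatibility across distributions

Architecture, deployment and usage are the same; they differ only in some internal implementation details.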

CDH can be installed roughly as follows (see the official site for details):

Ideal for trying Cloudera enterprise data hub, the installer will download Cloudera Manager from Cloudera's website and guide you through the setup process.

Pre-requisites: multiple, Internet-connected Linux machines, with SSH access, and significant free space in /var and /opt.

```
$ wget http://archive.cloudera.com/cm5/installer/latest/cloudera-manager-installer.bin
$ chmod u+x cloudera-manager-installer.bin
$ sudo ./cloudera-manager-installer.bin
```

Users setting up Cloudera enterprise data hub for production use are encouraged to follow the installation instructions in our documentation. These instructions suggest explicitly provisioning the databases used by Cloudera Manager and walk through explicitly which packages need installation.

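The rest of this article focuses on installing and configuring Apache Hadoop 2.6:

1. First, install the JDK. Note: use Oracle's JDK; I used JDK 8.

Do not use the OpenJDK that ships with Fedora and openSUSE; it does not even seem to include jps. (jps comes with the full JDK development package, for example java-1.8.0-openjdk-devel on Fedora, not with the runtime-only package.)

2. Download the latest Hadoop 2.6 from the Apache website and extract it: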

```
[neil@neilhost Servers]$ tar zxvf hadoop-2.6.0.tar.gz
hadoop-2.6.0/
hadoop-2.6.0/etc/
hadoop-2.6.0/etc/hadoop/
hadoop-2.6.0/etc/hadoop/hdfs-site.xml
hadoop-2.6.0/etc/hadoop/hadoop-metrics2.properties
hadoop-2.6.0/etc/hadoop/container-executor.cfg
...
...
tar: Unexpected EOF in archive
tar: Unexpected EOF in archive
tar: Error is not recoverable: exiting now
```

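The extraction ended with an error. I have heard that others hit the same thing, and it did not affect anything later for me. Still, "Unexpected EOF in archive" means the file ends before tar expects it to, which usually points to an incomplete download, so downloading it again is the safer choice.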
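A truncated download can be caught before extraction. The sketch below assumes GNU gzip and tar are available; `check_tarball` is a hypothetical helper name, not part of Hadoop.

```sh
# check_tarball (hypothetical helper): test a .tar.gz before extracting it.
# Returns 0 if the gzip stream and the tar headers both read cleanly, 1 otherwise.
check_tarball() {
    archive="$1"
    # gzip -t decompresses without writing output and verifies the trailing
    # CRC and length, so a truncated download fails here.
    if ! gzip -t "$archive" 2>/dev/null; then
        echo "$archive: gzip data is corrupt or truncated, download it again" >&2
        return 1
    fi
    # Listing walks every tar header without writing anything to disk.
    if ! tar -tzf "$archive" >/dev/null 2>&1; then
        echo "$archive: tar headers are unreadable, download it again" >&2
        return 1
    fi
    return 0
}

# Usage:
# check_tarball hadoop-2.6.0.tar.gz && tar zxvf hadoop-2.6.0.tar.gz
```

Comparing the file against the checksum published on the Apache download page is a stronger check, since it also catches a complete but wrong or tampered file.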

3. Configure /etc/hosts

Add the line `127.0.1.1 YARN001`. Using 127.0.0.1 also works.

```
127.0.0.1   localhost.localdomain localhost
::1         localhost6.localdomain6 localhost6
127.0.1.1   YARN001
```
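If the setup is scripted, the hosts entry can be added so that rerunning the script does not duplicate it. A minimal sketch assuming a standard hosts-file layout; `add_host_entry` is a hypothetical helper, and writing the real /etc/hosts requires root.

```sh
# add_host_entry (hypothetical helper): append "IP NAME" to a hosts file only
# if NAME is not already mapped there, so it is safe to run repeatedly.
add_host_entry() {
    ip="$1"; name="$2"; hosts_file="${3:-/etc/hosts}"
    # Look for NAME as a whole hostname field (fields 2..NF), skipping comments.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
        echo "$name already present in $hosts_file"
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage, as root:
# add_host_entry 127.0.1.1 YARN001
# getent hosts YARN001    # should print the 127.0.1.1 mapping
```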

4. Edit Hadoop's configuration files:

4.1 etc/hadoop/hadoop-env.sh in the extracted directory

Set JAVA_HOME to the path of your JDK.
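If you prefer to script this edit, here is a minimal sketch. It assumes GNU sed (for `sed -i`) and uses a hypothetical helper `set_java_home`. A hard-coded path is useful because daemons that the start scripts launch over ssh may not see the JAVA_HOME exported in your login shell.

```sh
# set_java_home (hypothetical helper): point hadoop-env.sh at a fixed JDK path.
set_java_home() {
    env_file="$1"; jdk_dir="$2"
    if [ ! -x "$jdk_dir/bin/java" ]; then
        echo "no executable java under $jdk_dir/bin" >&2
        return 1
    fi
    # Rewrite any existing "export JAVA_HOME=..." line, commented out or not.
    sed -i "s|^#*[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=$jdk_dir|" "$env_file"
    # If the file had no such line at all, append one.
    grep -q "^export JAVA_HOME=$jdk_dir\$" "$env_file" || echo "export JAVA_HOME=$jdk_dir" >> "$env_file"
}

# Usage: derive the JDK directory from the javac on PATH (e.g. /usr/java/jdk1.8.0_40)
# JDK=$(dirname "$(dirname "$(readlink -f "$(command -v javac)")")")
# set_java_home etc/hadoop/hadoop-env.sh "$JDK"
```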

```
# The java implementation to use.
export JAVA_HOME=/usr/java/jdk1.8.0_40/
#${JAVA_HOME}
```

4.2 Add etc/hadoop/mapred-site.xml in the extracted directory

Oops! How do you write this file? Don't worry: there is an etc/hadoop/mapred-site.xml.template, and you can simply copy its basic format.

Then add a configuration element to etc/hadoop/mapred-site.xml containing one property, as follows:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```

4.3 etc/hadoop/core-site.xml in the extracted directory

Add the following:

```xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://YARN001:8020</value>
  </property>
</configuration>
```

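Note: YARN001 in the value is the YARN001 entry added to the system /etc/hosts file earlier.

If you did not set it up, you can write hdfs://localhost:8020 or hdfs://127.0.0.1:8020, or use the machine's IP instead.

The port can be any open port. I used 8020, which is also the NameNode's default RPC port; others such as 9001 work too. fs.default.name is the older property name: Hadoop 2.x still accepts it but treats it as a deprecated alias of fs.defaultFS.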
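For scripted setups, a minimal sketch that writes core-site.xml with the host and port used above. `write_core_site` is a hypothetical helper, and it uses fs.defaultFS, the Hadoop 2.x name of the property.

```sh
# write_core_site (hypothetical helper): write core-site.xml into a conf dir.
write_core_site() {
    conf_dir="$1"; host="${2:-YARN001}"; port="${3:-8020}"
    mkdir -p "$conf_dir"
    # Unquoted EOF so that ${host} and ${port} are expanded.
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${host}:${port}</value>
  </property>
</configuration>
EOF
}

# Usage, from the hadoop-2.6.0 directory:
# write_core_site etc/hadoop YARN001 8020
```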

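4.4 etc/hadoop/hdfs-site.xml in the extracted directory

The first setting, dfs.replication, is the number of replicas. I set it to 1 because this is a single-node setup. The default is 3; with only one DataNode the extra replicas can never be placed, so HDFS keeps reporting every block as under-replicated.

The second and third settings are the NameNode and DataNode storage directories. If you leave them unset, they default to a location under the system /tmp directory (/tmp/hadoop-${user.name}). If you simulate your environment in a virtual machine, /tmp is cleared every time the VM reboots and everything stored there is lost. So I recommend setting both directories. They do not need to exist beforehand; Hadoop creates them from this configuration at runtime.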

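```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/home/neil/Servers/hadoop-2.6.0/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/neil/Servers/hadoop-2.6.0/dfs/data</value>
  </property>
</configuration>
```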
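When the NameNode is formatted, Hadoop 2.6 warns that plain paths "should be specified as a URI". A minimal sketch of the same hdfs-site.xml written with `file://` URIs, which should avoid that warning; `write_hdfs_site` is a hypothetical helper and the name/data layout mirrors the one above.

```sh
# write_hdfs_site (hypothetical helper): write hdfs-site.xml with file:// URIs.
write_hdfs_site() {
    conf_dir="$1"; data_root="$2"; replication="${3:-1}"
    # file:// plus an absolute path gives file:///..., so reject relative paths.
    case "$data_root" in
        /*) ;;
        *) echo "data root must be an absolute path: $data_root" >&2; return 1 ;;
    esac
    mkdir -p "$conf_dir"
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>${replication}</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file://${data_root}/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file://${data_root}/data</value>
  </property>
</configuration>
EOF
}

# Usage:
# write_hdfs_site etc/hadoop /home/neil/Servers/hadoop-2.6.0/dfs 1
```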

4.5 etc/hadoop/yarn-site.xml in the extracted directory

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
```

4.6 One more file that you may or may not change: etc/hadoop/slaves in the extracted directory

You can change localhost to YARN001, which was set up earlier, or to 127.0.0.1.

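5. Now for the actual startup.

There are many ways to start Hadoop, and the sbin directory contains many scripts. One of them, start-all.sh, starts everything in one go, but I don't recommend it. It is convenient, since it starts DFS, YARN and so on automatically, but some of the intermediate steps may well fail and leave the services only partially started. (In Hadoop 2.x, start-all.sh is also deprecated and simply calls start-dfs.sh and start-yarn.sh.)

The other scripts in sbin, such as start-dfs.sh and start-yarn.sh, do not fully solve this either. For example, starting DFS means starting both the NameNode and the DataNodes; if one of them fails midway, it is a hassle to sort out. So I recommend starting the daemons one by one.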
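The one-by-one approach can be wrapped in a small script that starts a daemon, confirms it in jps, and stops at the first failure. A minimal sketch assuming the layout of the extracted tarball and that jps is on PATH; `start_step_by_step` and the `START_WAIT` variable are hypothetical. It is meant to be run only after the NameNode has been formatted.

```sh
# start_step_by_step (hypothetical helper): start HDFS daemons one at a time
# with sbin/hadoop-daemon.sh and stop at the first one missing from jps.
start_step_by_step() {
    hadoop_home="$1"; shift
    for daemon in "$@"; do                 # e.g. namenode datanode
        "$hadoop_home/sbin/hadoop-daemon.sh" start "$daemon" || return 1
        sleep "${START_WAIT:-5}"           # give the JVM time to come up
        # jps prints "<pid> <MainClass>"; the class names (NameNode, DataNode)
        # differ from the daemon names only in case, so compare lowercased.
        if ! jps | awk '{print tolower($2)}' | grep -qx "$daemon"; then
            echo "$daemon did not start; see $hadoop_home/logs/*-$daemon-*.log" >&2
            return 1
        fi
    done
}

# Usage:
# start_step_by_step "$HOME/Servers/hadoop-2.6.0" namenode datanode
```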

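5.0 Before using Hadoop for the first time, format the NameNode. (As the output below notes, running this through bin/hadoop is deprecated in 2.x; bin/hdfs namenode -format is the preferred form.)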

```
[neil@neilhost hadoop-2.6.0]$ ll bin
total 440
-rwxr-xr-x. 1 neil neil 159183 Nov 14 05:20 container-executor
-rwxr-xr-x. 1 neil neil   5479 Nov 14 05:20 hadoop
-rwxr-xr-x. 1 neil neil   8298 Nov 14 05:20 hadoop.cmd
-rwxr-xr-x. 1 neil neil  11142 Nov 14 05:20 hdfs
-rwxr-xr-x. 1 neil neil   6923 Nov 14 05:20 hdfs.cmd
-rwxr-xr-x. 1 neil neil   5205 Nov 14 05:20 mapred
-rwxr-xr-x. 1 neil neil   5949 Nov 14 05:20 mapred.cmd
-rwxr-xr-x. 1 neil neil   1776 Nov 14 05:20 rcc
-rwxr-xr-x. 1 neil neil 201659 Nov 14 05:20 test-container-executor
-rwxr-xr-x. 1 neil neil  11380 Nov 14 05:20 yarn
-rwxr-xr-x. 1 neil neil  10895 Nov 14 05:20 yarn.cmd
[neil@neilhost hadoop-2.6.0]$ bin/hadoop namenode -format
DEPRECATED: Use of this script to execute hdfs command is deprecated.
Instead use the hdfs command for it.

15/04/01 20:57:32 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG:   host = neilhost.neildomain/192.168.1.101
STARTUP_MSG:   args = [-format]
STARTUP_MSG:   version = 2.6.0
STARTUP_MSG:   classpath = /home/neil/Servers/hadoop-2.6.0/etc/hadoop:/home/neil/Servers/hadoop-2.6.0/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:...(long jar list omitted)...:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar
STARTUP_MSG:   build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z
STARTUP_MSG:   java = 1.8.0_40
************************************************************/
15/04/01 20:57:32 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
15/04/01 20:57:32 INFO namenode.NameNode: createNameNode [-format]
15/04/01 20:57:32 WARN common.Util: Path /home/neil/Servers/hadoop-2.6.0/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
15/04/01 20:57:32 WARN common.Util: Path /home/neil/Servers/hadoop-2.6.0/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
Formatting using clusterid: CID-fb38ac3b-414f-4643-b62d-2e9897b5db27
15/04/01 20:57:32 INFO namenode.FSNamesystem: No KeyProvider found.
15/04/01 20:57:33 INFO namenode.FSNamesystem: fsLock is fair:true
15/04/01 20:57:33 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
15/04/01 20:57:33 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
15/04/01 20:57:33 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
15/04/01 20:57:33 INFO blockmanagement.BlockManager: The block deletion will start around 2015 Apr 01 20:57:33
15/04/01 20:57:33 INFO util.GSet: Computing capacity for map BlocksMap
15/04/01 20:57:33 INFO util.GSet: VM type       = 64-bit
15/04/01 20:57:33 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
15/04/01 20:57:33 INFO util.GSet: capacity      = 2^21 = 2097152 entries
15/04/01 20:57:33 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
15/04/01 20:57:33 INFO blockmanagement.BlockManager: defaultReplication         = 1
15/04/01 20:57:33 INFO blockmanagement.BlockManager: maxReplication             = 512
15/04/01 20:57:33 INFO blockmanagement.BlockManager: minReplication             = 1
15/04/01 20:57:33 INFO blockmanagement.BlockManager: maxReplicationStreams      = 2
15/04/01 20:57:33 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks  = false
15/04/01 20:57:33 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
15/04/01 20:57:33 INFO blockmanagement.BlockManager: encryptDataTransfer        = false
15/04/01 20:57:33 INFO blockmanagement.BlockManager: maxNumBlocksToLog          = 1000
15/04/01 20:57:33 INFO namenode.FSNamesystem: fsOwner             = neil (auth:SIMPLE)
15/04/01 20:57:33 INFO namenode.FSNamesystem: supergroup          = supergroup
15/04/01 20:57:33 INFO namenode.FSNamesystem: isPermissionEnabled = true
15/04/01 20:57:33 INFO namenode.FSNamesystem: HA Enabled: false
15/04/01 20:57:33 INFO namenode.FSNamesystem: Append Enabled: true
15/04/01 20:57:33 INFO util.GSet: Computing capacity for map INodeMap
15/04/01 20:57:33 INFO util.GSet: VM type       = 64-bit
15/04/01 20:57:33 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
15/04/01 20:57:33 INFO util.GSet: capacity      = 2^20 = 1048576 entries
15/04/01 20:57:33 INFO namenode.NameNode: Caching file names occuring more than 10 times
15/04/01 20:57:33 INFO util.GSet: Computing capacity for map cachedBlocks
15/04/01 20:57:33 INFO util.GSet: VM type       = 64-bit
15/04/01 20:57:33 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
15/04/01 20:57:33 INFO util.GSet: capacity      = 2^18 = 262144 entries
15/04/01 20:57:33 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
15/04/01 20:57:33 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
15/04/01 20:57:33 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension     = 30000
15/04/01 20:57:33 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
15/04/01 20:57:33 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
15/04/01 20:57:33 INFO util.GSet: Computing capacity for map NameNodeRetryCache
15/04/01 20:57:33 INFO util.GSet: VM type       = 64-bit
15/04/01 20:57:33 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
15/04/01 20:57:33 INFO util.GSet: capacity      = 2^15 = 32768 entries
15/04/01 20:57:33 INFO namenode.NNConf: ACLs enabled? false
15/04/01 20:57:33 INFO namenode.NNConf: XAttrs enabled? true
15/04/01 20:57:33 INFO namenode.NNConf: Maximum size of an xattr: 16384
15/04/01 20:57:33 INFO namenode.FSImage: Allocated new BlockPoolId: BP-546681589-192.168.1.101-1427893053846
15/04/01 20:57:34 INFO common.Storage: Storage directory /home/neil/Servers/hadoop-2.6.0/dfs/name has been successfully formatted.
15/04/01 20:57:34 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
15/04/01 20:57:34 INFO util.ExitUtil: Exiting with status 0
15/04/01 20:57:34 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at neilhost.neildomain/192.168.1.101
************************************************************/
[neil@neilhost hadoop-2.6.0]$
```

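Be extremely careful here: this step is only for the first deployment of a new cluster, because it wipes all data on DFS. Run it carelessly in a production environment and you will pay dearly!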
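Because formatting an existing NameNode discards its namespace, a guard that refuses to format a directory that already holds metadata is cheap insurance. A minimal sketch, assuming the name directory configured in hdfs-site.xml and that a formatted directory contains current/VERSION; `format_namenode_once` is a hypothetical helper.

```sh
# format_namenode_once (hypothetical helper): run "hdfs namenode -format" only
# if the NameNode directory does not already hold formatted metadata.
format_namenode_once() {
    hadoop_home="$1"; name_dir="$2"
    # A formatted name directory contains current/VERSION (plus fsimage files).
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "refusing to format: $name_dir already contains a formatted namespace" >&2
        return 1
    fi
    "$hadoop_home/bin/hdfs" namenode -format
}

# Usage:
# format_namenode_once "$HOME/Servers/hadoop-2.6.0" "$HOME/Servers/hadoop-2.6.0/dfs/name"
```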

You will now find a new dfs directory containing name, which is the NameNode directory configured earlier in etc/hadoop/hdfs-site.xml:

```
[neil@neilhost hadoop-2.6.0]$ ll dfs
total 4
drwxrwxr-x. 3 neil neil 4096 Apr  1 20:57 name
```

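5.1 Start the NameNode

Start the NameNode with hadoop-daemon.sh in the sbin directory.

Once it is running, use the JDK's jps command to list the JVM processes. Note: I'm on Fedora/CentOS, where installing the JDK from the RPM sets everything up. If you use Ubuntu or a tarball JDK, either type the full path to jps or set up the JDK environment variables.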

```
[neil@neilhost hadoop-2.6.0]$ sbin/hadoop-daemon.sh start namenode
starting namenode, logging to /home/neil/Servers/hadoop-2.6.0/logs/hadoop-neil-namenode-neilhost.neildomain.out
[neil@neilhost hadoop-2.6.0]$ jps
4192 Jps
4117 NameNode
```

The NameNode started successfully.

Note: if it does not start, check the NameNode .log file in the logs directory:

```
[neil@neilhost hadoop-2.6.0]$ ll logs
total 36
-rw-rw-r--. 1 neil neil 31591 Apr  1 21:19 hadoop-neil-namenode-neilhost.neildomain.log
-rw-rw-r--. 1 neil neil   715 Apr  1 21:13 hadoop-neil-namenode-neilhost.neildomain.out
-rw-rw-r--. 1 neil neil     0 Apr  1 21:13 SecurityAuth-neil.audit
```
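Startup failures are logged in the .log file, one line per event with the level after the timestamp (as in the format output earlier). A minimal sketch that pulls out the most recent ERROR/FATAL lines, assuming that layout; `recent_errors` is a hypothetical helper.

```sh
# recent_errors (hypothetical helper): print the last N ERROR/FATAL lines from
# each .log file of one daemon (e.g. namenode) in a Hadoop logs directory.
recent_errors() {
    log_dir="$1"; daemon="$2"; count="${3:-20}"
    for f in "$log_dir"/*-"$daemon"-*.log; do
        [ -f "$f" ] || continue            # glob matched nothing
        # The log level sits between spaces, e.g. "15/04/01 20:57:32 ERROR ...".
        grep -E ' (ERROR|FATAL) ' "$f" | tail -n "$count"
    done
}

# Usage, from the hadoop-2.6.0 directory:
# recent_errors logs namenode
```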

5.2 Start the DataNode.

```
[neil@neilhost hadoop-2.6.0]$ sbin/hadoop-daemon.sh start datanode
starting datanode, logging to /home/neil/Servers/hadoop-2.6.0/logs/hadoop-neil-datanode-neilhost.neildomain.out
[neil@neilhost hadoop-2.6.0]$ jps
4276 DataNode
4117 NameNode
4351 Jps
```

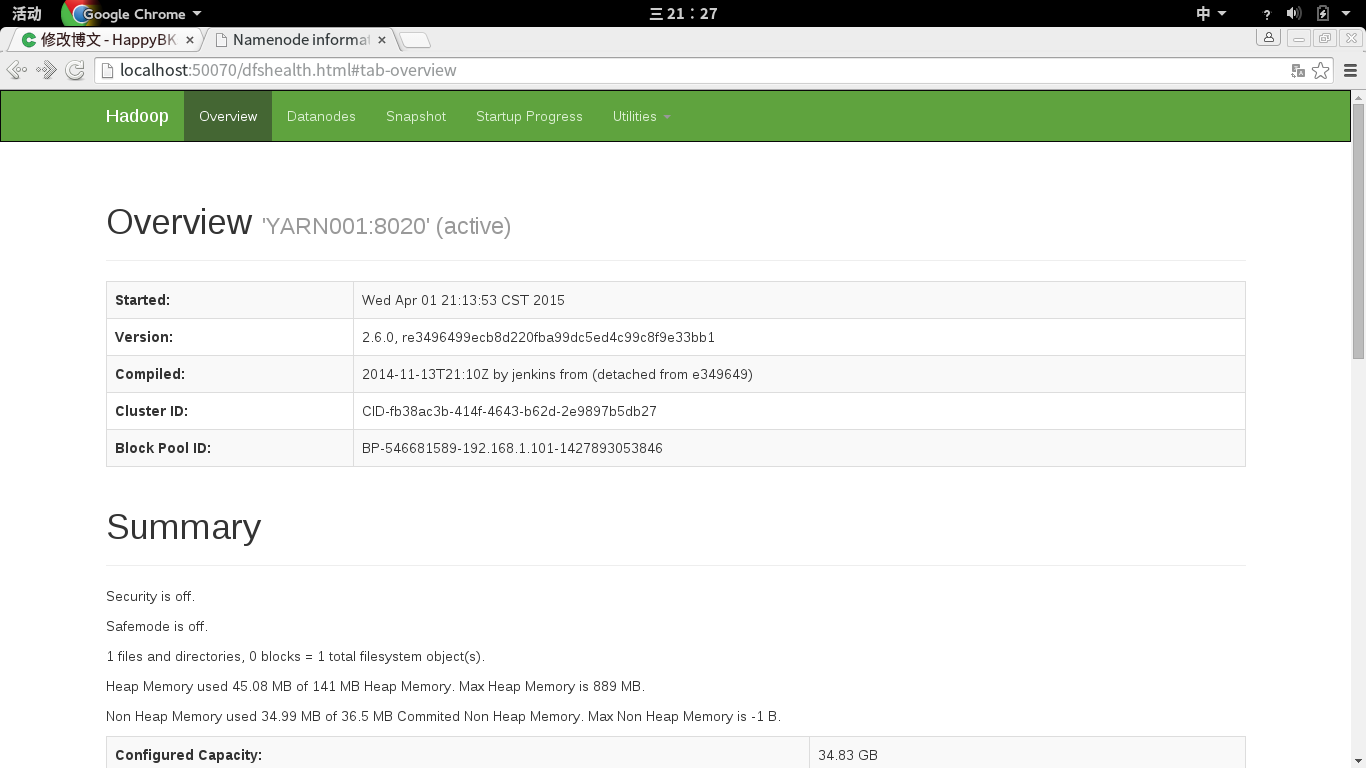

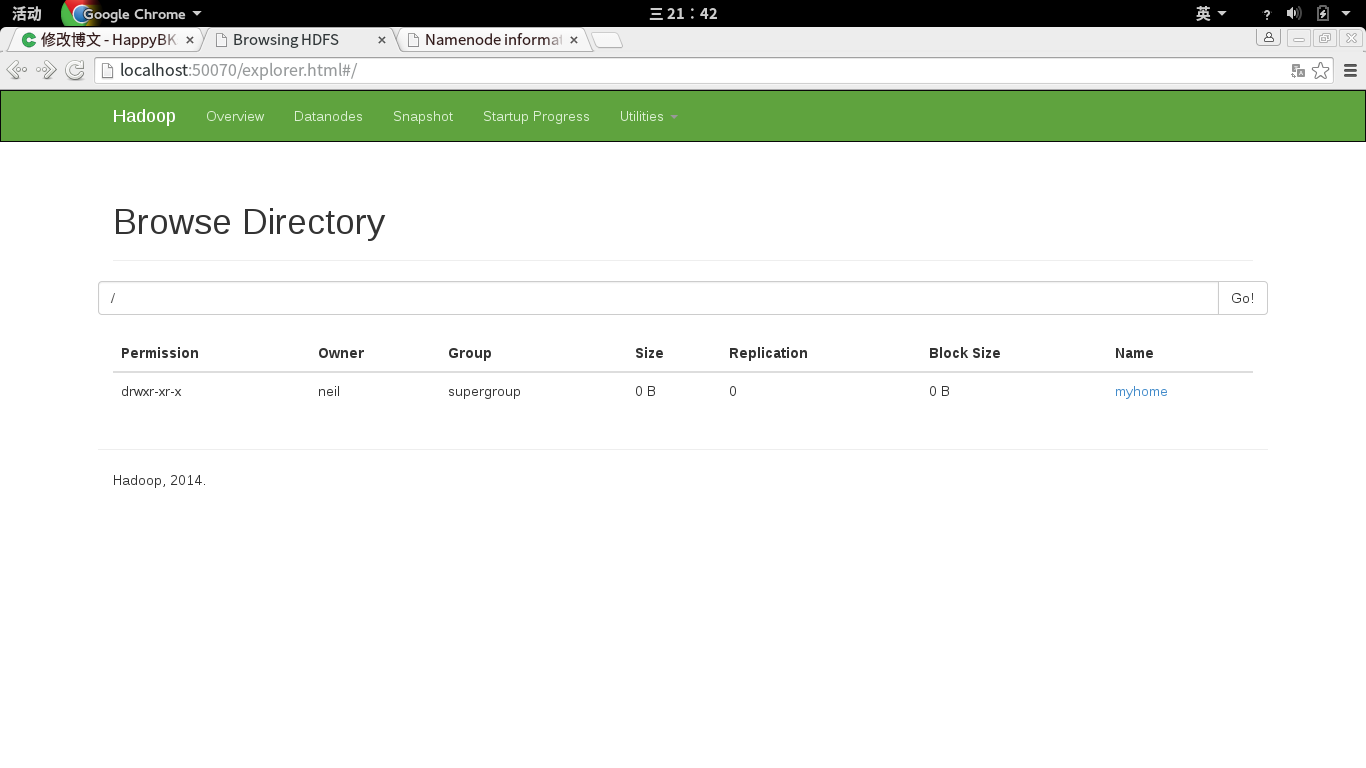

At this point we can also access DFS over HTTP; the NameNode web UI listens on port 50070 by default.

Any of the following addresses works:

http://yarn001:50070/

http://127.0.0.1:50070/

http://127.0.1.1:50070/

http://localhost:50070/
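The Live Nodes count can also be read without a browser, from the NameNode's JMX servlet. A minimal sketch, assuming curl is installed and that the FSNamesystemState bean exposes a NumLiveDataNodes field; `live_datanodes` is a hypothetical helper that only parses the JSON text.

```sh
# live_datanodes (hypothetical helper): read NameNode JMX JSON on stdin and
# print the first NumLiveDataNodes value found.
live_datanodes() {
    sed -n 's/.*"NumLiveDataNodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Usage (NameNode web port 50070, as above):
# curl -s 'http://YARN001:50070/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState' | live_datanodes
```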

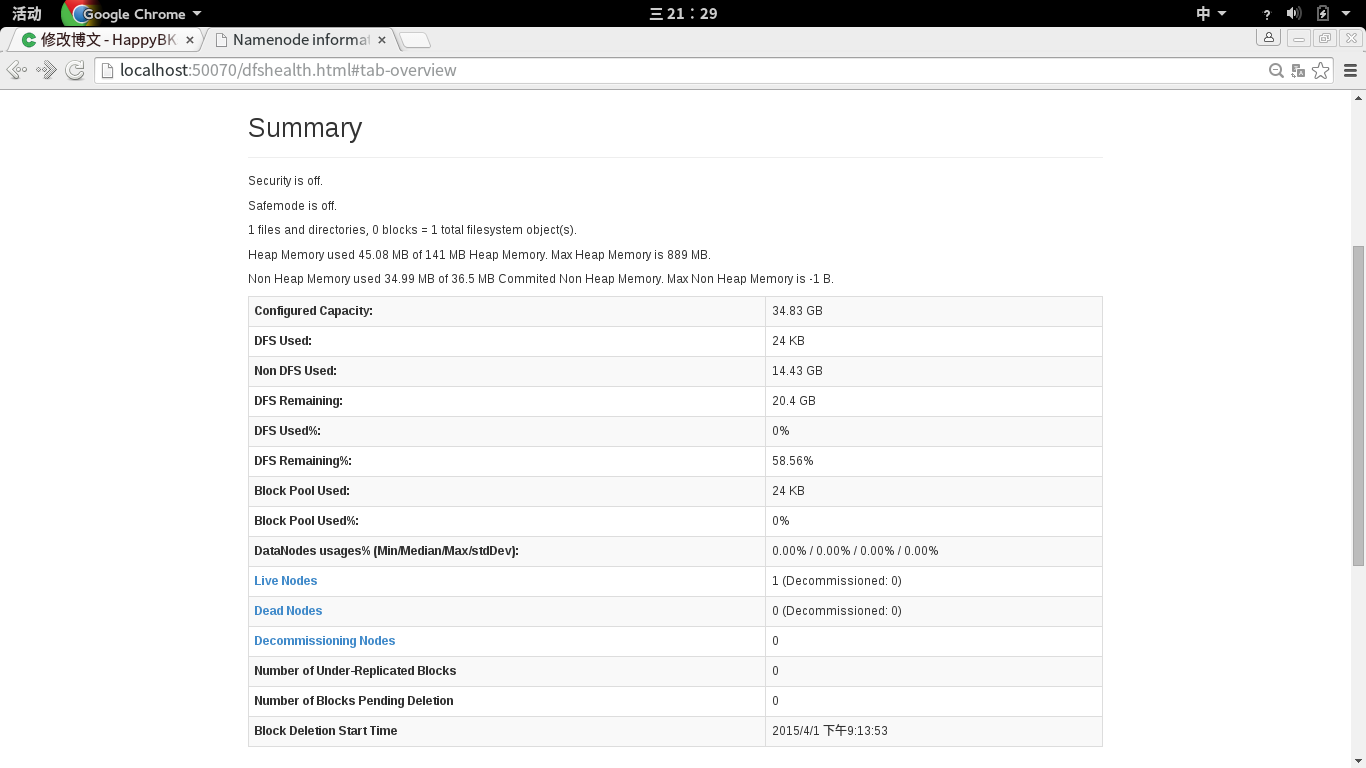

It shows an overview of the current state:

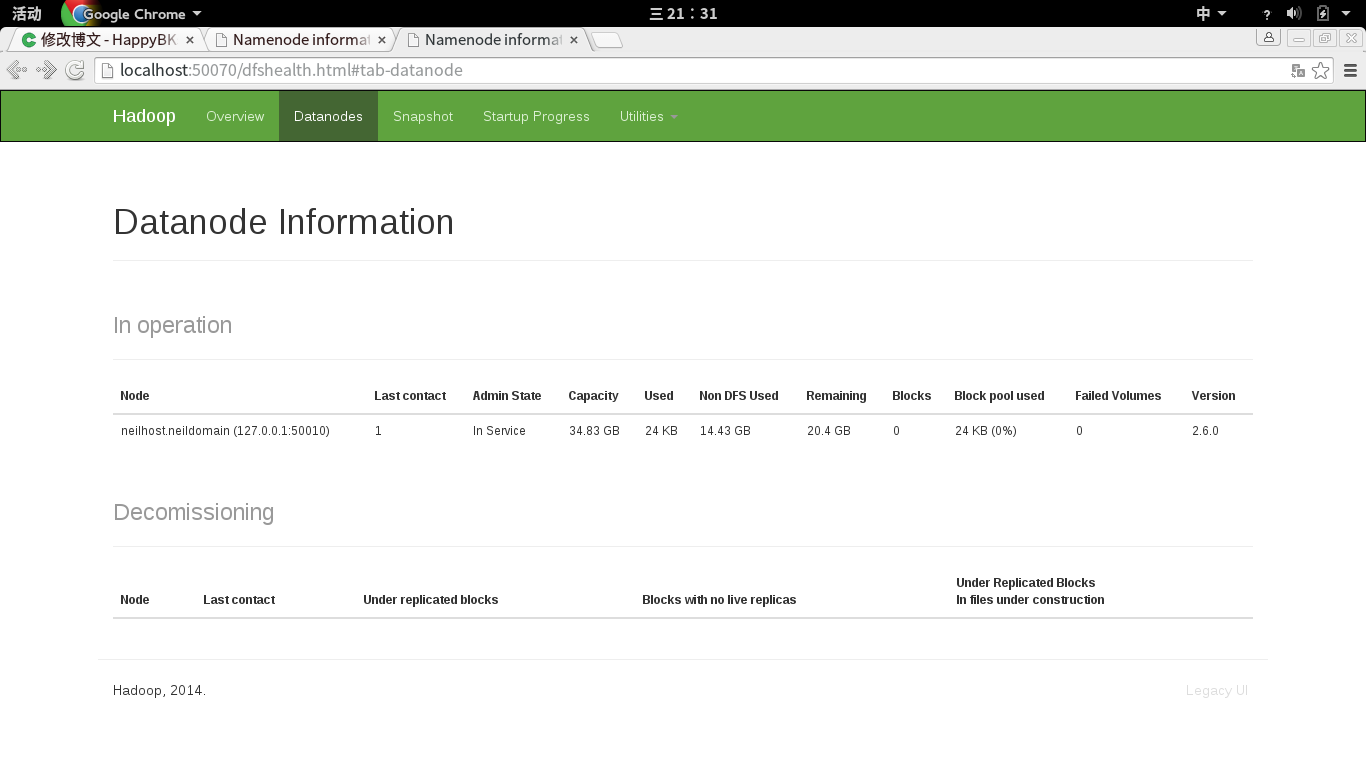

Live Nodes is 1 at this point.

You can click the Live Nodes link to see the details:

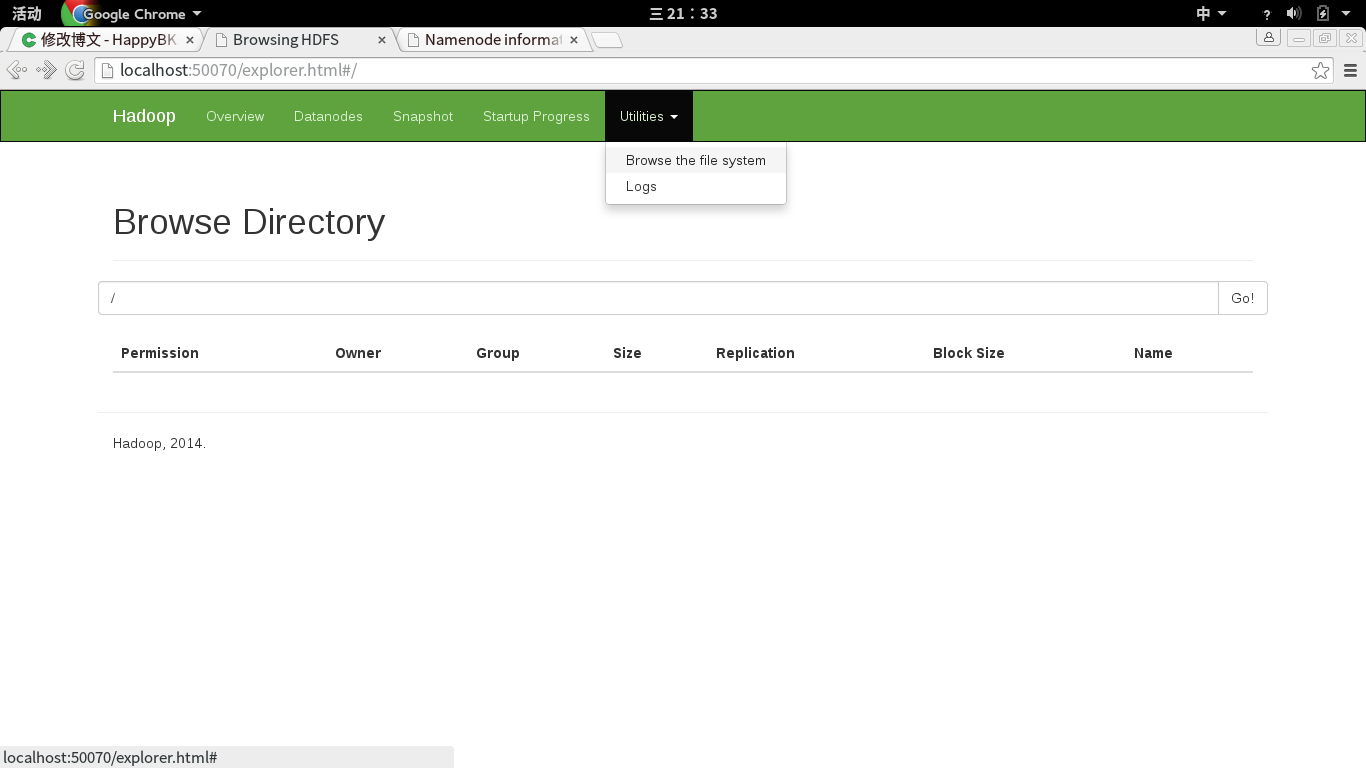

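You can also see which files are stored in HDFS:

Click Browse the file system under the Utilities menu at the top.

(This article originally appeared on the blog of oschina user HappyBKs; if you repost it, please credit the source prominently: http://my.oschina.net/u/1156339/blog/396128)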

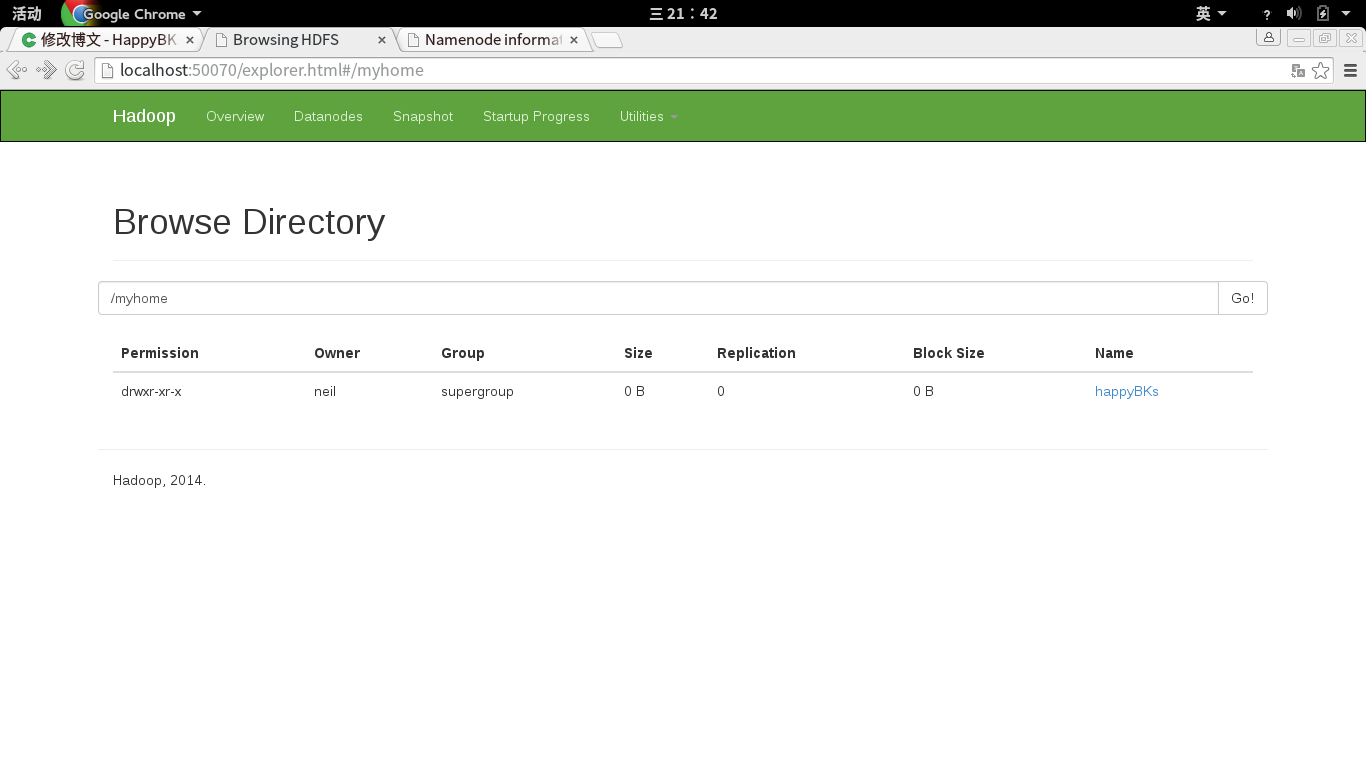

5.3 Now let's try adding directories and files to HDFS.

```
[neil@neilhost hadoop-2.6.0]$ bin/hadoop fs -mkdir /myhome
[neil@neilhost hadoop-2.6.0]$ bin/hadoop fs -mkdir /myhome/happyBKs
[neil@neilhost hadoop-2.6.0]$
```

Check Utilities > Browse the file system again.

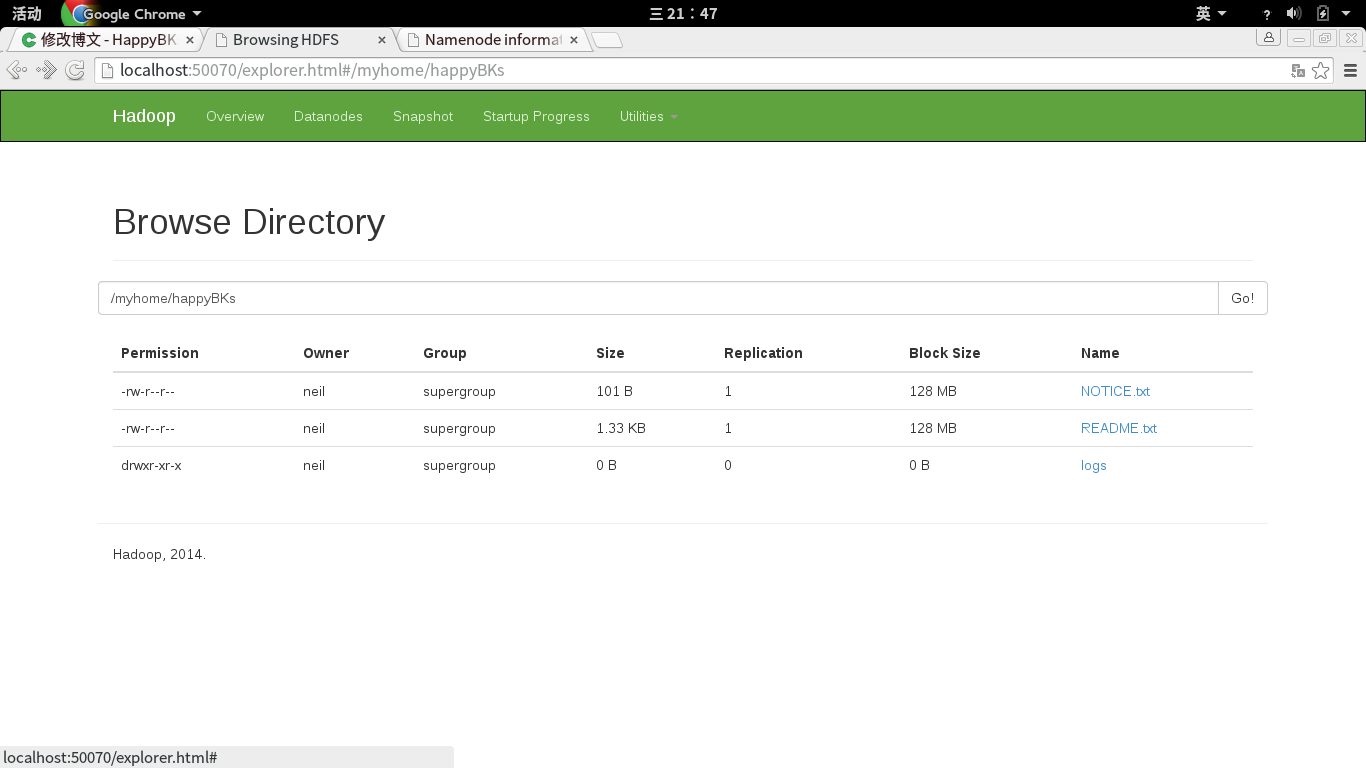

Next, let's add some files.

Here I add two files and an entire directory in one command:

```
[neil@neilhost hadoop-2.6.0]$ bin/hadoop fs -put README.txt NOTICE.txt logs/ /myhome/happyBKs
```
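Creating the directory and uploading can be combined; `-mkdir -p` creates any missing parent directories in a single call. A minimal sketch in which `upload_to_hdfs` and the `HDFS_CMD` override are hypothetical.

```sh
# upload_to_hdfs (hypothetical helper): create the target directory (parents
# included), copy local files/directories into it, then list the result.
upload_to_hdfs() {
    target="$1"; shift
    # HDFS_CMD is a hypothetical override; it is left unquoted on purpose so it
    # may hold a command plus arguments (a path containing spaces would break).
    hdfs_cmd="${HDFS_CMD:-bin/hdfs}"
    $hdfs_cmd dfs -mkdir -p "$target" || return 1
    $hdfs_cmd dfs -put "$@" "$target" || return 1
    $hdfs_cmd dfs -ls -R "$target"
}

# Usage, from the hadoop-2.6.0 directory:
# upload_to_hdfs /myhome/happyBKs README.txt NOTICE.txt logs/
```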

Let's look at HDFS:

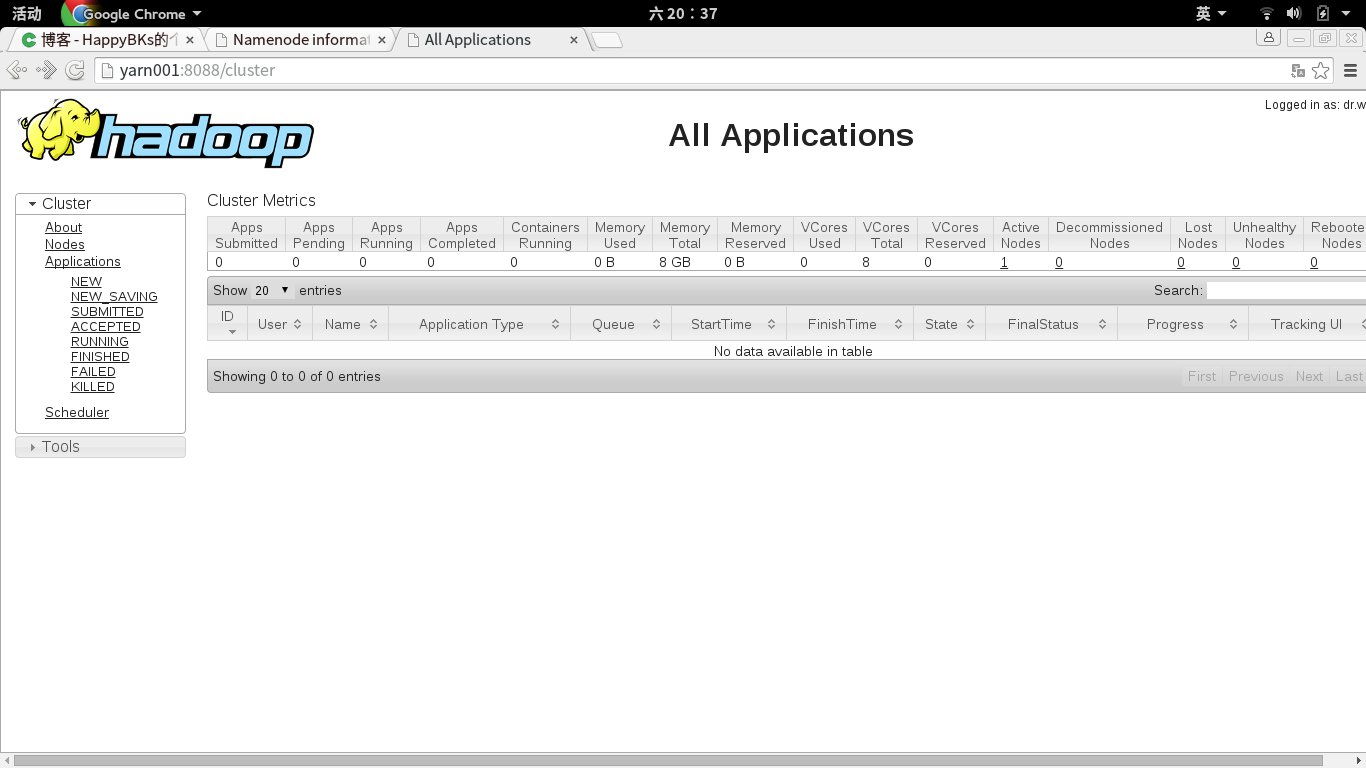

6. In step 5 we started DFS; now let's start YARN. Unlike DFS, YARN does not touch the data directly, so it can be started in one go, or its daemons can be started separately with sbin/yarn-daemon.sh start resourcemanager and sbin/yarn-daemon.sh start nodemanager.

Here I start everything at once with start-yarn.sh:

```
[neil@neilhost hadoop-2.6.0]$ sbin/start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /home/neil/Servers/hadoop-2.6.0/logs/yarn-neil-resourcemanager-neilhost.neildomain.out
localhost: ssh: connect to host localhost port 22: Connection refused
[neil@neilhost hadoop-2.6.0]$ sudo sbin/start-yarn.sh
[sudo] password for neil:
starting yarn daemons
starting resourcemanager, logging to /home/neil/Servers/hadoop-2.6.0/logs/yarn-root-resourcemanager-neilhost.neildomain.out
localhost: ssh: connect to host localhost port 22: Connection refused
[neil@neilhost hadoop-2.6.0]$
```

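The startup failed because the SSH connection was refused, and running it with sudo made no difference. start-yarn.sh starts the ResourceManager locally but launches a NodeManager on every host listed in etc/hadoop/slaves over ssh, so an SSH server must accept connections even when that host is localhost. (Running the scripts with sudo is best avoided anyway: as the yarn-root-... log name shows, it starts the daemons as root.)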
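Since start-yarn.sh reaches every host in etc/hadoop/slaves over ssh, a quick pre-flight check can show which hosts would fail before the script runs. A minimal sketch assuming OpenSSH's client; `preflight_ssh` and the `SSH_CMD` override are hypothetical.

```sh
# preflight_ssh (hypothetical helper): check that every host in the slaves
# file accepts a non-interactive ssh login before running start-yarn.sh.
preflight_ssh() {
    slaves_file="$1"
    ssh_cmd="${SSH_CMD:-ssh}"   # hypothetical override, e.g. for testing
    failed=0
    # Strip comments; the unquoted $(...) then splits on whitespace, dropping blank lines.
    for host in $(sed -e 's/#.*//' "$slaves_file"); do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "ok: $host"
        else
            echo "FAILED: $host (is sshd running? is your key in authorized_keys?)" >&2
            failed=1
        fi
    done
    return "$failed"
}

# Usage, from the hadoop-2.6.0 directory:
# preflight_ssh etc/hadoop/slaves && sbin/start-yarn.sh
```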

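First, make sure the SSH server is installed:

```
sudo yum install openssh-server
```

I found several solutions online, written for Ubuntu:

http://asyty.iteye.com/blog/1440141

http://blog.sina.com.cn/s/blog_573a052b0102dwxn.html

All of them failed when I tried them: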

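```
/etc/init.d/ssh -start
bash: /etc/init.d/ssh: No such file or directory
```

```
net start sshd
Invalid command: net start
```

The first is the Debian/Ubuntu init script (which also expects `start`, not `-start`); it does not exist on Fedora, where the service is called sshd and is managed by systemd. The second is a Windows-style command.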

The fix that finally worked:

```
[neil@neilhost hadoop-2.6.0]$ service sshd start
Redirecting to /bin/systemctl start sshd.service
[neil@neilhost hadoop-2.6.0]$ pstree -p | grep ssh
|-sshd(7937)
[neil@neilhost hadoop-2.6.0]$ ssh locahost
ssh: Could not resolve hostname locahost: Name or service not known
[neil@neilhost hadoop-2.6.0]$ ssh localhost
The authenticity of host 'localhost (127.0.0.1)' can't be established.
ECDSA key fingerprint is 88:17:a4:f2:dd:87:6f:ce:b4:04:07:d5:6c:ca:6c:b1.
Are you sure you want to continue connecting (yes/no)? y
Please type 'yes' or 'no': yes
Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
neil@localhost's password:
[neil@neilhost ~]$
```
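The transcript still ends at a password prompt, and start-yarn.sh will ask for that password again for each host. Key-based, passphrase-less SSH to localhost avoids this. A minimal sketch, assuming OpenSSH and a systemd-based distribution such as Fedora; `authorize_key` is a hypothetical helper.

```sh
# authorize_key (hypothetical helper): append a public key to authorized_keys
# once and apply the permissions sshd requires for key logins.
authorize_key() {
    pub_key="$1"; ssh_dir="${2:-$HOME/.ssh}"
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # Only append if this exact key line is not already present.
    if ! grep -qxF "$(cat "$pub_key")" "$ssh_dir/authorized_keys"; then
        cat "$pub_key" >> "$ssh_dir/authorized_keys"
    fi
    chmod 600 "$ssh_dir/authorized_keys"
}

# Usage:
# [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa   # key without passphrase
# authorize_key ~/.ssh/id_rsa.pub
# sudo systemctl enable sshd    # also start sshd at boot
# ssh localhost true            # should now return without a password prompt
```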

Then try starting YARN again:

```
[neil@neilhost hadoop-2.6.0]$ sbin/start-yarn.sh
starting yarn daemons
resourcemanager running as process 5115. Stop it first.
neil@localhost's password:
localhost: starting nodemanager, logging to /home/neil/Servers/hadoop-2.6.0/logs/yarn-neil-nodemanager-neilhost.neildomain.out
[neil@neilhost hadoop-2.6.0]$ jps
[neil@neilhost hadoop-2.6.0]$ jps
10113 Jps
7875 NameNode
9974 NodeManager
8936 ResourceManager
8136 DataNode
8430 SecondaryNameNode
```

The ResourceManager is now properly running, and jps shows the NodeManager as well. (I didn't write this article in one day, so the PIDs differ from the earlier ones.)

Now open yarn001:8088 to reach the ResourceManager web UI. Right after startup, Active Nodes may show 0; wait a while and refresh, and it should change to 1.

Note: if it stays at 0, use jps to check whether the NodeManager is running. If it is not, try starting it on its own with sbin/yarn-daemon.sh start nodemanager.
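Instead of refreshing the page, Active Nodes can be polled from the ResourceManager REST API. A minimal sketch, assuming curl and the default web port 8088; `active_nodes` is a hypothetical helper that just extracts the activeNodes field from the /ws/v1/cluster/metrics JSON.

```sh
# active_nodes (hypothetical helper): read ResourceManager cluster-metrics
# JSON on stdin and print the first activeNodes value found.
active_nodes() {
    sed -n 's/.*"activeNodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Usage:
# curl -s http://YARN001:8088/ws/v1/cluster/metrics | active_nodes
# bin/yarn node -list     # command-line alternative listing registered NodeManagers
```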


Beijing Public Security Network Filing No. 11010802041100 | Beijing ICP No. 19059560-4 | PHP1.CN First PHP Community, all rights reserved
