
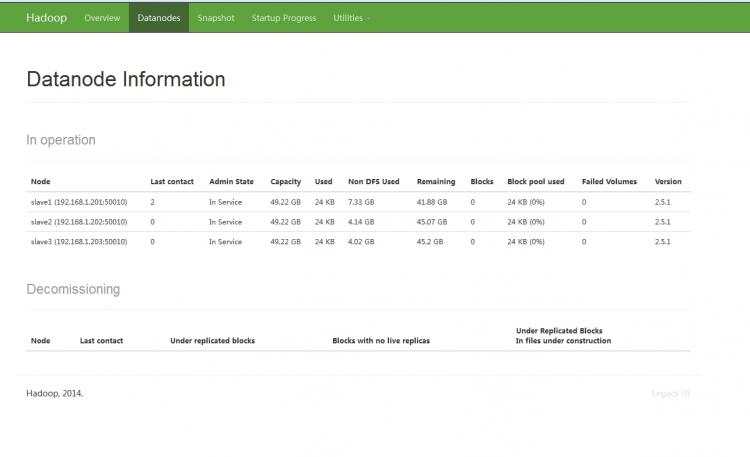

| Host | IP | NameNode | SecondaryNameNode | DataNode |
| --- | --- | --- | --- | --- |
| master | 192.168.1.200 | 1 | | |
| slave1 | 192.168.1.201 | | 1 | 1 |
| slave2 | 192.168.1.202 | | | 1 |
| slave3 | 192.168.1.203 | | | 1 |

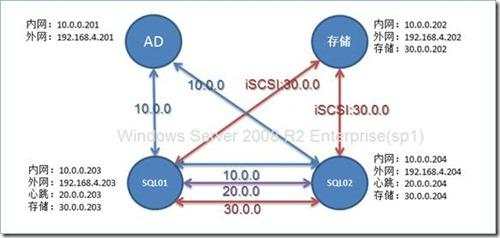

Network configuration: hosts file, firewall disabled

#vim /etc/hosts

192.168.1.200 master

192.168.1.201 slave1

192.168.1.202 slave2

192.168.1.203 slave3

### On Windows, add the same entries to the hosts file (C:\Windows\System32\drivers\etc\hosts)

192.168.1.200 master

192.168.1.201 slave1

192.168.1.202 slave2

192.168.1.203 slave3

service iptables stop

chkconfig iptables off
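
The hosts entries above have to be repeated on every machine, so it can help to add them idempotently. The following is only a sketch: the function names `add_host_entry` and `sync_cluster_hosts` are made up for illustration, and the target file is passed as an argument so the script can be tried on a scratch file before pointing it at /etc/hosts.

```shell
#!/usr/bin/env bash
# Sketch: append the cluster's host entries to a hosts file only when missing.
# The function names are hypothetical helpers, not part of any tool.

add_host_entry() {
    local ip="$1" name="$2" file="$3"
    # Skip the entry if some line already ends in, or contains, this hostname
    # as a separate whitespace-delimited field.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

sync_cluster_hosts() {
    local file="$1"
    add_host_entry 192.168.1.200 master "$file"
    add_host_entry 192.168.1.201 slave1 "$file"
    add_host_entry 192.168.1.202 slave2 "$file"
    add_host_entry 192.168.1.203 slave3 "$file"
}
```

Calling `sync_cluster_hosts /etc/hosts` as root would then be safe to repeat, since a second run finds the entries already present and appends nothing.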

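Time synchronization (keep the clocks of the NameNode, SecondaryNameNode and DataNodes in sync)

### s1a.time.du.cn is the time synchronization server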

ntpdate s1a.time.du.cn

Passwordless SSH setup

### Run the following on the master host

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master

ssh-copy-id -i ~/.ssh/id_rsa.pub root@slave1

ssh-copy-id -i ~/.ssh/id_rsa.pub root@slave2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@slave3

### Test access

ssh slave1
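
The key distribution above can also be written as a loop over the four hosts. This is a minimal sketch, not a required step: `copy_keys`, `check_logins` and the `DRY_RUN` switch are hypothetical names introduced here, and with `DRY_RUN=1` (the default in this sketch) the commands are only printed. `BatchMode=yes` is a standard OpenSSH option that makes `ssh` fail instead of asking for a password, which is useful for confirming that key-based login really works.

```shell
#!/usr/bin/env bash
# Sketch: distribute master's public key to every node and verify the logins.
# DRY_RUN=1 (default here) prints the commands instead of running them.

CLUSTER_NODES="master slave1 slave2 slave3"

copy_keys() {
    local node
    for node in $CLUSTER_NODES; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@${node}"
        else
            ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@${node}"
        fi
    done
}

# Check that each node accepts key-based login without prompting.
check_logins() {
    local node
    for node in $CLUSTER_NODES; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh -o BatchMode=yes root@${node} hostname"
        else
            ssh -o BatchMode=yes "root@${node}" hostname
        fi
    done
}
```

Running `DRY_RUN=0 copy_keys` on master performs the actual copy; `DRY_RUN=0 check_logins` should then print each node's hostname without any password prompt.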

JDK and environment variable configuration

#cd /tmp/

#tar xf hadoop-2.5.1_x64.tar.gz

#mv hadoop-2.5.1 /opt/hadoop

#vim /etc/profile

### Add the following lines (the Hadoop environment variables are needed on every machine)

export JAVA_HOME=/usr/java/default

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/opt/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

#source /etc/profile

Test

#echo $JAVA_HOME

#echo $HADOOP_HOME
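
Because the exports in /etc/profile append to PATH every time the file is sourced, the same directories can pile up in PATH. One possible way to keep it clean is sketched below; `path_contains` and `append_path_once` are hypothetical helper names, not standard commands.

```shell
#!/usr/bin/env bash
# Sketch: append a directory to PATH only if it is not already listed.

path_contains() {
    # Wrap PATH in colons so that only whole entries match.
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

append_path_once() {
    path_contains "$1" || PATH="$PATH:$1"
}
```

In /etc/profile this could be used as `append_path_once "$HADOOP_HOME/bin"` followed by `append_path_once "$HADOOP_HOME/sbin"` and a final `export PATH`.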

Edit the configuration files

etc/hadoop/core-site.xml:

slave1

#vim etc/hadoop/slaves (create this file manually)

slave1

slave2

slave3
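
As a rough illustration of what these two files might contain, the sketch below writes a minimal core-site.xml and the slaves list into a directory of your choice. The values are assumptions, not taken from this setup: `hdfs://master:9000` as the `fs.defaultFS` address and `/opt/hadoop/tmp` as `hadoop.tmp.dir` are common choices only, and `write_core_site` and `write_slaves` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: generate a minimal core-site.xml and slaves file.
# ASSUMPTIONS (not from the original text): the NameNode address
# hdfs://master:9000 and the hadoop.tmp.dir value /opt/hadoop/tmp.

write_core_site() {
    local conf_dir="$1"
    mkdir -p "$conf_dir"
    cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/tmp</value>
  </property>
</configuration>
EOF
}

# One DataNode hostname per line, matching the slaves list above.
write_slaves() {
    local conf_dir="$1"
    mkdir -p "$conf_dir"
    printf '%s\n' slave1 slave2 slave3 > "$conf_dir/slaves"
}
```

Writing into a scratch directory first, for example `write_core_site /tmp/conf-preview`, makes it easy to review the result before copying it to `$HADOOP_HOME/etc/hadoop`.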

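Sync the configuration files, then format

### Copy the whole Hadoop directory, including all configuration files, from master to the other machines (*)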

scp -r /opt/hadoop root@slave1:/opt/

scp -r /opt/hadoop root@slave2:/opt/

scp -r /opt/hadoop root@slave3:/opt/

### Format the NameNode (on the master host)

hdfs namenode -format

start-dfs.sh
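
Formatting is meant to happen once, before the first start; re-formatting a NameNode that already holds metadata gives it a new cluster ID that existing DataNodes will not accept. A small guard can make this explicit. The sketch below only prints what it would do, `format_if_needed` is a hypothetical helper, and the default `NAME_DIR` assumes Hadoop's default `hadoop.tmp.dir` (/tmp/hadoop-<user>) for user root, so adjust it if `dfs.namenode.name.dir` or `hadoop.tmp.dir` is set differently.

```shell
#!/usr/bin/env bash
# Sketch: decide whether the NameNode still needs to be formatted.
# ASSUMPTION: NAME_DIR is the directory dfs.namenode.name.dir points to.

NAME_DIR="${NAME_DIR:-/tmp/hadoop-root/dfs/name}"

# A formatted NameNode directory contains current/VERSION.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

format_if_needed() {
    local name_dir="${1:-$NAME_DIR}"
    if namenode_is_formatted "$name_dir"; then
        echo "skip: $name_dir is already formatted"
    else
        echo "run: hdfs namenode -format"
    fi
}
```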

Open the NameNode web UI in a browser: http://192.168.1.200:50070
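
Besides the web UI, the running daemons can be checked with `jps` from the JDK, which prints one `<pid> <ClassName>` line per Java process. The sketch below compares that output with the roles each host is expected to run. It assumes the role layout master = NameNode, slave1 = SecondaryNameNode and DataNode, slave2 and slave3 = DataNode; `expected_daemons` and `missing_daemons` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: report which expected HDFS daemons are absent from `jps` output.
# ASSUMPTION: the host-to-role mapping below reflects the intended layout.

expected_daemons() {
    case "$1" in
        master) echo "NameNode" ;;
        slave1) echo "SecondaryNameNode DataNode" ;;
        slave2|slave3) echo "DataNode" ;;
        *) return 1 ;;
    esac
}

# Reads `jps` output on stdin; prints the missing daemons, or nothing if all run.
missing_daemons() {
    local host="$1" jps_out daemon missing=""
    jps_out=$(cat)
    for daemon in $(expected_daemons "$host"); do
        # -w matches whole words, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$jps_out" | grep -qw "$daemon"; then
            missing="$missing $daemon"
        fi
    done
    echo "${missing# }"
}
```

From master, `ssh root@slave1 jps | missing_daemons slave1` would print nothing when both daemons are up, assuming `jps` is on the PATH of the remote non-interactive shell.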
