import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

# Suppress warnings

import warnings

warnings.filterwarnings('ignore')

# Load the training and test sets

train_df=pd.read_csv('train.csv')

# train_df.head()

test_df=pd.read_csv('test.csv')

combine=[train_df,test_df]

# Back up PassengerId now, because this column is dropped later
PassengerId=test_df['PassengerId']

Feature analysis

Analyze how the survival rate relates to several features.

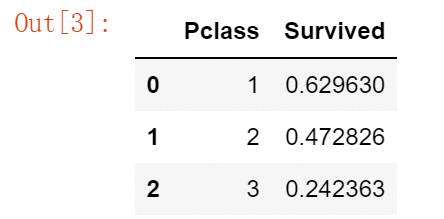

# Relationship between Pclass and Survived

train_df[['Pclass','Survived']].groupby(['Pclass'],as_index=False).mean().sort_values(by='Survived',ascending=False)

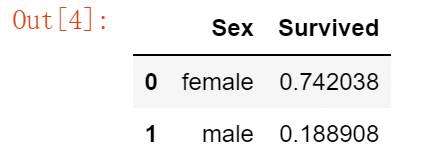

# Relationship between Sex and Survived

train_df[['Sex','Survived']].groupby(['Sex'],as_index=False).mean().sort_values(by='Survived',ascending=False)

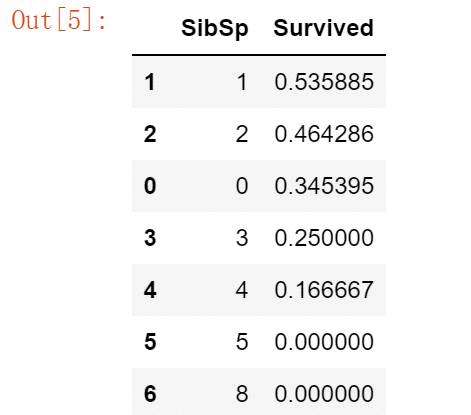

# Relationship between SibSp and Survived

train_df[['SibSp','Survived']].groupby(['SibSp'],as_index=False).mean().sort_values(by='Survived',ascending=False)

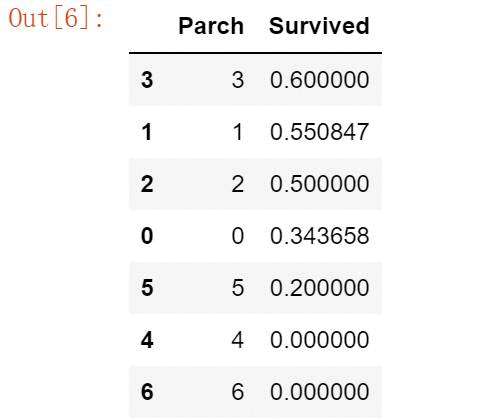

# Relationship between Parch and Survived

train_df[['Parch','Survived']].groupby(['Parch'],as_index=False).mean().sort_values(by='Survived',ascending=False)

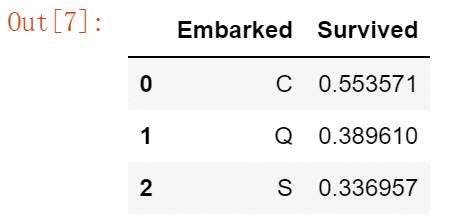

# Relationship between Embarked and Survived

train_df[['Embarked','Survived']].groupby(['Embarked'],as_index=False).mean().sort_values(by='Survived',ascending=False)
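The five groupby calls above all follow the same pattern, so they can be folded into one small helper. The sketch below is only an illustration: `survival_rate_by` is a hypothetical name, and the tiny `sample` frame is made-up data that borrows the column names of train.csv.

```python
import pandas as pd

# Made-up rows that reuse the train.csv column names (illustrative only).
sample = pd.DataFrame({
    'Pclass':   [1, 1, 2, 3, 3, 3],
    'Sex':      ['female', 'male', 'female', 'male', 'male', 'female'],
    'Survived': [1, 0, 1, 0, 0, 1],
})

def survival_rate_by(df, feature):
    """Mean of Survived for each value of `feature`, highest rate first."""
    return (df[[feature, 'Survived']]
            .groupby(feature, as_index=False)
            .mean()
            .sort_values(by='Survived', ascending=False))

# One table per feature instead of one hand-written statement per feature.
rates = {feature: survival_rate_by(sample, feature) for feature in ['Pclass', 'Sex']}
for feature, table in rates.items():
    print(table)
```

With the real data, the same call would be `survival_rate_by(train_df, 'Pclass')` and so on for each feature.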

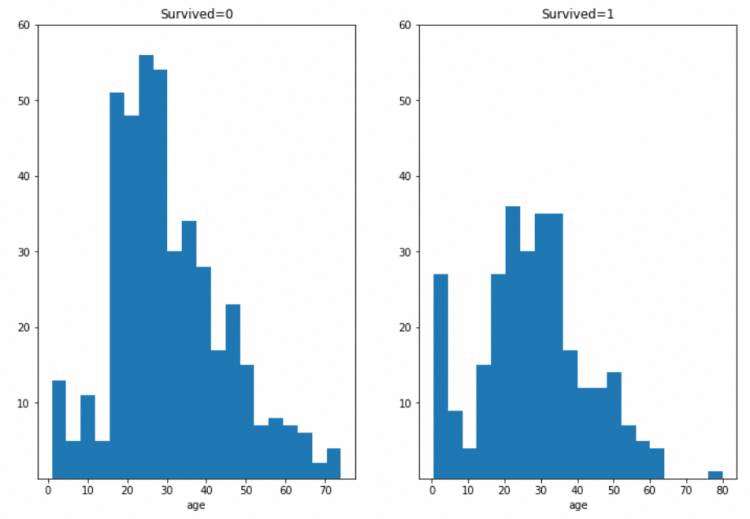

# Plot age histograms for passengers who died and passengers who survived

fig=plt.figure(figsize=(12,8))

ax1=fig.add_subplot(1,2,1)

ax2=fig.add_subplot(1,2,2)

age1=train_df.Age[train_df['Survived']==0]

age2=train_df.Age[train_df['Survived']==1]

ax1.set_title('Survived=0')

ax1.hist(age1,bins=20)

ax1.set_xlabel('age')

ax1.set_yticks([10,20,30,40,50,60])

ax2.set_title('Survived=1')

ax2.hist(age2,bins=20)

ax2.set_xlabel('age')

# Use the same y-axis ticks on both plots so deaths and survivals are easy to compare
ax2.set_yticks([10,20,30,40,50,60])

plt.show()

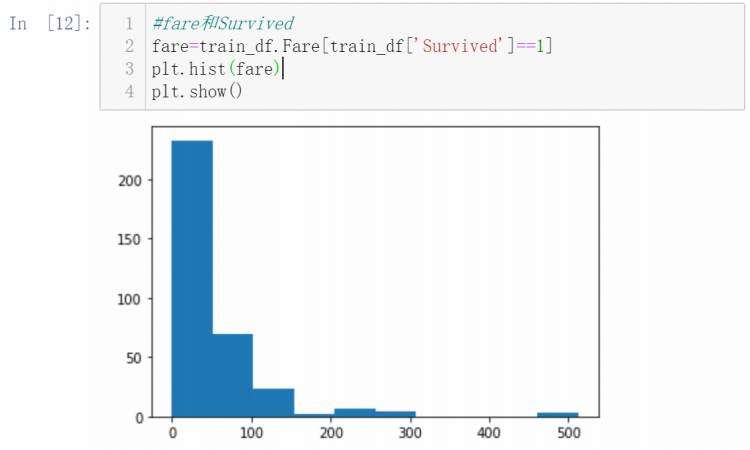

# Plot the Fare distribution of the passengers who survived

fare=train_df.Fare[train_df['Survived']==1]

plt.hist(fare)

plt.show()

Data cleaning

# Drop features that are not useful

train_df=train_df.drop(['Ticket','Cabin'],axis=1)

test_df=test_df.drop(['Ticket','Cabin'],axis=1)

combine=[train_df,test_df]

train_df.head()

#创建新特征train_df.Name.str.extract(' ([a-zA-Z]+)\.',expand=False)

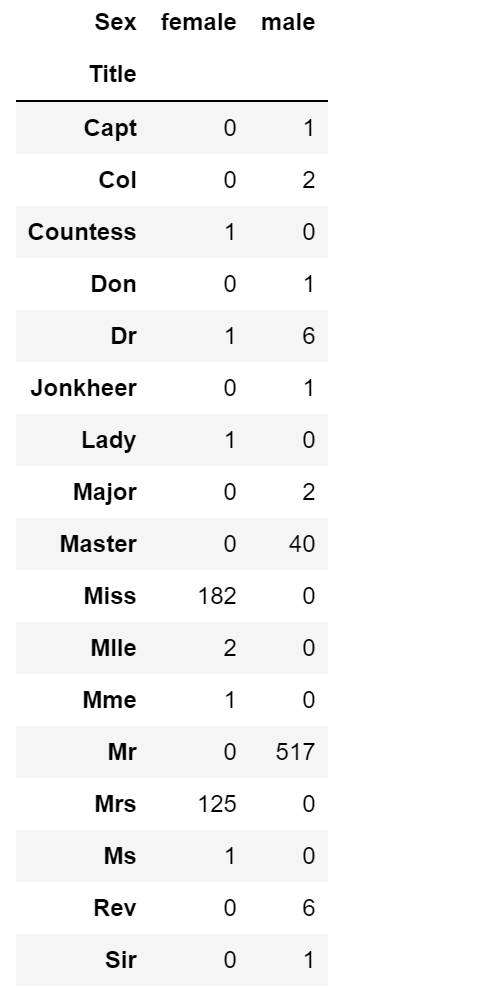

#通过正则表达式 将姓名中与性别有关的称呼截取出来 创建为新的特征for database in combine:database['Title']=database.Name.str.extract(' ([a-zA-Z]+)\.',expand=False)pd.crosstab(train_df['Title'],train_df['Sex'])

#crosstab 交叉提取列 两个参数(列,行)
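To make the regular expression concrete, here is a minimal sketch on three names written in the dataset's "Surname, Title. Given names" format. The `sex` values are supplied by hand for the example. The pattern captures the run of letters that follows a space and ends with a period.

```python
import pandas as pd

# Three names in the "Surname, Title. Given names" format used by the dataset.
names = pd.Series([
    'Braund, Mr. Owen Harris',
    'Cumings, Mrs. John Bradley (Florence Briggs Thayer)',
    'Heikkinen, Miss. Laina',
])
sex = pd.Series(['male', 'female', 'female'])  # hand-written for this example

# A space, then letters (captured), then a literal period.
titles = names.str.extract(r' ([A-Za-z]+)\.', expand=False)

# Rows come from the first argument, columns from the second.
table = pd.crosstab(titles, sex, rownames=['Title'], colnames=['Sex'])
print(table)
```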

#分类title 归为几个大类

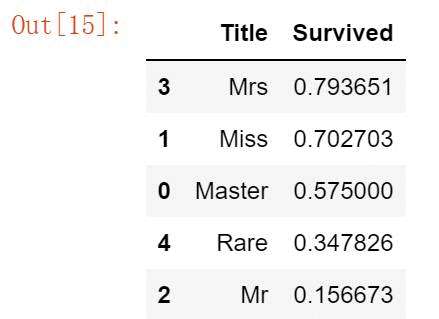

for database in combine:database['Title']=database['Title'].replace(['Lady','Countess','Capt','Col','Don','Dr','Major','Rev','Sir','Jonkheer','Dona'],'Rare')#将出现次数少的归类为Rare一类database['Title']=database['Title'].replace('Mlle','Miss')#将表达为同一意思的称呼归为一类database['Title']=database['Title'].replace('Ms','Miss')database['Title']=database['Title'].replace('Mme','Mrs')

#replace函数 两个参数(被替换内容,替换后内容) train_df[['Title','Survived']].groupby(['Title'],as_index=False).mean().sort_values(by='Survived',ascending=False)
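The four replace calls can also be expressed as a single dictionary lookup, which keeps all the title rules in one place. This is a sketch of an alternative, not the author's code; `normalize_titles`, `RARE_TITLES` and `SYNONYMS` are hypothetical names.

```python
import pandas as pd

RARE_TITLES = ['Lady', 'Countess', 'Capt', 'Col', 'Don', 'Dr',
               'Major', 'Rev', 'Sir', 'Jonkheer', 'Dona']
SYNONYMS = {'Mlle': 'Miss', 'Ms': 'Miss', 'Mme': 'Mrs'}

def normalize_titles(titles):
    """Map rare titles to 'Rare' and merge synonyms; other titles are kept."""
    mapping = {title: 'Rare' for title in RARE_TITLES}
    mapping.update(SYNONYMS)
    # Series.replace with a dict only touches values that appear as keys.
    return titles.replace(mapping)

sample = pd.Series(['Mr', 'Mlle', 'Dr', 'Mme', 'Master', 'Ms'])
normalized = normalize_titles(sample)
print(normalized.tolist())
```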

# Drop two columns that are no longer needed

train_df.drop(['PassengerId','Name'],axis=1,inplace=True)

test_df.drop(['PassengerId','Name'],axis=1,inplace=True)

combine=[train_df,test_df]

train_df

数据填充

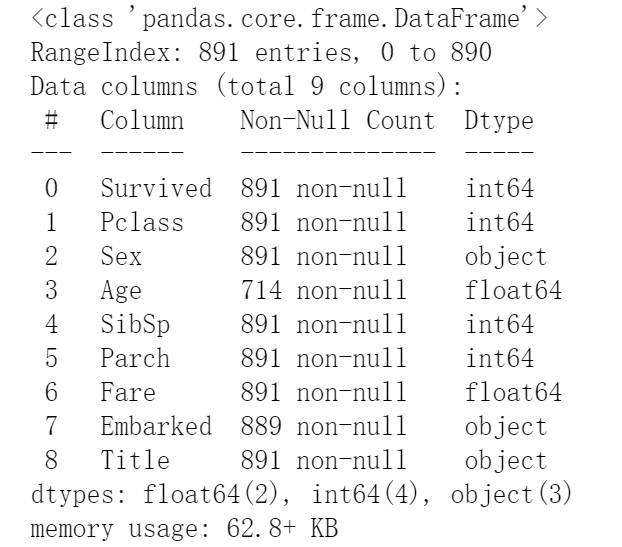

#快速查找缺失值

train_df.info()

从图中会发现 Age 和 Embarked 都有缺失值
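Besides reading the info() output, the missing counts can be listed directly. The sketch below uses a small made-up frame; on the real data the same two lines would be applied to train_df.

```python
import numpy as np
import pandas as pd

# Made-up rows with gaps in Age and Embarked (illustrative only).
sample = pd.DataFrame({
    'Age':      [22.0, np.nan, 26.0],
    'Embarked': ['S', None, 'C'],
    'Fare':     [7.25, 71.28, 7.92],
})

# Count missing values per column and keep only the columns that have any.
missing = sample.isna().sum()
missing = missing[missing > 0]
print(missing)
```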

# Fill Embarked (few values are missing, so use the mode)

freq=train_df.Embarked.dropna().mode()[0]

# mode returns the most frequent value

for database in combine:
    database['Embarked']=database['Embarked'].fillna(freq)
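A minimal sketch of the mode fill on a made-up series. Series.mode skips missing values by default, so calling dropna() first is optional.

```python
import numpy as np
import pandas as pd

# Made-up ports of embarkation with two gaps (illustrative only).
embarked = pd.Series(['S', 'C', 'S', np.nan, 'Q', 'S', np.nan])

# mode() returns a Series because ties are possible; [0] takes the first mode.
most_common = embarked.mode()[0]
filled = embarked.fillna(most_common)
print(filled.tolist())
```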

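# Fill Age (many values are missing, so the mode is not suitable; estimate the value from other features instead)

grp = train_df.groupby(['Pclass','Sex','Title'])['Age'].mean().reset_index()

# Define a function that looks up the fill value for a row

def fill_age(x):
    # Return the mean Age of the group whose Pclass, Sex and Title match this row
    return grp[(grp['Pclass']==x['Pclass']) & (grp['Sex']==x['Sex']) & (grp['Title']==x['Title'])].Age.values[0]

# Use a lambda to check whether Age is missing and call fill_age only in that case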

train_df['Age'] = train_df.apply(lambda x: fill_age(x) if np.isnan(x['Age']) else x['Age'] ,axis=1)

test_df['Age'] = test_df.apply(lambda x: fill_age(x) if np.isnan(x['Age']) else x['Age'] ,axis=1)

combine = [train_df,test_df]
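The row-by-row lookup above fails with an IndexError if a row belongs to a (Pclass, Sex, Title) combination that never occurs in the training set, and it returns NaN if every Age in that group is missing. The sketch below shows one possible variant that merges the group means and falls back to the overall training mean. The fallback rule and the names `fill_age_from_groups`, `group_means` and `overall_mean` are assumptions of this example, and the frames are made-up data.

```python
import numpy as np
import pandas as pd

# Made-up rows (illustrative only).
train = pd.DataFrame({
    'Pclass': [1, 1, 3, 3, 3],
    'Sex':    ['male', 'male', 'female', 'female', 'male'],
    'Title':  ['Mr', 'Mr', 'Miss', 'Miss', 'Mr'],
    'Age':    [40.0, np.nan, 20.0, 30.0, np.nan],
})
test = pd.DataFrame({
    'Pclass': [3, 2],
    'Sex':    ['female', 'male'],
    'Title':  ['Miss', 'Mr'],
    'Age':    [np.nan, np.nan],
})

keys = ['Pclass', 'Sex', 'Title']
# Mean Age per group, computed on the training rows only.
group_means = train.groupby(keys)['Age'].mean().rename('GroupAge').reset_index()
overall_mean = train['Age'].mean()

def fill_age_from_groups(df):
    """Fill missing Age with the group mean, then with the overall mean."""
    merged = df.merge(group_means, on=keys, how='left')
    # merge resets the index, so realign the looked-up means with df.
    group_age = pd.Series(merged['GroupAge'].values, index=df.index)
    return df['Age'].fillna(group_age).fillna(overall_mean)

train_filled = fill_age_from_groups(train)
test_filled = fill_age_from_groups(test)
print(train_filled.tolist(), test_filled.tolist())
```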

Handling categorical features

Convert each category to a number: 0, 1, 2, 3, ...

# Encode Sex as 1 and 0

for database in combine:
    database['Sex'] = database['Sex'].map({'female':1,'male':0}).astype(int)

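# Encode Title

title_mapping = {'Mr':1 ,'Miss':2 ,'Mrs':3 ,'Master':4 ,'Rare':5}

for database in combine:
    database['Title'] = database['Title'].map(title_mapping).astype(int)

# Encode Embarked too, since the models below need numeric input (the codes 0/1/2 are an arbitrary choice)

for database in combine:
    database['Embarked'] = database['Embarked'].map({'S':0,'C':1,'Q':2}).astype(int)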
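One thing to watch with map followed by astype(int): any value that is not a key of the mapping becomes NaN, and converting NaN to int raises an error. The sketch below shows the behaviour on made-up titles. Using 0 as the code for unknown titles is an assumption of this example, not part of the original mapping.

```python
import pandas as pd

title_mapping = {'Mr': 1, 'Miss': 2, 'Mrs': 3, 'Master': 4, 'Rare': 5}

# 'Unknown' stands in for a title that the mapping does not cover.
titles = pd.Series(['Mr', 'Miss', 'Rare', 'Unknown'])

codes = titles.map(title_mapping)           # unmapped values become NaN
codes_filled = codes.fillna(0).astype(int)  # assumed fallback code: 0
print(codes_filled.tolist())
```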

将连续特征转化为离散特征

将连续的数据 分为几组\几类

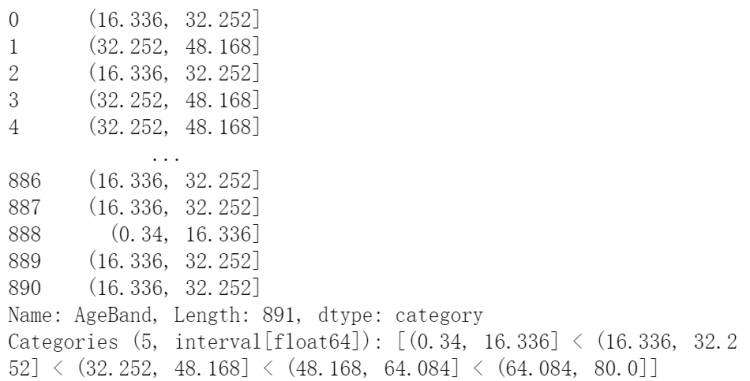

#处理Age离散化#将age分为五组 准确分组

train_df['AgeBand'] =pd.cut(train_df['Age'],5)

train_df['AgeBand']

通过切割数据 可以将Age准确的分为五个组 并将不同组用1.2.3.4.5代替

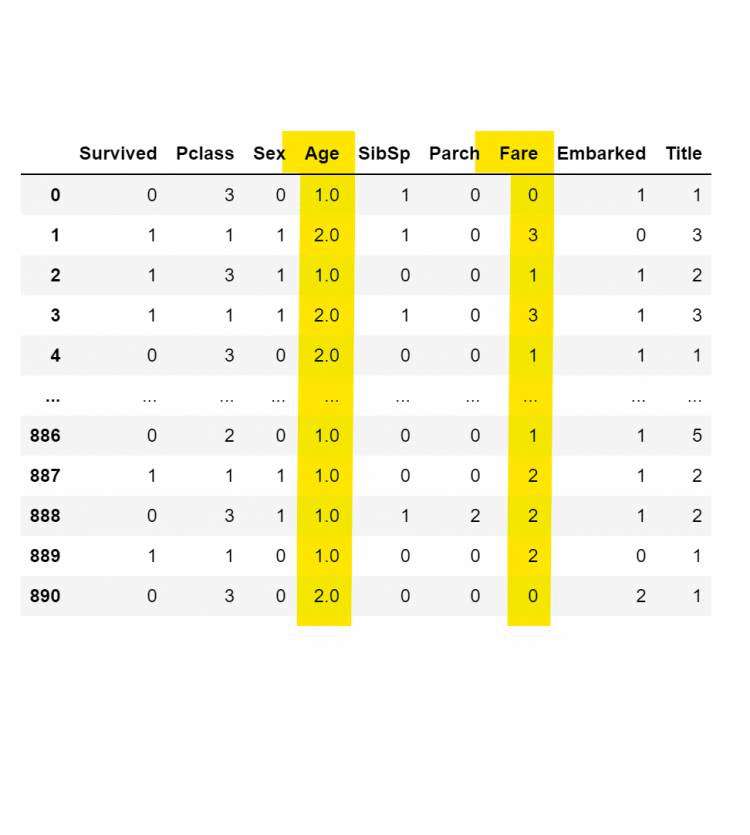

for database in combine:
    database.loc[database['Age']<16,'Age']=0
    database.loc[(database['Age']>=16)&(database['Age']<32),'Age']=1
    database.loc[(database['Age']>=32)&(database['Age']<48),'Age']=2
    database.loc[(database['Age']>=48)&(database['Age']<64),'Age']=3
    database.loc[database['Age']>=64,'Age']=4

train_df.drop(['AgeBand'],axis=1,inplace=True)

combine=[train_df,test_df]

train_df
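The five threshold assignments can also be written as one pd.cut call with explicit edges and labels=False, which returns the band code directly. This is a sketch with made-up ages; the edges 16, 32, 48 and 64 are the rounded thresholds used in the loop above.

```python
import numpy as np
import pandas as pd

# Made-up ages that sit on and around each threshold.
ages = pd.Series([0.42, 15.9, 16.0, 31.0, 32.0, 47.5, 48.0, 63.0, 64.0, 80.0])

# right=False makes each bin closed on the left: [16, 32), [32, 48), ...
edges = [-np.inf, 16, 32, 48, 64, np.inf]
age_codes = pd.cut(ages, bins=edges, right=False, labels=False)
print(age_codes.tolist())
```

Because the outer edges are infinite, ages outside the range seen in training still receive code 0 or 4 instead of NaN.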

Use qcut to process Fare

# Process Fare (equal-width cut is not suitable here; qcut splits the data into bins with roughly equal counts)

train_df['FareBand']=pd.qcut(train_df['Fare'],4,duplicates='drop')

# Fill the missing value in test_df (only one value is missing, so use the mean)

test_df['Fare'] = test_df['Fare'].fillna(test_df['Fare'].mean())

for dataset in combine:
    dataset.loc[dataset['Fare']<=7.91,'Fare']=0
    dataset.loc[(dataset['Fare']>7.91)&(dataset['Fare']<=14.454),'Fare']=1
    dataset.loc[(dataset['Fare']>14.454)&(dataset['Fare']<=31),'Fare']=2
    dataset.loc[dataset['Fare']>31,'Fare']=3
    dataset['Fare'] = dataset['Fare'].astype(int)

train_df.drop(['FareBand'],axis=1,inplace=True)

combine=[train_df,test_df]

train_df
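Instead of copying the quartile edges (7.91, 14.454, 31) by hand, qcut can return them through retbins=True so that the same edges are reused on the test set. A hedged sketch with made-up fares follows; widening the outer edges to infinity is a choice made for this example so that test fares outside the training range still get a code.

```python
import numpy as np
import pandas as pd

# Made-up fares (illustrative only).
train_fare = pd.Series([5.0, 7.0, 8.0, 10.0, 14.0, 20.0, 30.0, 100.0])
test_fare = pd.Series([6.0, 11.0, 500.0])

# Learn the quartile edges from the training fares only.
_, edges = pd.qcut(train_fare, 4, retbins=True, duplicates='drop')
edges = np.array(edges, dtype=float)
edges[0], edges[-1] = -np.inf, np.inf  # cover values outside the training range

# Apply the same edges to both sets; labels=False yields integer codes.
train_codes = pd.cut(train_fare, bins=edges, labels=False)
test_codes = pd.cut(test_fare, bins=edges, labels=False)
print(train_codes.tolist(), test_codes.tolist())
```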

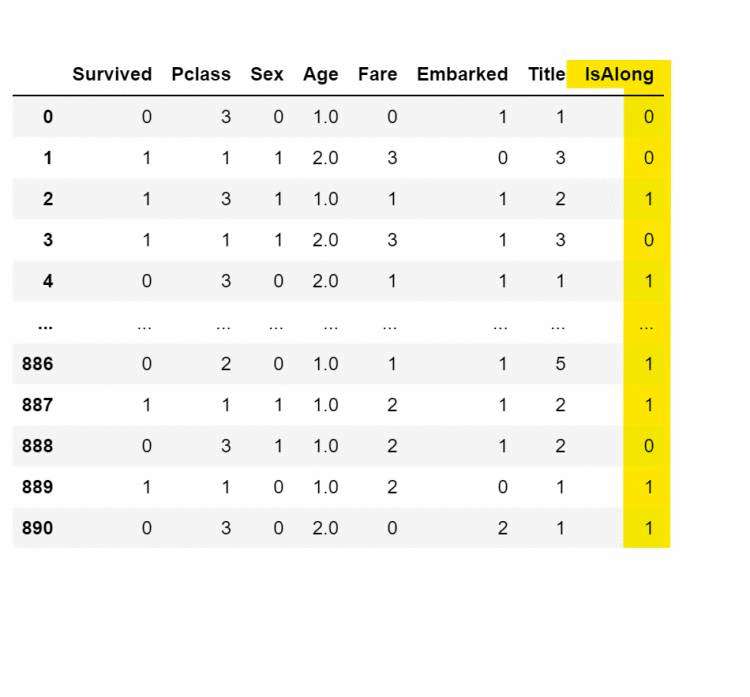

Combining features

Combine SibSp (siblings and spouses) and Parch (parents and children) into a single Family feature.

Then use Family to decide whether the passenger was travelling alone (IsAlong).

for database in combine:
    database['Family']=database['SibSp'] + database['Parch'] + 1

# train_df.head()

for database in combine:
    database['IsAlong']=1
    database.loc[database['Family']>1,'IsAlong']=0

train_df.drop(['SibSp','Parch','Family'],inplace=True,axis=1)

test_df.drop(['SibSp','Parch','Family'],inplace=True,axis=1)

train_df
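The same two features can be derived without a default value and an overwrite, by comparing the family size to 1. A minimal sketch on made-up rows:

```python
import pandas as pd

# Made-up sibling/spouse and parent/child counts (illustrative only).
sample = pd.DataFrame({'SibSp': [1, 0, 0, 3], 'Parch': [0, 0, 2, 1]})

# Family counts the passenger too, hence the +1.
sample['Family'] = sample['SibSp'] + sample['Parch'] + 1

# A family of exactly one person means the passenger travelled alone.
sample['IsAlong'] = (sample['Family'] == 1).astype(int)
print(sample)
```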

Building models

# Select the variables

X_train = train_df.drop('Survived',axis=1)

Y_train = train_df['Survived']

# Logistic regression

from sklearn.linear_model import LogisticRegression

logreg = LogisticRegression()  # instantiate the model

logreg.fit(X_train,Y_train)  # fit the model

Y_pred = logreg.predict(test_df)  # predict on the test set

# round(..., 2) keeps two decimal places; the score is accuracy on the training set
acc_log = round(logreg.score(X_train,Y_train)*100,2)

# Support vector machine (SVC)

from sklearn.svm import SVC

svc = SVC()

svc.fit(X_train,Y_train)

Y_pred = svc.predict(test_df)

acc_svc = round(svc.score(X_train,Y_train)*100,2)

# Decision tree

from sklearn.tree import DecisionTreeClassifier

decision_tree = DecisionTreeClassifier()

decision_tree.fit(X_train,Y_train)

Y_pred = decision_tree.predict(test_df)

acc_decision_tree = round(decision_tree.score(X_train,Y_train)*100,2)

# Random forest

from sklearn.ensemble import RandomForestClassifier

random_forest = RandomForestClassifier()

random_forest.fit(X_train,Y_train)

Y_pred = random_forest.predict(test_df)

acc_random_forest = round(random_forest.score(X_train,Y_train)*100,2)

# K-nearest neighbors

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier()

knn.fit(X_train,Y_train)

Y_pred = knn.predict(test_df)

acc_knn = round(knn.score(X_train,Y_train)*100,2)

# Gaussian naive Bayes

from sklearn.naive_bayes import GaussianNB

gaussian = GaussianNB()

gaussian.fit(X_train,Y_train)

Y_pred = gaussian.predict(test_df)

acc_gaussian = round(gaussian.score(X_train,Y_train)*100,2)

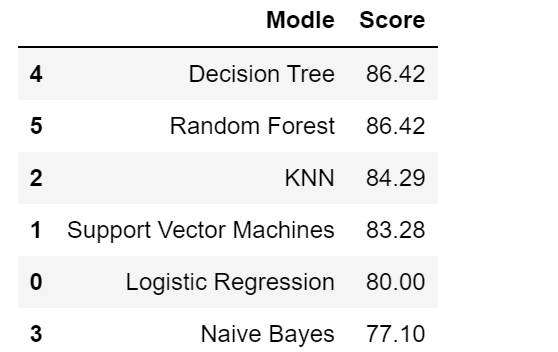

Rank the models by score

models = pd.DataFrame({
    # Build the table from a dictionary
    'Model':['Logistic Regression','Support Vector Machines','KNN','Naive Bayes','Decision Tree','Random Forest'],
    'Score':[acc_log,acc_svc,acc_knn,acc_gaussian,acc_decision_tree,acc_random_forest]
})

models.sort_values(by='Score',ascending=False)
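The scores in this ranking are measured on the same rows the models were fitted on, which tends to favour flexible models such as decision trees. Cross-validation gives an estimate on rows the model has not seen. The sketch below is an illustration on random made-up data with random labels, so a good model should score near 50%; with the real data, `X` and `y` would be X_train and Y_train.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Random features and random labels: there is nothing real to learn here.
rng = np.random.default_rng(0)
X = rng.integers(0, 4, size=(200, 5))
y = rng.integers(0, 2, size=200)

tree = DecisionTreeClassifier(random_state=0)

# Accuracy on the rows used for fitting (what the ranking above reports).
train_acc = tree.fit(X, y).score(X, y)

# Mean accuracy over 5 held-out folds.
cv_acc = cross_val_score(tree, X, y, cv=5).mean()

print(round(train_acc * 100, 2), round(cv_acc * 100, 2))
```

The gap between the two numbers shows how much the tree memorizes its training rows, which is why ranking by cross-validated accuracy is usually the safer comparison.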

Prediction

Based on the ranking above, which uses accuracy on the training set, the decision tree can be chosen to predict the test data.

# Decision tree

from sklearn.tree import DecisionTreeClassifier

decision_tree = DecisionTreeClassifier()

decision_tree.fit(X_train,Y_train)

Y_pred = decision_tree.predict(test_df)

acc_decision_tree = round(decision_tree.score(X_train,Y_train)*100,2)

# Build a new DataFrame that holds the predictions

submission = pd.DataFrame({'PassengerId':PassengerId,'Survived':Y_pred})

# Write the result to a file

submission.to_csv('submission.csv',index=False)

submission
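Before uploading, it can help to check that the file has exactly the two expected columns and one row per test passenger. The sketch below writes to an in-memory buffer instead of a file, and the IDs and predictions are made-up values.

```python
import io
import pandas as pd

# Made-up IDs and predictions (illustrative only).
passenger_ids = pd.Series([892, 893, 894], name='PassengerId')
predictions = [0, 1, 0]

submission = pd.DataFrame({'PassengerId': passenger_ids, 'Survived': predictions})

# index=False keeps the row index out of the CSV.
buffer = io.StringIO()
submission.to_csv(buffer, index=False)
lines = buffer.getvalue().splitlines()

# Header plus one line per passenger.
assert lines[0] == 'PassengerId,Survived'
assert len(lines) == len(passenger_ids) + 1
print(lines)
```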

京公网安备 11010802041100号 | 京ICP备19059560号-4 | PHP1.CN 第一PHP社区 版权所有
