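Most of the code in this example comes from the blog post 学习OpenCV——通过KeyPoints进行目标定位 ("Learning OpenCV: object localization with KeyPoints"), with a few small changes. Keypoints are detected with SURF and described with SURF, the matcher is FlannBased, and matching is done in KNN mode. Finally, findHomography estimates the homography matrix and perspectiveTransform maps the corners of the reference image into the scene to locate the target. Along the way, the homography is also used to discard false matches. The listing as posted sets both type strings to "SIFT"; change them to "SURF" to match this description. This post only gives the code and some notes on the functions it uses. I have not fully worked out the theory yet, and I hope to come back and add to this once I understand the algorithms.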

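1. Code implementation

#include "stdafx.h"
#include "opencv2/opencv.hpp"
#include "opencv2/nonfree/nonfree.hpp"  // assumed header: SIFT/SURF live in the nonfree module in OpenCV 2.4
#include <cstdio>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

Mat src, frameImg;   // reference (query) image and the camera frame that gets annotated
int width;
int height;
vector<Point2f> srcCorner(4);   // element type assumed
vector<Point2f> dstCorner(4);   // element type assumed

static bool createDetectorDescriptorMatcher( const string& detectorType,
                                             const string& descriptorType,
                                             const string& matcherType,
                                             Ptr<FeatureDetector>& featureDetector,
                                             Ptr<DescriptorExtractor>& descriptorExtractor,
                                             Ptr<DescriptorMatcher>& descriptorMatcher )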

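{
    cout << "Creating feature detector, descriptor extractor and descriptor matcher ..." << endl;
    initModule_nonfree();   // assumed: registers SIFT and SURF with the factories (OpenCV 2.4)
    featureDetector = FeatureDetector::create( detectorType );
    descriptorExtractor = DescriptorExtractor::create( descriptorType );
    descriptorMatcher = DescriptorMatcher::create( matcherType );

    // Creation succeeded only if all three factory calls returned an object.
    bool isCreated = !( featureDetector.empty() || descriptorExtractor.empty() || descriptorMatcher.empty() );
    if( !isCreated )
        cout << "Can not create feature detector, descriptor extractor or descriptor matcher of the given types." << endl;
    return isCreated;
}

bool refineMatchesWithHomography(const std::vector<cv::KeyPoint>& queryKeypoints,
                                 const std::vector<cv::KeyPoint>& trainKeypoints,
                                 float reprojectionThreshold,
                                 std::vector<cv::DMatch>& matches,
                                 cv::Mat& homography )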

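{
    const size_t minNumberMatchesAllowed = 4;
    if (matches.size() < minNumberMatchesAllowed)
        return false;

    // Collect the coordinates of the matched keypoints for cv::findHomography.
    std::vector<cv::Point2f> queryPoints(matches.size());
    std::vector<cv::Point2f> trainPoints(matches.size());
    for (size_t i = 0; i < matches.size(); i++)
    {
        queryPoints[i] = queryKeypoints[matches[i].queryIdx].pt;
        trainPoints[i] = trainKeypoints[matches[i].trainIdx].pt;
    }

    // Estimate the homography with RANSAC; inliersMask marks the pairs that agree with it.
    std::vector<unsigned char> inliersMask(matches.size());
    homography = cv::findHomography(queryPoints, trainPoints, CV_RANSAC, reprojectionThreshold, inliersMask);

    // Keep only the inlier matches.
    std::vector<cv::DMatch> inliers;
    for (size_t i = 0; i < inliersMask.size(); i++)
    {
        if (inliersMask[i])
            inliers.push_back(matches[i]);
    }
    matches.swap(inliers);
    return matches.size() > minNumberMatchesAllowed;
}

bool matchingDescriptor(const vector<KeyPoint>& queryKeyPoints,
                        const vector<KeyPoint>& trainKeyPoints,
                        const Mat& queryDescriptors,
                        const Mat& trainDescriptors,
                        Ptr<DescriptorMatcher>& descriptorMatcher,
                        bool enableRatioTest = true)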

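{
    vector<vector<DMatch> > m_knnMatches;
    vector<DMatch> m_Matches;

    if (enableRatioTest)
    {
        cout << "KNN Matching" << endl;
        const float minRatio = 1.f / 1.5f;   // assumed value: the constant used in the original post is not recoverable
        descriptorMatcher->knnMatch(queryDescriptors, trainDescriptors, m_knnMatches, 2);
        for (size_t i = 0; i < m_knnMatches.size(); i++)
        {
            if (m_knnMatches[i].size() < 2)
                continue;
            const DMatch& bestMatch = m_knnMatches[i][0];
            const DMatch& betterMatch = m_knnMatches[i][1];
            // Ratio test: keep a match only if it is clearly closer than the runner-up.
            if (bestMatch.distance < minRatio * betterMatch.distance)
                m_Matches.push_back(bestMatch);
        }
    }
    else
    {
        // SIFT/SURF descriptors are float vectors, so use NORM_L2 (NORM_HAMMING is meant for binary descriptors).
        cv::Ptr<cv::DescriptorMatcher> bfMatcher(new cv::BFMatcher(cv::NORM_L2, true));
        bfMatcher->match(queryDescriptors, trainDescriptors, m_Matches);
    }

    Mat homo;
    float homographyReprojectionThreshold = 1.0;
    bool homographyFound = refineMatchesWithHomography(
        queryKeyPoints, trainKeyPoints, homographyReprojectionThreshold, m_Matches, homo);
    if (!homographyFound)
        return false;

    if (m_Matches.size() > 10)
    {
        // Map the four corners of the reference image into the camera frame and draw the outline.
        std::vector<Point2f> obj_corners(4);
        obj_corners[0] = Point2f(0.f, 0.f);
        obj_corners[1] = Point2f((float)src.cols, 0.f);
        obj_corners[2] = Point2f((float)src.cols, (float)src.rows);
        obj_corners[3] = Point2f(0.f, (float)src.rows);
        std::vector<Point2f> scene_corners(4);
        perspectiveTransform(obj_corners, scene_corners, homo);
        line(frameImg, scene_corners[0], scene_corners[1], CV_RGB(255,0,0), 2);
        line(frameImg, scene_corners[1], scene_corners[2], CV_RGB(255,0,0), 2);
        line(frameImg, scene_corners[2], scene_corners[3], CV_RGB(255,0,0), 2);
        line(frameImg, scene_corners[3], scene_corners[0], CV_RGB(255,0,0), 2);
    }
    return true;
}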

int main()
{
    string filename = "box.png";
    src = imread(filename, 0);   // load the reference image as grayscale
    if (src.empty())
    {
        cout << "Can't read " << filename << endl;
        return -1;
    }
    width = src.cols;
    height = src.rows;

    string detectorType = "SIFT";
    string descriptorType = "SIFT";
    string matcherType = "FlannBased";
    Ptr<FeatureDetector> featureDetector;
    Ptr<DescriptorExtractor> descriptorExtractor;
    Ptr<DescriptorMatcher> descriptorMatcher;
    if( !createDetectorDescriptorMatcher( detectorType, descriptorType, matcherType,
                                          featureDetector, descriptorExtractor, descriptorMatcher ) )
    {
        cout << "Create Detector Descriptor Matcher False!" << endl;
        return -1;
    }

    // Keypoints and descriptors of the reference image are computed once, up front.
    vector<KeyPoint> queryKeypoints;
    Mat queryDescriptor;
    featureDetector->detect(src, queryKeypoints);
    descriptorExtractor->compute(src, queryKeypoints, queryDescriptor);

    VideoCapture cap(0);   // open the default camera
    if (!cap.isOpened())   // check if we succeeded
    {
        cout << "Can't Open Camera!" << endl;
        return -1;
    }
    cap.set(CV_CAP_PROP_FRAME_WIDTH, 320);
    cap.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

    vector<KeyPoint> trainKeypoints;
    Mat trainDescriptor;
    Mat frame, grayFrame;
    char key = 0;
    // frame = imread("box_in_scene.png");
    while (key != 27)   // loop until Esc is pressed
    {
        cap >> frame;
        if (!frame.empty())
        {
            frame.copyTo(frameImg);
            printf("%d,%d\n", frame.depth(), frame.channels());
            cvtColor(frame, grayFrame, CV_BGR2GRAY);   // cvtColor allocates grayFrame as needed
            trainKeypoints.clear();
            trainDescriptor.release();
            featureDetector->detect(grayFrame, trainKeypoints);
            if (trainKeypoints.size() != 0)
            {
                descriptorExtractor->compute(grayFrame, trainKeypoints, trainDescriptor);
                bool isFound = matchingDescriptor(queryKeypoints, trainKeypoints,
                                                  queryDescriptor, trainDescriptor, descriptorMatcher);
                imshow("foundImg", frameImg);
            }
        }
        key = waitKey(1);
    }
    cap.release();
    return 0;
}
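The ratio test that matchingDescriptor applies to the KNN results is easy to look at on its own. The sketch below is a minimal version that does not depend on OpenCV: Match is a hypothetical stand-in for cv::DMatch, and ratioTest and maxRatio are names made up for illustration, not part of any library.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch so the sketch compiles without OpenCV.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// For each query descriptor, knnMatches[i] holds its candidates sorted by
// increasing distance. The best candidate is kept only when it is clearly
// closer than the runner-up: best.distance < maxRatio * second.distance.
std::vector<Match> ratioTest(const std::vector<std::vector<Match> >& knnMatches,
                             float maxRatio)
{
    std::vector<Match> kept;
    for (std::size_t i = 0; i < knnMatches.size(); ++i) {
        if (knnMatches[i].size() < 2)
            continue;  // no runner-up to compare against
        const Match& best = knnMatches[i][0];
        const Match& second = knnMatches[i][1];
        if (best.distance < maxRatio * second.distance)
            kept.push_back(best);
    }
    return kept;
}
```

Writing the test as a multiplication rather than a division avoids dividing by zero when the runner-up happens to have distance 0. A smaller maxRatio rejects more ambiguous matches but also throws away more correct ones.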

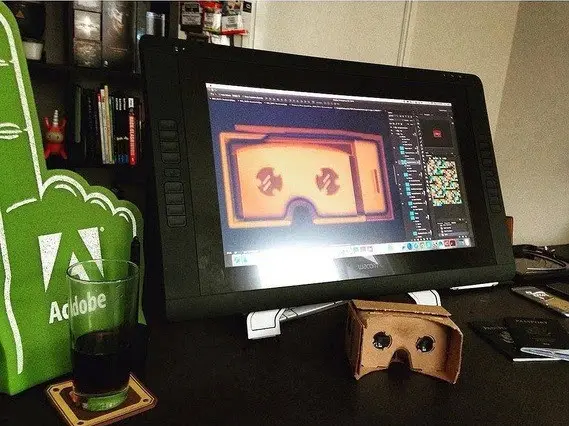

2、运行结果

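findHomography

ransacReprojThreshold: the largest reprojection error at which a point pair still counts as an inlier (used by the RANSAC method only). If ‖dstPoints_i − convertPointsHomogeneous(H · srcPoints_i)‖ is greater than this threshold, pair i is treated as an outlier; otherwise it is an inlier. When srcPoints and dstPoints are measured in pixels, this parameter is usually set somewhere in the range 1 to 10.

mask: optional output mask filled in by a robust method (CV_RANSAC or CV_LMEDS); non-zero entries mark the inlier pairs. Any values the mask holds on input are ignored.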
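To make the role of the threshold and the mask concrete, here is a small sketch of the inlier test for a homography that is already known. It is plain C++ and only illustrates the rule described above, not how OpenCV implements it: Pt, project and inlierMask are hypothetical names, and the matrix is assumed to be stored row by row in a 9-element array.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };   // hypothetical stand-in for cv::Point2f

// Map p through the row-major 3x3 matrix H and divide by the third
// homogeneous coordinate. Assumes that coordinate is non-zero.
Pt project(const double H[9], Pt p)
{
    double w = H[6] * p.x + H[7] * p.y + H[8];
    Pt q = { (H[0] * p.x + H[1] * p.y + H[2]) / w,
             (H[3] * p.x + H[4] * p.y + H[5]) / w };
    return q;
}

// mask[i] is 1 when src[i] lands within `threshold` of dst[i] after
// projection (an inlier), and 0 otherwise (an outlier).
std::vector<unsigned char> inlierMask(const double H[9],
                                      const std::vector<Pt>& src,
                                      const std::vector<Pt>& dst,
                                      double threshold)
{
    std::vector<unsigned char> mask(src.size(), 0);
    for (std::size_t i = 0; i < src.size() && i < dst.size(); ++i) {
        Pt q = project(H, src[i]);
        double dx = q.x - dst[i].x;
        double dy = q.y - dst[i].y;
        double err = std::sqrt(dx * dx + dy * dy);   // reprojection error
        mask[i] = (err <= threshold) ? 1 : 0;
    }
    return mask;
}
```

With pixel coordinates, a threshold of 1.0 (the value the listing passes as homographyReprojectionThreshold) is strict: a pair is kept only if it reprojects to within one pixel.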

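The function finds and returns the homography matrix H between the source plane and the destination plane, so that the back-projection error

$$\sum_i \left( x'_i - \frac{h_{11} x_i + h_{12} y_i + h_{13}}{h_{31} x_i + h_{32} y_i + h_{33}} \right)^2 + \left( y'_i - \frac{h_{21} x_i + h_{22} y_i + h_{23}}{h_{31} x_i + h_{32} y_i + h_{33}} \right)^2$$

is minimized, where $(x_i, y_i)$ are the source points and $(x'_i, y'_i)$ the corresponding destination points.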

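If the method parameter is set to 0, the function uses all the point pairs and a simple least-squares scheme to compute an initial homography estimate. However, if not all of the point pairs fit the perspective transformation (that is, some of them are outliers), this initial estimate will be poor. In that case you can use one of the two robust methods. Both RANSAC and LMeDS try many different random subsets of the point pairs (four pairs each), estimate the homography from each subset with a simple least-squares algorithm, and then score the quality of the result: the number of inliers for RANSAC, or the median reprojection error for LMeDS. The best subset is then used to produce the initial homography estimate and the inlier/outlier mask.

RANSAC can cope with almost any proportion of outliers, but it needs a threshold to tell inliers from outliers. LMeDS needs no threshold, but it only works correctly when more than 50% of the pairs are inliers. Finally, if there are no outliers and the noise is very small, the default method is enough.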
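The difference between the two scores fits in a few lines. The sketch below takes the reprojection errors of one candidate homography and computes both quality measures. It is only an illustration in plain C++: countInliers and medianResidual are hypothetical helper names, and the random sampling and model fitting around them are left out.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// RANSAC-style score: how many residuals fall within the threshold.
// Higher is better, and the result depends on the chosen threshold.
std::size_t countInliers(const std::vector<double>& residuals, double threshold)
{
    std::size_t n = 0;
    for (std::size_t i = 0; i < residuals.size(); ++i)
        if (residuals[i] <= threshold)
            ++n;
    return n;
}

// LMedS-style score: the median residual. Lower is better, and no threshold
// is needed. The vector is taken by value because nth_element reorders it.
// For an even number of residuals this returns the upper of the two middle
// values.
double medianResidual(std::vector<double> residuals)
{
    if (residuals.empty())
        return 0.0;
    std::size_t mid = residuals.size() / 2;
    std::nth_element(residuals.begin(), residuals.begin() + mid, residuals.end());
    return residuals[mid];
}
```

The median also shows where the 50% limit comes from: once more than half of the residuals belong to outliers, the median itself is an outlier residual, so even the correct model gets a bad score.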

perspectiveTransform

Purpose: applies a perspective transformation to an array of vectors.

Signature:

void perspectiveTransform(InputArray src, OutputArray dst, InputArray m)

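src: input two-channel or three-channel floating-point array; each element is a 2D/3D vector to be transformed.

dst: output array with the same size and type as src.

m: 3x3 or 4x4 floating-point transformation matrix.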

The transformation is: