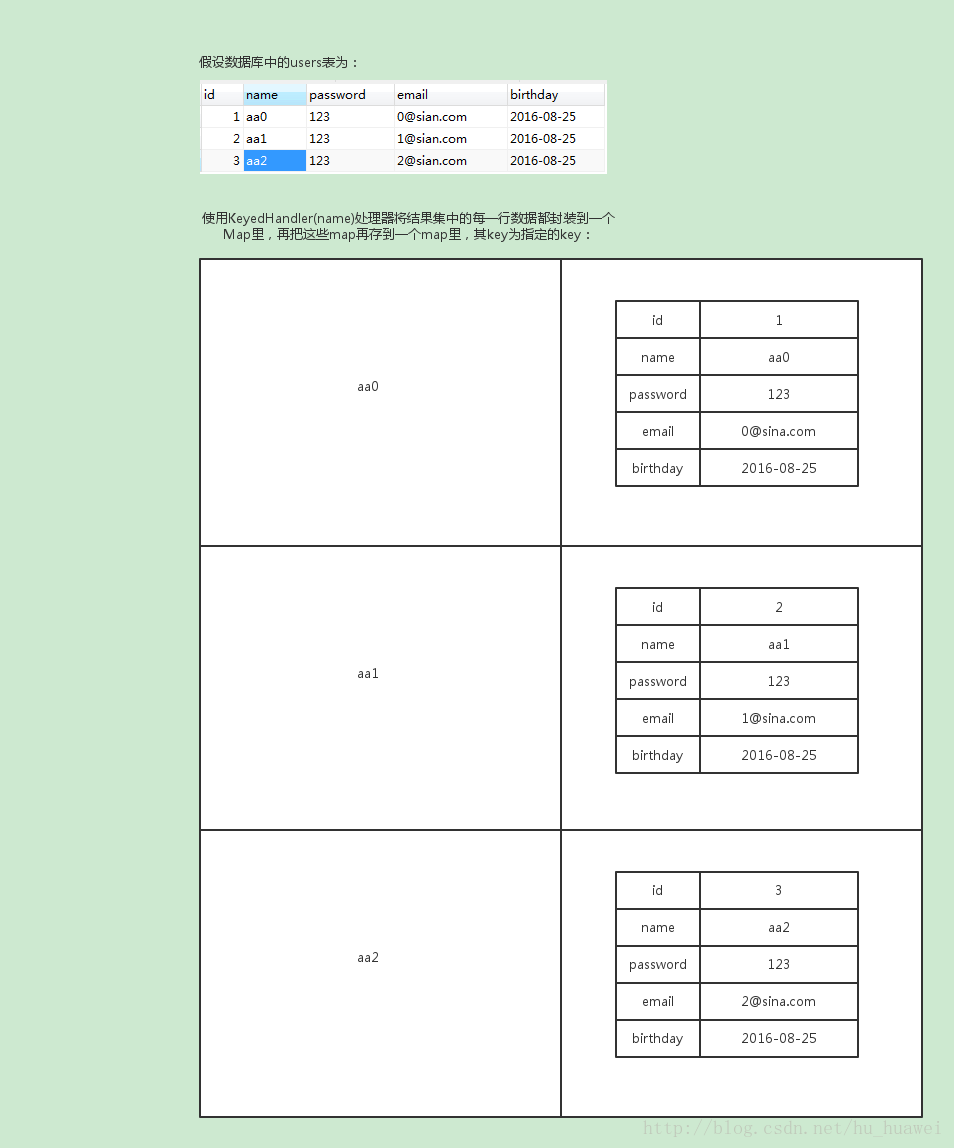

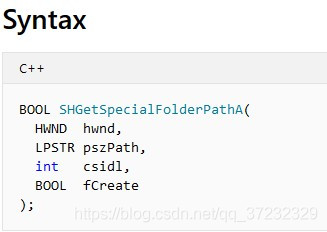

一、Sqoop概述1&#xff09;官网http://sqoop.apache.org/2&#xff09;场景传统型缺点&#xff0c;分布式存储。把传统型数据库数据迁移。Apache Sqoop&#xff08;TM&#xff09;是一种用于在Apache Hadoop和结构化数据存储&#xff08;如关系数据库&#xff09;之间高效传输批量数据的工具。二、Sqoop安装部署1&#xff09;下载安装包2&#xff09;解压tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz3)修改配置 mv sqoop-1.4.7.bin__hadoop-2.6.0 sqoopcd sqoop/confmv sqoop-env-template.sh sqoop-env.shvi sqoop-env.shexport HADOOP_COMMON_HOME&#61;/root/hd/hadoop-2.8.4export HADOOP_MAPRED_HOME&#61;/root/hd/hadoop-2.8.4export HIVE_HOME&#61;/root/hd/hiveexport ZOOCFGDIR&#61;/root/hd/zookeeper-3.4.10/conf4&#xff09;发送mysql驱动到sqoop/lib下5&#xff09;检测是否安装成功bin/sqoop help三、Sqoop的import命令执行mysql -uroot -proot进入mysql命令行准备数据&#xff1a;create database sq;use sq;create table user(id int primary key auto_increment,name varchar(20),addr varcher(200));insert into user(id,name,addr) values(1,"zhangsan","beijing");insert into user(id,name,addr) values(2,"lisi","shanghai");select * from user;create table user1(id int primary key auto_increment,name varchar(20));insert into user1(id,name) values(1,"haha");insert into user1(id,name) values(2,"hongqigong");select * from user1;1&#xff09;数据从mysql中导入到hdfs当中bin/sqoop import --connect jdbc:mysql://hd09-1:3306/sq --username root --password root --table user --target-dir /sqoop/datas--delete-target-dir --num-mappers 1 --fields-terminated-by "\t"2&#xff09;数据mysql中导入到hdfs当中进行筛选bin/sqoop import --connect jdbc:mysql://hd09-1:3306/sq --username root --password root --target-dir /sqoop/selectdemo --delete-target-dir--num-mappers 1 --fields-terminated-by "\t" --query &#39;select * from user where id<&#61;1 and $CONDITIONS&#39;3&#xff09;通过where筛选bin/sqoop import --connect jdbc:mysql://hd09-1:3306/sq --username root --password root --target-dir /sqoop/selectdemo2 --delete-target-dir--num-mappers 1 --fields-terminated-by "\t" --table user --where "id<&#61;1"4&#xff09;mysql导入到hive需要先创建hive表&#xff1a; create table user_sqoop(id int,name string) row format delimited fields terminated by &#39;\t&#39;;bin/sqoop import --connect jdbc:mysql://hd09-1:3306/sq --username root --password root --table user1 --num-mappers 1--hive-import --fields-terminated-by "\t" --hive-overwrite --hive-table user_sqoop四、问题&#xff1a;hiveconf解决&#xff1a;vi ~/.bash_profileexport HADOOP_CLASSPATH&#61;$HADOOP_CLASSPATH:/root/hd/hive/lib/*

mysql权限问题&#xff1a;grant all privileges on *.* to root&#64;&#39;%&#39; identified by "root";flush privileges;五、Sqoop的export命令需求&#xff1a;Hive/hdfs的数据导出到mysql1&#xff09;根据hive中的字段创建mysql表create table user1(id int primary key auto_increment,name varchar(20));2&#xff09;编写sqoop启动命令bin/sqoop export --connect jdbc:mysql://hd09-1:3306/sq --username root --password root --table user1 --num-mappers 1 --export-dir /user/hive/warehouse/user_sqoop --input-fields-terminated-by "\t"3)mysql中查看数据是否导入select * from user1;六、Sqoop打包脚本的使用1&#xff09;创建文件夹mkdir sqoopjob2&#xff09;创建文件脚本vi job_hdfs2mysql.optexport--connectjdbc:mysql://hd09-1:3306/sq--usernameroot--passwordroot--tableuser1--num-mappers1--export-dir/user/hive/warehouse/user_sqoop--input-fields-terminated-by"\t"注意&#xff1a;一行命令 一行值3&#xff09;执行脚本文件bin/sqoop --options-file /root/sqoopjob/job_hdfs2mysql.opt七、sqoop常用命令命令 说明import 将数据导入到集群export 将集群数据导出codegen 将某数据库中表生成javaBean并打包为jareval 查看sql执行结果createhivetable 创建hive表importalltables 导入某个数据库中所有表到hdfs中listtables 列出某个数据库下的所有表merge 将hdfs中不同目录下的数据合并在一起version V 查看sqoop版本help 查看帮助信息八、sqoop常用参数参数 说明–connect 连接关系型数据库URL–connectionmanager 指定连接管理类–driver JDBC的driver class–username 连接数据库的用户名–password 连接数据库的密码–verbose 在控制台中打印详细信息–help 查看帮助–hiveimport 将关系型数据库导入到hive表中–hiveoverwrite 覆盖掉hive表中已存在的数据–createhivetable 创建hive表–hivetable 接入hive表–table 指定关系型数据库的表名

京公网安备 11010802041100号

京公网安备 11010802041100号