Tags: python · original · credit the source when reposting · code is for teaching demonstration only · do not use it maliciously

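Scrapy is a powerful Python web-crawling framework, well suited to building complete crawler projects.

Scrapy supports CSS and XPath selectors, which make it easy to locate and select nodes and thus extract structured data.

Scrapy also sends requests asynchronously and supports advanced features such as proxies and cookie management. BeautifulSoup, another well-known package, is only an HTML/XML parser and has to be paired with a separate HTTP client, so Scrapy offers a higher ceiling and better crawling throughput.

For ordinary data-extraction needs, it is usually enough to define your own spider by subclassing the Spider class.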

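This article walks through scraping website reviews with Scrapy. To learn more about Scrapy's other features, read the official tutorial (https://docs.scrapy.org/en/latest/intro/tutorial.html) and the related documentation.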

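Install from the command line (the spider below also uses BeautifulSoup, so install it as well):

```
pip install scrapy beautifulsoup4
```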

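Target site: myanimelist.net

Task: scrape users' ratings and reviews

Fields to extract: username, review submission time, watching progress, overall rating, category ratings, review text

Example page: Shakugan no Shana review page (https://myanimelist.net/anime/355/Shakugan_no_Shana/reviews)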

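Steps:

- Get familiar with the Scrapy shell

```
scrapy shell
# then, inside the Scrapy shell:
fetch("https://myanimelist.net/anime/355/Shakugan_no_Shana/reviews")
view(response)  # open the downloaded response in the default browser
```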

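Press F12 to open the browser's developer tools and get an overview of the page structure.

Right-click an element and choose Inspect Element to analyze the structure of the data you want to extract.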

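Notice that each user review sits inside a `<div>` tag and can be filtered by `class="borderDark"`. Of the fields to extract, the username and submission time are easy to spot and can be extracted from the `<td>` tags. One difficulty is the review text: the site truncates long reviews, and the full text only appears after clicking "read more".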


Since this involves iterating over a review's child strings, working with Scrapy's `SelectorList` directly is rather clumsy, so we use BeautifulSoup instead:

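```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text, "html.parser")
# `class` is a Python keyword, so BeautifulSoup takes the `class_` argument instead
reviews = soup.find_all("div", class_="borderDark")
# Look at the text inside the first review's body
reviews[0].text
```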


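As you can see, the other fields we want are in there as well. So once the generator returned by `strings` or `stripped_strings` is turned into a list, every field can be extracted uniformly by its position in that list.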

```python
list(reviews[0].stripped_strings)
```


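For example, the submission time is item 1, the watching progress item 2, the username item 5, and so on (indices 0, 1 and 4 with Python's 0-based indexing). Now we can set up the actual project.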
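Since the text counts positions from 1 while Python indexes from 0, a small lookup table can keep the two straight and avoid an `IndexError` on a review with an unexpected layout. A minimal sketch: `FIELD_POSITIONS`, `pick_fields` and the sample list are hypothetical, with the positions taken from the paragraph above (the spider below hard-codes the same offsets as `extct[0]`, `extct[1]` and `extct[4]`).

```python
# 1-based positions as described in the text: time = item 1, progress = item 2, username = item 5
FIELD_POSITIONS = {"submission": 1, "progress": 2, "username": 5}


def pick_fields(strings, positions=FIELD_POSITIONS):
    """Map 1-based positions in a stripped-strings list to named fields.

    A field whose position lies beyond the end of the list becomes None
    instead of raising IndexError.
    """
    fields = {}
    for name, position in positions.items():
        index = position - 1  # convert to Python's 0-based indexing
        fields[name] = strings[index] if index < len(strings) else None
    return fields


# Hypothetical stripped-strings list of one review (placeholder values, not real data)
sample = ["<time>", "<progress>", "<x>", "<y>", "<username>"]
print(pick_fields(sample))
# {'submission': '<time>', 'progress': '<progress>', 'username': '<username>'}
print(pick_fields(sample[:2]))
# {'submission': '<time>', 'progress': '<progress>', 'username': None}
```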

- Initialize the project

```
# choose your own working directory
scrapy startproject MyanimelistSpider
```

- Create the spider script

```
cd MyanimelistSpider
# genspider takes the spider name and the domain it will crawl
scrapy genspider myanimelist myanimelist.net
```

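- Define the Item class

```python
# items.py
from scrapy.item import Field, Item


class MyanimelistReview(Item):
    username = Field()          # reviewer's user name
    submission = Field()        # review submission time
    progress = Field()          # watching progress
    rating_overall = Field()    # overall rating
    rating_story = Field()      # category ratings
    rating_animation = Field()
    rating_sound = Field()
    rating_character = Field()
    rating_enjoyment = Field()
    helpful = Field()           # "helpful" information shown with the review
    review = Field()            # full review text
```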
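Besides `scrapy.Item`, Scrapy 2.2 and later also accept plain dataclass instances as items, which adds type hints and default values. Below is a sketch of an equivalent definition, assuming such a Scrapy version; the class name `MyanimelistReviewData` is hypothetical, and the spider below keeps using the `Item` class above.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class MyanimelistReviewData:
    """Same fields as MyanimelistReview; every field defaults to None."""
    username: Optional[str] = None
    submission: Optional[str] = None
    progress: Optional[str] = None
    rating_overall: Optional[str] = None
    rating_story: Optional[str] = None
    rating_animation: Optional[str] = None
    rating_sound: Optional[str] = None
    rating_character: Optional[str] = None
    rating_enjoyment: Optional[str] = None
    helpful: Optional[str] = None
    review: Optional[str] = None


# Values are strings because they come straight from the page text
item = MyanimelistReviewData(username="someone", rating_overall="8")
print(asdict(item)["rating_overall"])  # 8
```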

- Define the Spider class

```python
# spiders/myanimelist.py
import scrapy
from bs4 import BeautifulSoup

# The package name comes from `scrapy startproject MyanimelistSpider`
from MyanimelistSpider.items import MyanimelistReview


class MyanimelistSpider(scrapy.Spider):
    name = "myanimelist"

    def start_requests(self):
        # A URL passed with `-a url=...` becomes self.url; otherwise use the example page
        url = getattr(self, "url", "https://myanimelist.net/anime/355/Shakugan_no_Shana/reviews")
        yield scrapy.Request(url, callback=self.get_review)

    def get_review(self, response):
        soup = BeautifulSoup(response.text, "html.parser")
        reviews = soup.find_all("div", class_="borderDark")
        for rev in reviews:
            # All non-empty text fragments of one review, in document order
            extct = list(rev.stripped_strings)
            # If the review contains an <i> element, one extra string precedes the ratings
            rating_startat = 12 if rev.find("i") else 11
            # 6 rating values alternating with the 5 labels between them = 11 strings
            rating_endat = rating_startat + 11
            (rating_overall, rating_story, rating_animation,
             rating_sound, rating_character, rating_enjoyment) = extct[rating_startat:rating_endat:2]
            # The review text follows the ratings; the last 5 strings are not part of it
            review = "\n".join(extct[rating_endat:-5])
            yield MyanimelistReview(
                submission=extct[0],
                progress=extct[1],
                username=extct[4],
                helpful=extct[8],
                rating_overall=rating_overall,
                rating_story=rating_story,
                rating_animation=rating_animation,
                rating_sound=rating_sound,
                rating_character=rating_character,
                rating_enjoyment=rating_enjoyment,
                review=review,
            )
        # Follow the last pagination link, but only while pages still contain reviews
        next_page = response.css("div.ml4 a[href*='reviews?p=']")
        if reviews and next_page:
            yield response.follow(next_page[-1], callback=self.get_review)
```
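The rating slice `extct[rating_startat:rating_endat:2]` relies on the six rating values alternating with their labels. The sketch below replays that step on a hypothetical list (labels and numbers are placeholders inferred from the slice, not scraped data); `split_ratings` is a hypothetical helper that also reports a clear error when a review's layout doesn't match.

```python
RATING_FIELDS = ("overall", "story", "animation", "sound", "character", "enjoyment")


def split_ratings(strings, start):
    """Pick six rating values that alternate with their labels, starting at index `start`.

    Six values plus the five labels between them span 11 strings, so a slice with
    step 2 lands on the values only. Everything after that block is returned as the rest.
    """
    end = start + 11
    values = strings[start:end:2]
    if len(values) != len(RATING_FIELDS):
        raise ValueError(f"expected 6 ratings, found {len(values)}")
    return dict(zip(RATING_FIELDS, values)), strings[end:]


# Hypothetical layout: label, value, label, value, ... followed by the review body
strings = ["Overall Rating", "9", "Story", "8", "Animation", "7",
           "Sound", "8", "Character", "9", "Enjoyment", "10", "Review body"]
ratings, rest = split_ratings(strings, start=1)
print(ratings)
# {'overall': '9', 'story': '8', 'animation': '7', 'sound': '8', 'character': '9', 'enjoyment': '10'}
print(rest)
# ['Review body']
```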

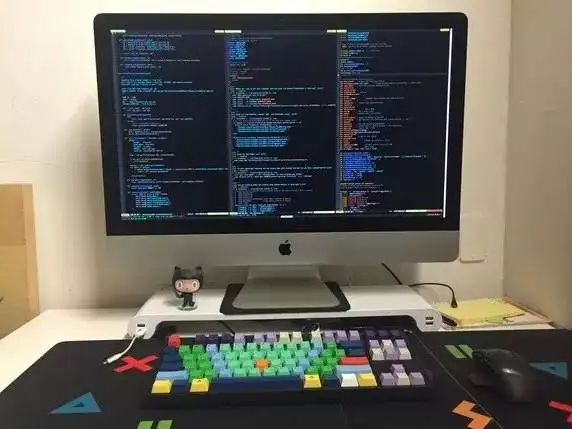
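Following `next_page[-1]` assumes the next-page link is always the last pagination link. A more explicit alternative, sketched here under the assumption that the page number lives in a `p` query parameter (as the `reviews?p=` selector suggests), is to pick the link whose page number is one higher than the current one; `page_number` and `pick_next_page` are hypothetical helpers.

```python
from urllib.parse import parse_qs, urljoin, urlparse


def page_number(url):
    """Return the integer `p` query parameter of `url`, or 1 when it is absent."""
    values = parse_qs(urlparse(url).query).get("p")
    return int(values[0]) if values else 1


def pick_next_page(current_url, hrefs):
    """Return the absolute URL among `hrefs` whose page number is current + 1, else None."""
    wanted = page_number(current_url) + 1
    for href in hrefs:
        absolute = urljoin(current_url, href)  # resolves relative links such as "reviews?p=2"
        if page_number(absolute) == wanted:
            return absolute
    return None
```

In `get_review`, the hrefs could come from `response.css("div.ml4 a[href*='reviews?p=']::attr(href)").getall()`, and the result of `pick_next_page(response.url, hrefs)` passed to `response.follow` when it is not None.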

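- Run the spider

```
scrapy crawl myanimelist -a url="https://myanimelist.net/anime/355/Shakugan_no_Shana/reviews" -o shakugan_no_shana_reviews.json
```

`-a url=...` sets the spider's `url` attribute, i.e. the review page to start from. Note that `-o` appends to an existing file, which leaves a JSON file invalid on a re-run; Scrapy 2.4 and later provide `-O` to overwrite the file instead.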

- Final result: the JSON output, converted into an Excel spreadsheet.
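The JSON-to-Excel conversion itself isn't shown. One stdlib-only possibility, an assumption rather than necessarily how the author did it, is to write a CSV file with a UTF-8 byte-order mark, which Excel uses to detect the encoding. `json_to_csv` is a hypothetical helper; it expects the JSON array of flat objects that Scrapy's `-o file.json` produces.

```python
import csv
import json


def json_to_csv(json_path, csv_path):
    """Convert a JSON array of flat objects into a CSV file and return the row count.

    Columns follow the order in which keys first appear; missing values become "".
    The file is written as UTF-8 with a byte-order mark ("utf-8-sig") so Excel detects the encoding.
    """
    with open(json_path, encoding="utf-8") as f:
        rows = json.load(f)
    columns = []
    for row in rows:
        for key in row:
            if key not in columns:
                columns.append(key)
    with open(csv_path, "w", encoding="utf-8-sig", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=columns)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

Usage: `json_to_csv("shakugan_no_shana_reviews.json", "shakugan_no_shana_reviews.csv")`. Alternatively, Scrapy's feed exports can write CSV directly, e.g. `-o shakugan_no_shana_reviews.csv`.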

That completes the data extraction; from here you can analyze the data however your needs require.


京公网安备 11010802041100号 | 京ICP备19059560号-4 | PHP1.CN 第一PHP社区 版权所有
