| Node | IP address | Hostname |
|---|---|---|
| node00 | 192.168.247.144 | node00 |
| node01 | 192.168.247.135 | node01 |
| node02 | 192.168.247.143 | node02 |

VMware did not assign consecutive IP addresses to the nodes. That does not matter, so carry on.

hostnamectl set-hostname node00

hostnamectl set-hostname node01

hostnamectl set-hostname node02

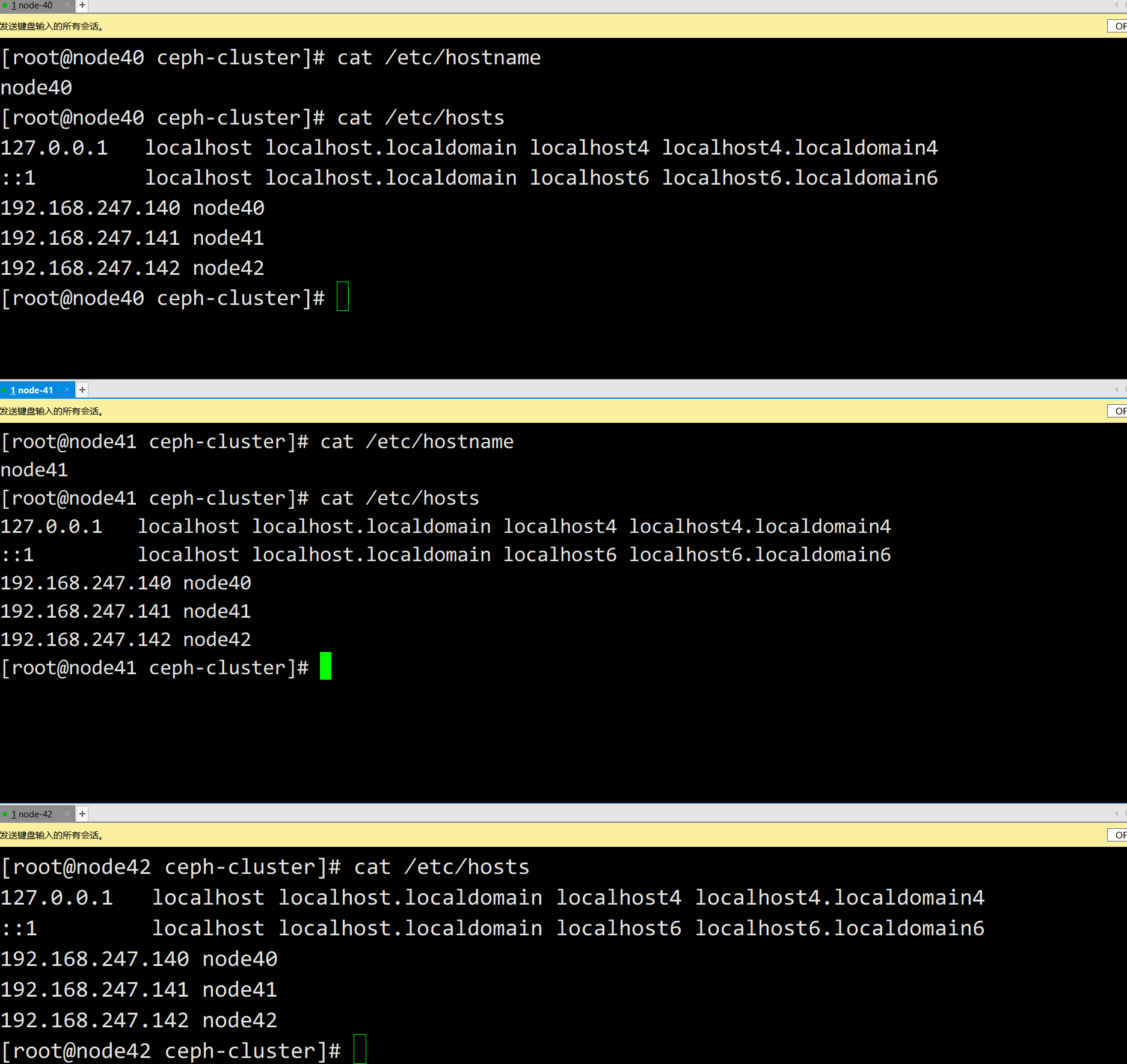

[root@linux30 ~]# vi /etc/hosts

192.168.247.144 node00

192.168.247.135 node01

192.168.247.143 node02
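
The same three entries have to exist on every node, so it can help to add them with a small helper instead of editing by hand. The following is only a sketch: the function name `add_host_entry` is hypothetical, and it appends a line only when that exact `IP name` line is not already in the file.

```shell
# Append "IP name" to a hosts-format file only when that exact line is missing.
add_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    touch "$hosts_file"
    # -x matches the whole line, -F treats the text as a fixed string.
    if ! grep -qxF -- "$ip $name" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}
```

Run as root, `add_host_entry /etc/hosts 192.168.247.144 node00` (and likewise for node01 and node02) would leave the file unchanged on a second run.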

useradd -d /home/ceph_user -m ceph_user

passwd ceph_user

# Grant root privileges through sudo

echo "ceph_user ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/ceph_user

sudo chmod 0440 /etc/sudoers.d/ceph_user

ssh-copy-id ceph_user@node00

ssh-copy-id ceph_user@node01

ssh-copy-id ceph_user@node02

su root

vim /root/.ssh/config

Copy in the following content:

Host node00

Hostname node00

User ceph_user

Host node01

Hostname node01

User ceph_user

Host node02

Hostname node02

User ceph_user

Remember to restrict the file permissions. The wide-open 777 mode must not be used:

chmod 600 ~/.ssh/config
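
The three stanzas above follow one pattern, so they can also be generated. This is a minimal sketch, assuming the same login user on every node; `gen_ssh_config` is a hypothetical helper name, not part of OpenSSH.

```shell
# Print one ssh_config stanza per node; the login user is the first argument.
gen_ssh_config() {
    ssh_user="$1"
    shift
    for node in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$node" "$node" "$ssh_user"
    done
}

# Preview the stanzas for the three nodes used in this walkthrough.
gen_ssh_config ceph_user node00 node01 node02
```

Redirecting the output with `>> ~/.ssh/config` and then running `chmod 600 ~/.ssh/config` gives the same result as the manual edit.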

# Install the NTP packages

yum install ntp ntpdate ntp-doc -y

# Make sure the time zone is correct, then enable ntpd at boot:

systemctl enable ntpd

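# Also synchronize the clock at every boot by appending the following to /etc/rc.d/rc.local (on CentOS 7 the file must be executable: chmod +x /etc/rc.d/rc.local):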

echo "/usr/sbin/ntpdate ntp1.aliyun.com > /dev/null 2>&1; /sbin/hwclock -w" >> /etc/rc.d/rc.local

# Configure an hourly cron job: run crontab -e and add the following line

0 */1 * * * /usr/sbin/ntpdate ntp1.aliyun.com > /dev/null 2>&1; /sbin/hwclock -w
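
For scripted setups, the hourly entry can be merged into the existing crontab without opening an editor. The sketch below is one possible approach, not the author's method: `merge_cron_line` and `NTP_CRON_ENTRY` are hypothetical names, and the filter only adds the line when it is not already present.

```shell
# Hourly NTP sync entry, matching the cron line described in the text.
NTP_CRON_ENTRY='0 */1 * * * /usr/sbin/ntpdate ntp1.aliyun.com > /dev/null 2>&1; /sbin/hwclock -w'

# Read an existing crontab on stdin and print it with the entry appended,
# unless an identical line is already there.
merge_cron_line() {
    entry="$1"
    existing=$(cat)
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    if ! printf '%s\n' "$existing" | grep -qxF -- "$entry"; then
        printf '%s\n' "$entry"
    fi
}
```

A possible invocation is `crontab -l 2>/dev/null | merge_cron_line "$NTP_CRON_ENTRY" | crontab -`, which is safe to repeat on every node.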

rpm -ivh https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/ceph-release-1-1.el7.noarch.rpm

yum install epel-release

yum install ceph-deploy python-setuptools python2-subprocess32

Then configure a faster mirror as the package source:

echo '

[Ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/x86_64/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[Ceph-noarch]

name=Ceph noarch packages

# Official repository

#baseurl=http://download.ceph.com/rpm-mimic/el7/noarch

# Tsinghua (TUNA) mirror

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/noarch/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/SRPMS/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc' > /etc/yum.repos.d/ceph.repo

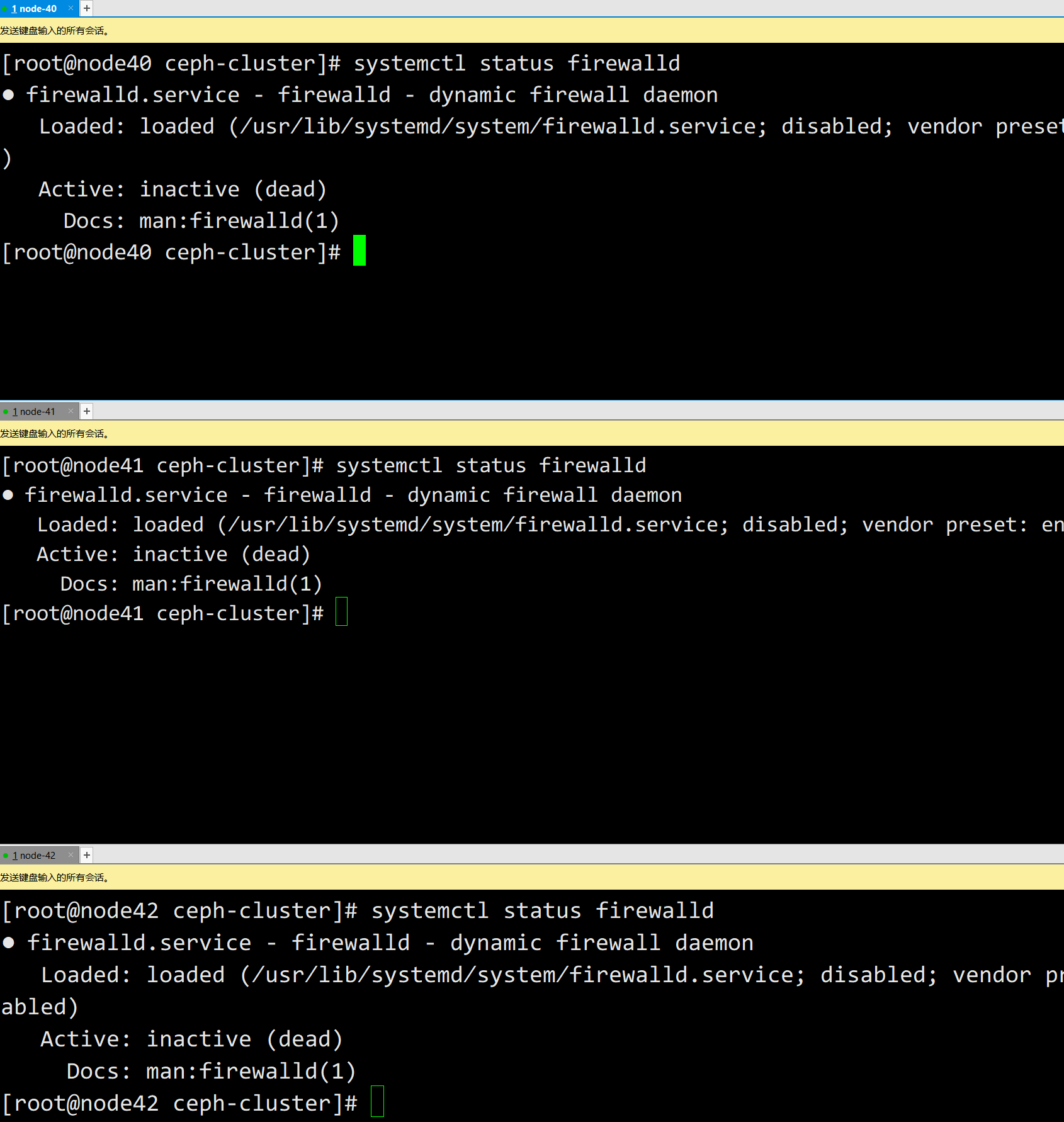

systemctl stop firewalld.service

systemctl disable firewalld.service

setenforce 0

To make this permanent, edit /etc/selinux/config and set:

SELINUX=disabled
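
The permanent SELinux change can be applied without an interactive editor. The following is a sketch under the assumption that the file already contains a `SELINUX=` line; `set_selinux_mode` is a hypothetical helper name. It rewrites only that line and leaves `SELINUXTYPE=` untouched.

```shell
# Rewrite the SELINUX= line of a config file in place.
# The file is overwritten via cat so its owner and permissions are preserved.
set_selinux_mode() {
    selinux_conf="$1"; mode="$2"
    tmp_conf=$(mktemp) || return 1
    sed "s/^SELINUX=.*/SELINUX=${mode}/" "$selinux_conf" > "$tmp_conf" &&
        cat "$tmp_conf" > "$selinux_conf"
    rc=$?
    rm -f "$tmp_conf"
    return "$rc"
}
```

On a node this would be called as `set_selinux_mode /etc/selinux/config disabled`; the new mode takes effect after a reboot.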

yum update && yum -y install ceph ceph-deploy

The installation can also be done as follows:

mkdir -p /opt/ceph/ceph-cluster && cd /opt/ceph/ceph-cluster

ceph-deploy new node00 node01 node02

This generates the configuration file.

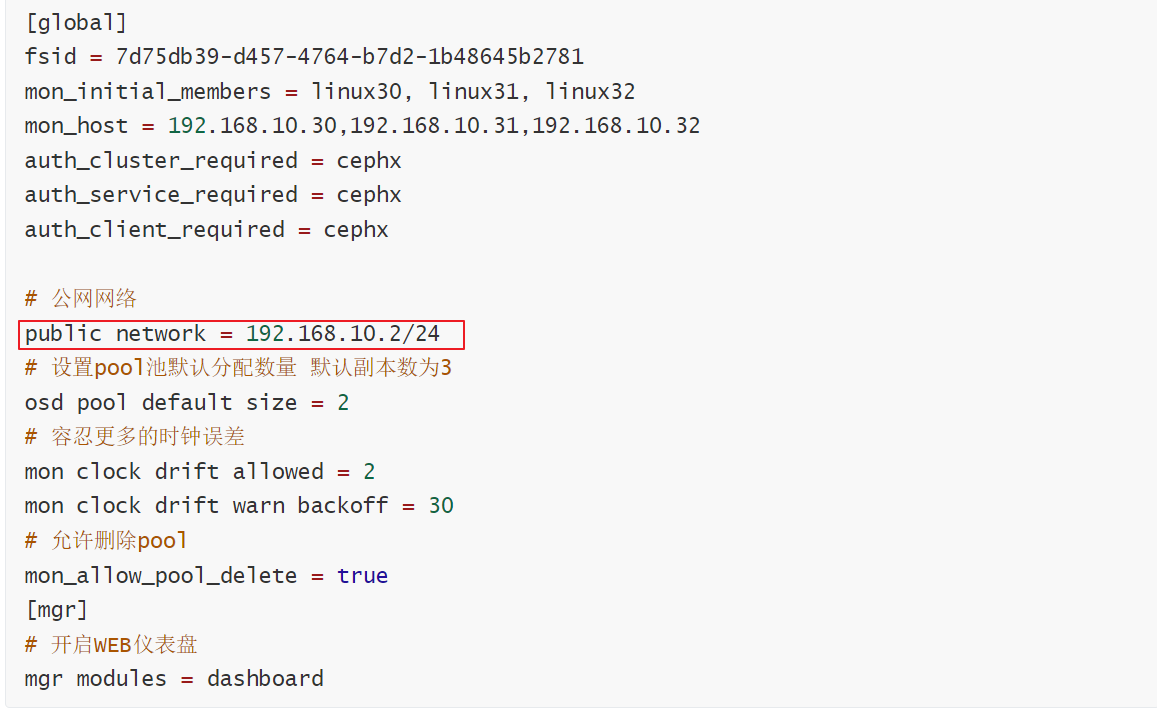

vi /opt/ceph/ceph-cluster/ceph.conf

[global]

fsid = 7d75db39-d457-4764-b7d2-1b48645b2781

mon_initial_members = node00, node01, node02

mon_host = 192.168.247.144,192.168.247.135,192.168.247.143

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

# Public network

public network = 192.168.247.0/24

# Default replica count for pools (Ceph's default is 3)

osd pool default size = 2

# Tolerate more clock drift between monitors

mon clock drift allowed = 2

mon clock drift warn backoff = 30

# Allow pools to be deleted

mon_allow_pool_delete = true

[mgr]

# Enable the web dashboard

mgr modules = dashboard

public network is the public-facing subnet of the nodes (for these virtual machines, the subnet of the vmnet8 adapter).
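
Ceph expects the public network in CIDR notation. As a small sketch, assuming a /24 subnet like the one used by these virtual machines, the network address can be derived from any node IP; `net24` is a hypothetical helper name.

```shell
# Derive the /24 network (CIDR form) that contains the given IPv4 address.
net24() {
    # ${1%.*} strips the last octet, e.g. 192.168.247.144 -> 192.168.247
    printf '%s.0/24\n' "${1%.*}"
}

net24 192.168.247.144   # prints 192.168.247.0/24
```

All three node addresses in the table map to the same subnet, which is the value the monitors need to share.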

ceph-deploy install node00 node01 node02 --no-adjust-repos

The --no-adjust-repos option speeds up the installation because ceph-deploy leaves the configured mirror repositories unchanged.

ceph-deploy mon create-initial

## ceph-deploy --overwrite-conf mon create-initial

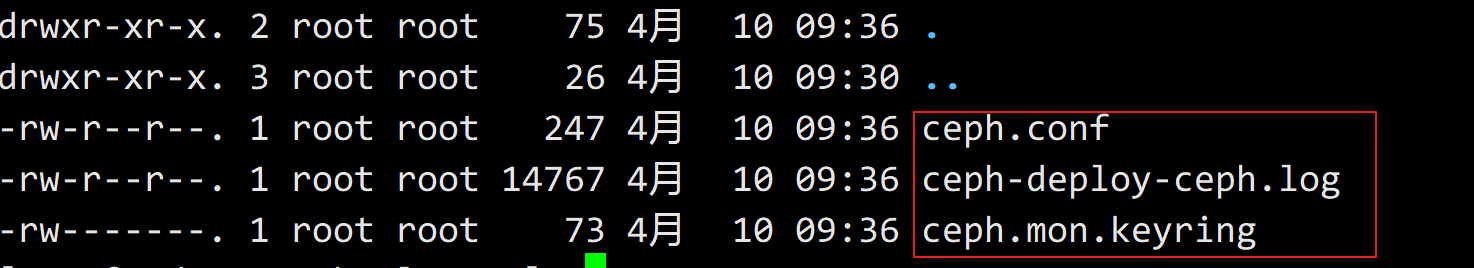

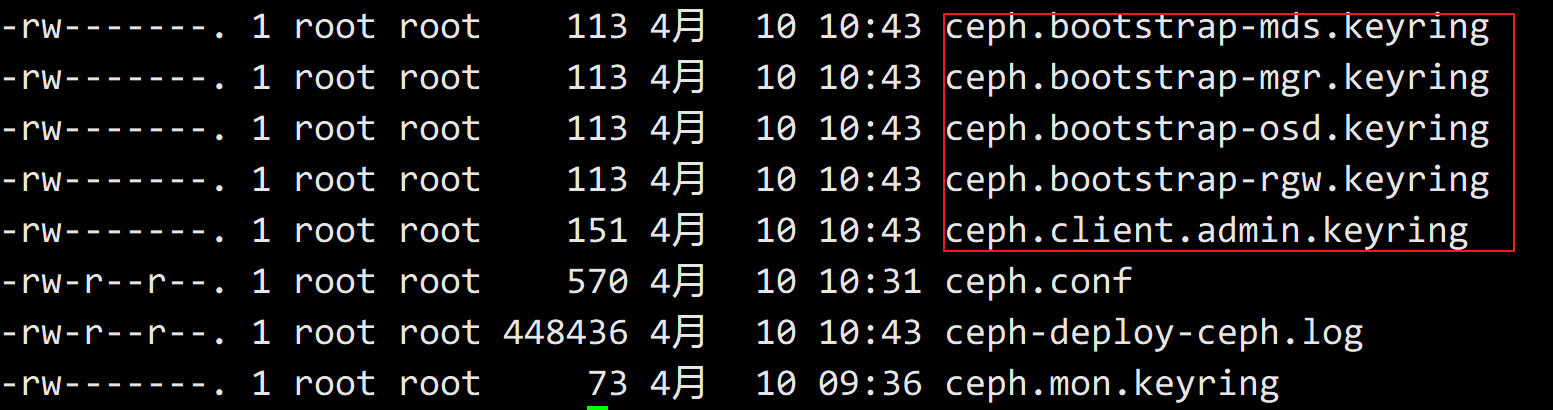

After this completes, several key files whose names end in keyring are generated in the local directory (see the screenshots).
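
A quick way to confirm the key files exist is to check the working directory for the expected names. This is a sketch only: `missing_files` is a hypothetical helper, and the list of keyring names passed to it is an assumption about what ceph-deploy typically gathers, so adjust it to what your version produces.

```shell
# Print every name from the argument list that is not a regular file in DIR.
missing_files() {
    dir="$1"
    shift
    for name in "$@"; do
        [ -f "$dir/$name" ] || printf '%s\n' "$name"
    done
}
```

For example, `missing_files /opt/ceph/ceph-cluster ceph.client.admin.keyring ceph.bootstrap-osd.keyring ceph.bootstrap-mgr.keyring` prints nothing when all three files are present.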

ceph-deploy admin node00 node01 node02

[root@node00 ceph-cluster]# ceph-deploy admin node00 node01 node02

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin node00 node01 node02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf :

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['node00', 'node01', 'node02']

[ceph_deploy.cli][INFO ] func :

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node00

[node00][DEBUG ] connected to host: node00

[node00][DEBUG ] detect platform information from remote host

[node00][DEBUG ] detect machine type

[node00][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node01

[node01][DEBUG ] connection detected need for sudo

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node02

[node02][DEBUG ] connection detected need for sudo

[node02][DEBUG ] connected to host: node02

[node02][DEBUG ] detect platform information from remote host

[node02][DEBUG ] detect machine type

[node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[root@node00 ceph-cluster]# vim /etc/ceph/

ceph.client.admin.keyring rbdmap tmphCOe3j

ceph.conf tmp4R_f2T

[root@node00 ceph-cluster]# vim /etc/ceph/ceph.conf

[root@node00 ceph-cluster]# clear

[root@node00 ceph-cluster]# ceph-deploy mgr create node00 node01 node02

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create node00 node01 node02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('node00', 'node00'), ('node01', 'node01'), ('node02', 'node02')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf :

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func :

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts node00:node00 node01:node01 node02:node02

[node00][DEBUG ] connected to host: node00

[node00][DEBUG ] detect platform information from remote host

[node00][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node00

[node00][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node00][WARNIN] mgr keyring does not exist yet, creating one

[node00][DEBUG ] create a keyring file

[node00][DEBUG ] create path recursively if it doesn't exist

[node00][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node00 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node00/keyring

[node00][INFO ] Running command: systemctl enable ceph-mgr@node00

[node00][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node00.service to /usr/lib/systemd/system/ceph-mgr@.service.

[node00][INFO ] Running command: systemctl start ceph-mgr@node00

[node00][INFO ] Running command: systemctl enable ceph.target

[node01][DEBUG ] connection detected need for sudo

[node01][DEBUG ] connected to host: node01

[node01][DEBUG ] detect platform information from remote host

[node01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node01

[node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node01][WARNIN] mgr keyring does not exist yet, creating one

[node01][DEBUG ] create a keyring file

[node01][DEBUG ] create path recursively if it doesn't exist

[node01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node01/keyring

[node01][INFO ] Running command: sudo systemctl enable ceph-mgr@node01

[node01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node01.service to /usr/lib/systemd/system/ceph-mgr@.service.

[node01][INFO ] Running command: sudo systemctl start ceph-mgr@node01

[node01][INFO ] Running command: sudo systemctl enable ceph.target

[node02][DEBUG ] connection detected need for sudo

[node02][DEBUG ] connected to host: node02

[node02][DEBUG ] detect platform information from remote host

[node02][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node02

[node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node02][WARNIN] mgr keyring does not exist yet, creating one

[node02][DEBUG ] create a keyring file

[node02][DEBUG ] create path recursively if it doesn't exist

[node02][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node02 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node02/keyring

[node02][INFO ] Running command: sudo systemctl enable ceph-mgr@node02

[node02][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node02.service to /usr/lib/systemd/system/ceph-mgr@.service.

[node02][INFO ] Running command: sudo systemctl start ceph-mgr@node02

[node02][INFO ] Running command: sudo systemctl enable ceph.target

ceph-deploy mgr create node00 node01 node02

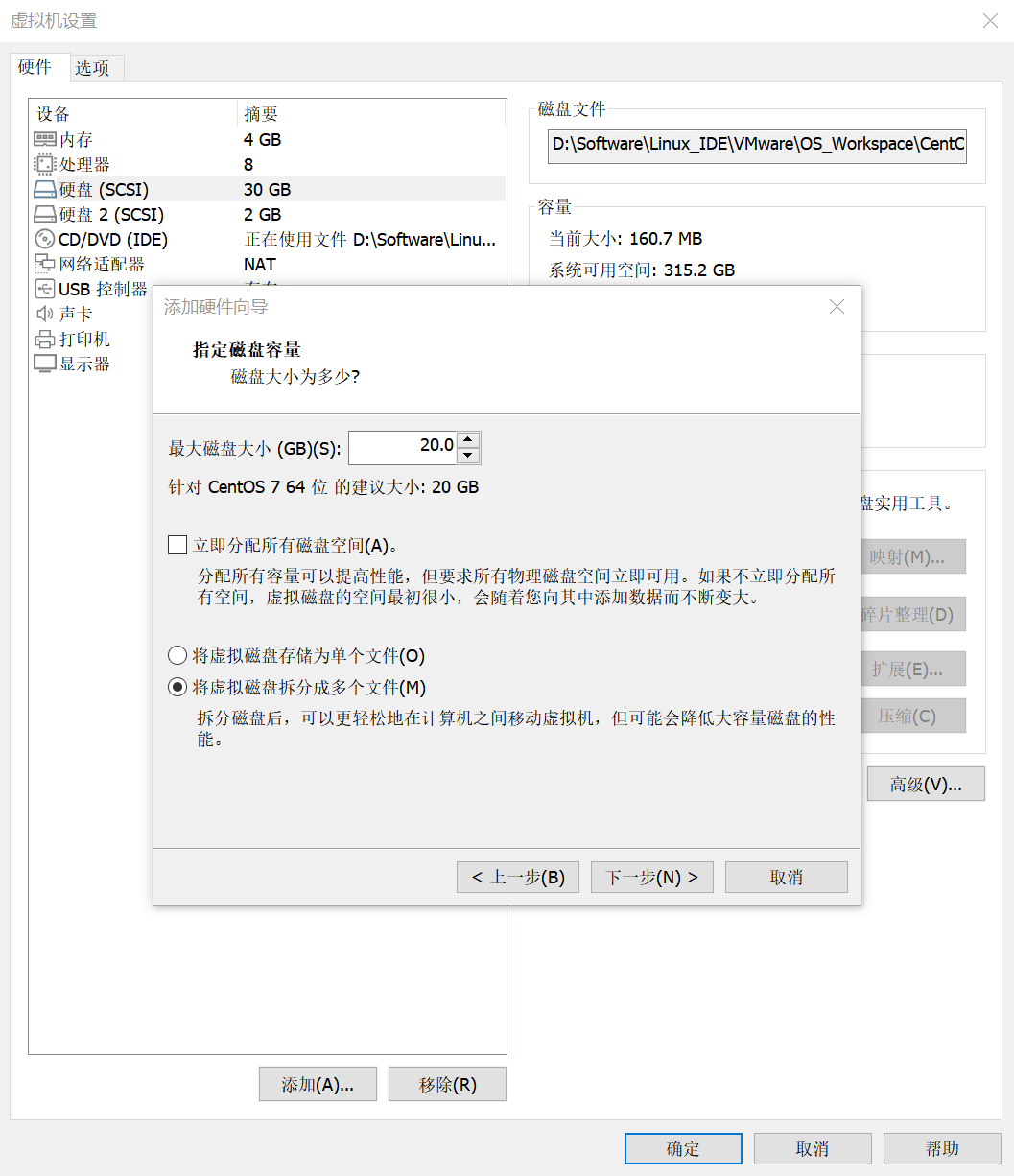

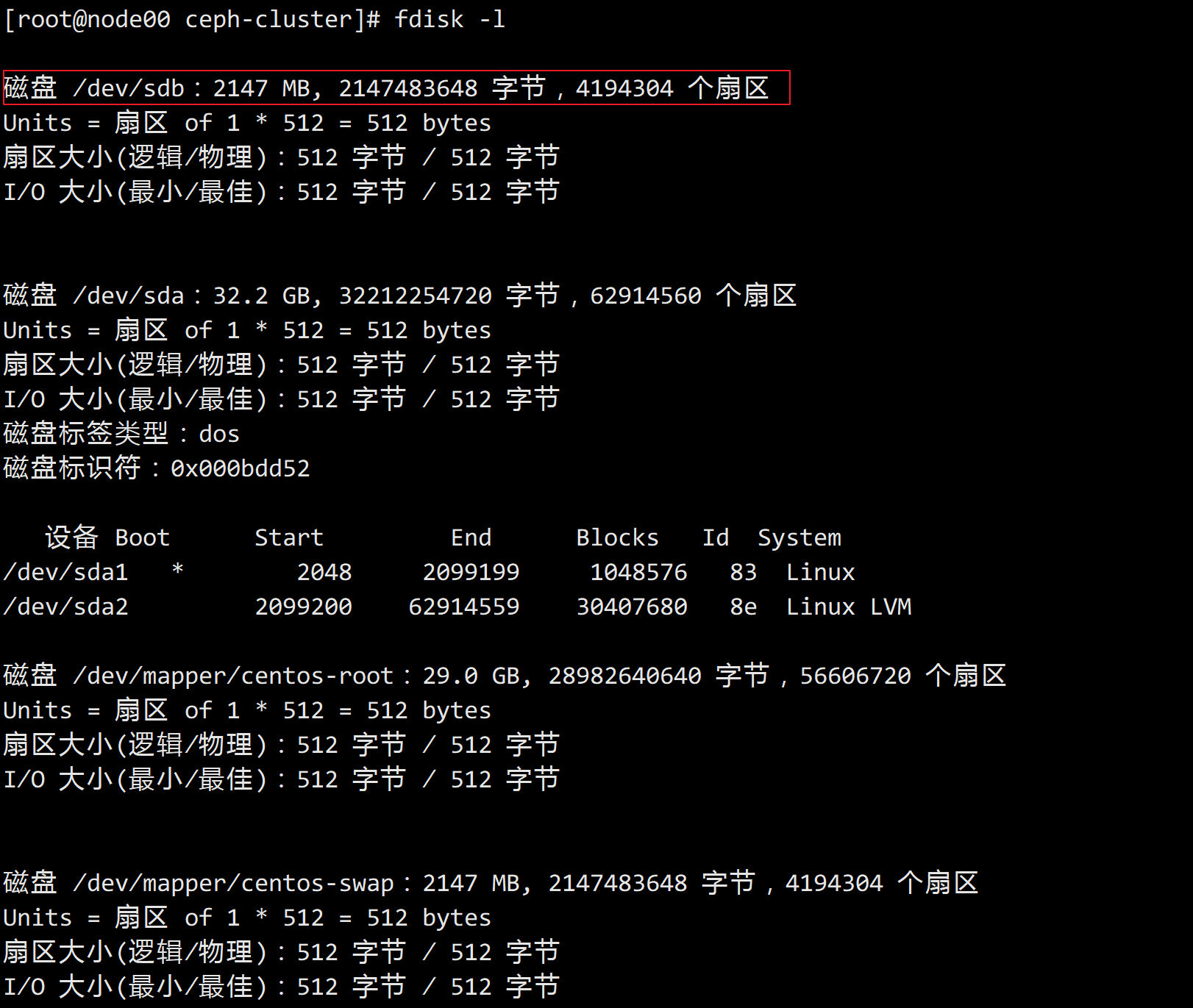

Run fdisk -l to check the attached disks.

Create an OSD on each node:

ceph-deploy osd create --data /dev/sdb node00

ceph-deploy osd create --data /dev/sdb node01

ceph-deploy osd create --data /dev/sdb node02
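
The three commands differ only in the node name, so they can be produced by a loop. The sketch below only prints the commands for review rather than running them; `osd_create_cmds` is a hypothetical helper, and it assumes every node uses the same data device.

```shell
# Print one "ceph-deploy osd create" command per node for the given device.
osd_create_cmds() {
    data_dev="$1"
    shift
    for node in "$@"; do
        printf 'ceph-deploy osd create --data %s %s\n' "$data_dev" "$node"
    done
}

# Dry run: show the commands for the three nodes in this walkthrough.
osd_create_cmds /dev/sdb node00 node01 node02
```

Once the printed commands look right, piping the output to `sh` from the cluster working directory would execute them in order.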

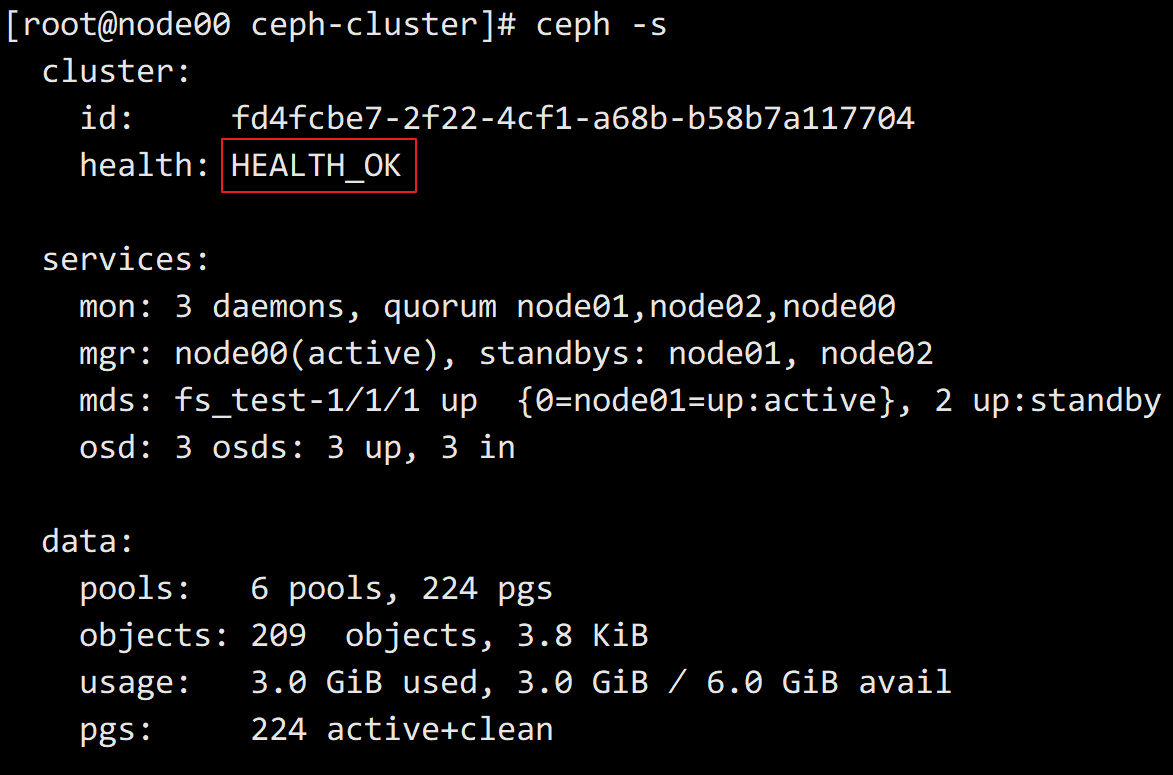

ceph -s

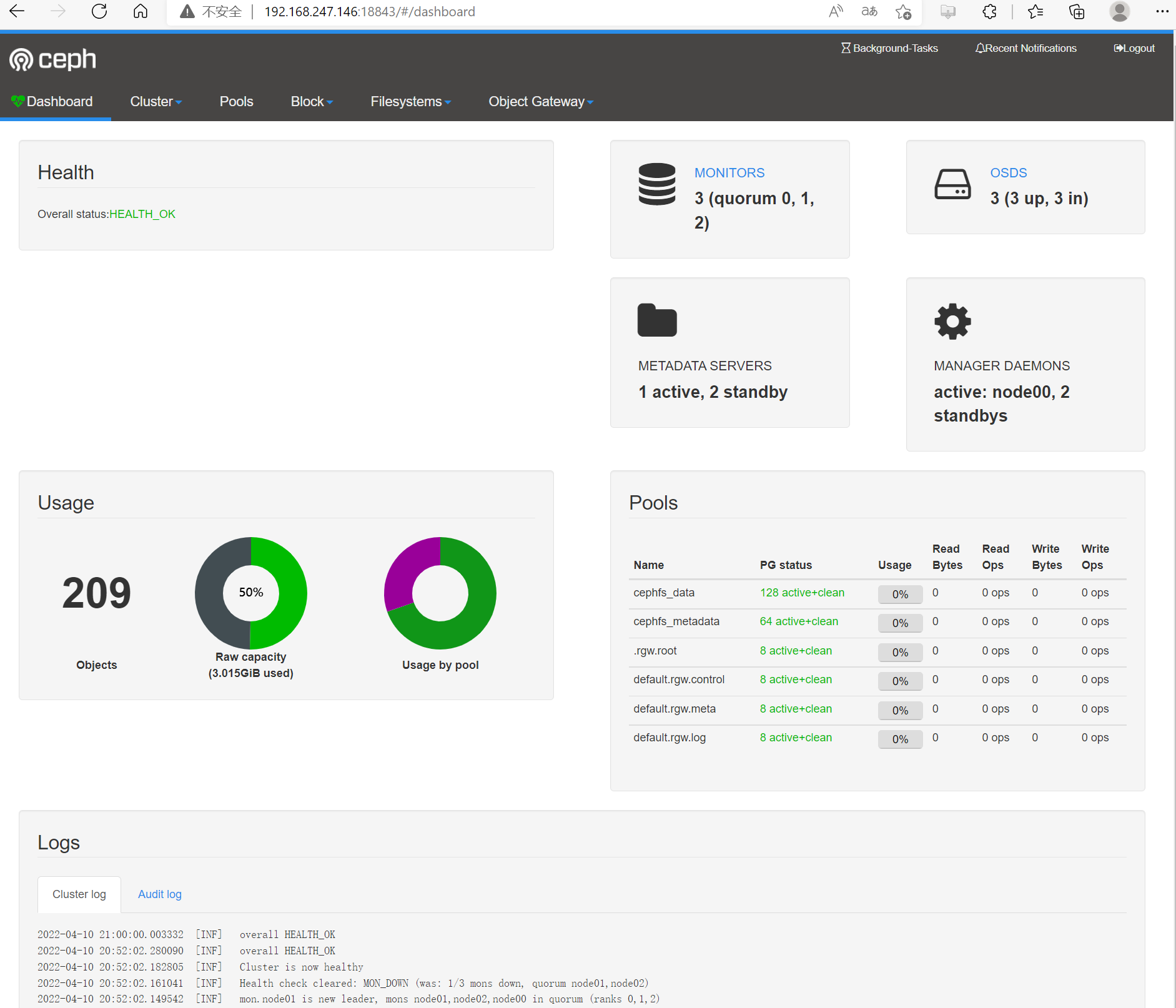

ceph config set mgr mgr/dashboard/server_addr 192.168.247.146

ceph config set mgr mgr/dashboard/server_port 18843

ceph config set mgr mgr/dashboard/server_addr node01

ceph mgr module enable dashboard

ceph dashboard create-self-signed-cert

mkdir mgr-dashboard && cd mgr-dashboard

[root@node00 mgr-dashboard]# pwd

/opt/ceph/ceph-cluster/mgr-dashboard

cd /opt/ceph/ceph-cluster/mgr-dashboard

openssl req -new -nodes -x509 -subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca

[root@linux30 mgr-dashboard]# ll

total 8

-rw-rw-r-- 1 ceph_user ceph_user 1155 Jul 14 02:26 dashboard.crt

-rw-rw-r-- 1 ceph_user ceph_user 1704 Jul 14 02:26 dashboard.key

ceph mgr module disable dashboard

ceph mgr module enable dashboard

ceph config set mgr mgr/dashboard/server_addr 192.168.247.146

ceph config set mgr mgr/dashboard/server_port 18843

ceph config set mgr mgr/dashboard/ssl false

[root@node00 ceph-cluster]# ceph mgr services

{

"dashboard": "http://192.168.247.146:18843/"

}
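
To reuse the dashboard address in a script, the URL can be pulled out of the JSON shown above. This is a minimal sketch that relies on sed and on the output format in this walkthrough, not a general JSON parser; `dashboard_url` is a hypothetical helper name.

```shell
# Extract the value of the "dashboard" key from `ceph mgr services` output on stdin.
dashboard_url() {
    sed -n 's/.*"dashboard":[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Example with the output shown in this walkthrough:
printf '{\n    "dashboard": "http://192.168.247.146:18843/"\n}\n' | dashboard_url
```

On a live cluster the input would come from `ceph mgr services | dashboard_url`.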

ceph config set mgr mgr/dashboard/server_addr node00

ceph dashboard set-login-credentials admin admin

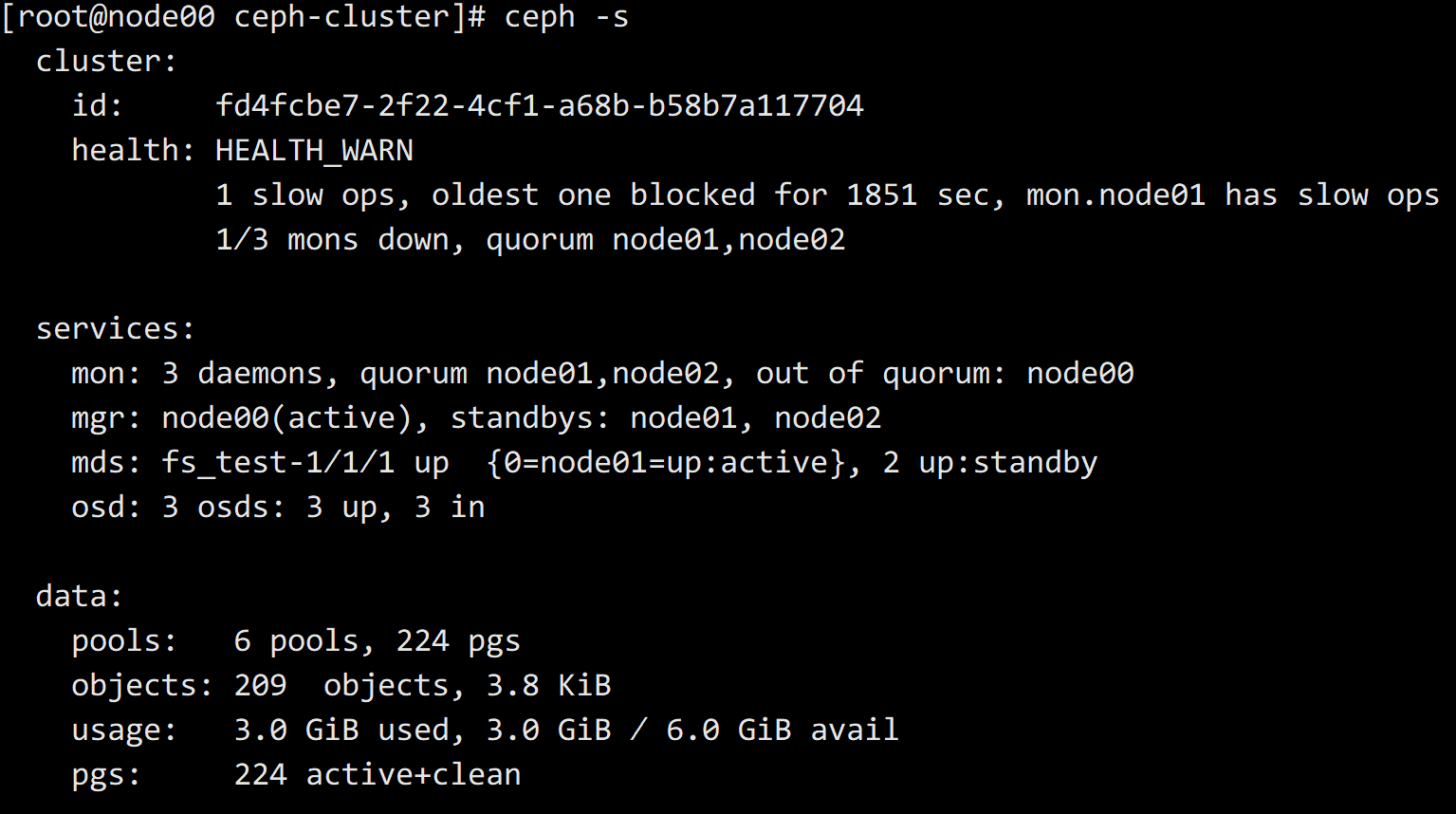

If ceph -s reports a problem:

It may be caused by clock synchronization.

systemctl start ntpd # ntpd had not been started on the newly added node

systemctl restart ceph-mon.target

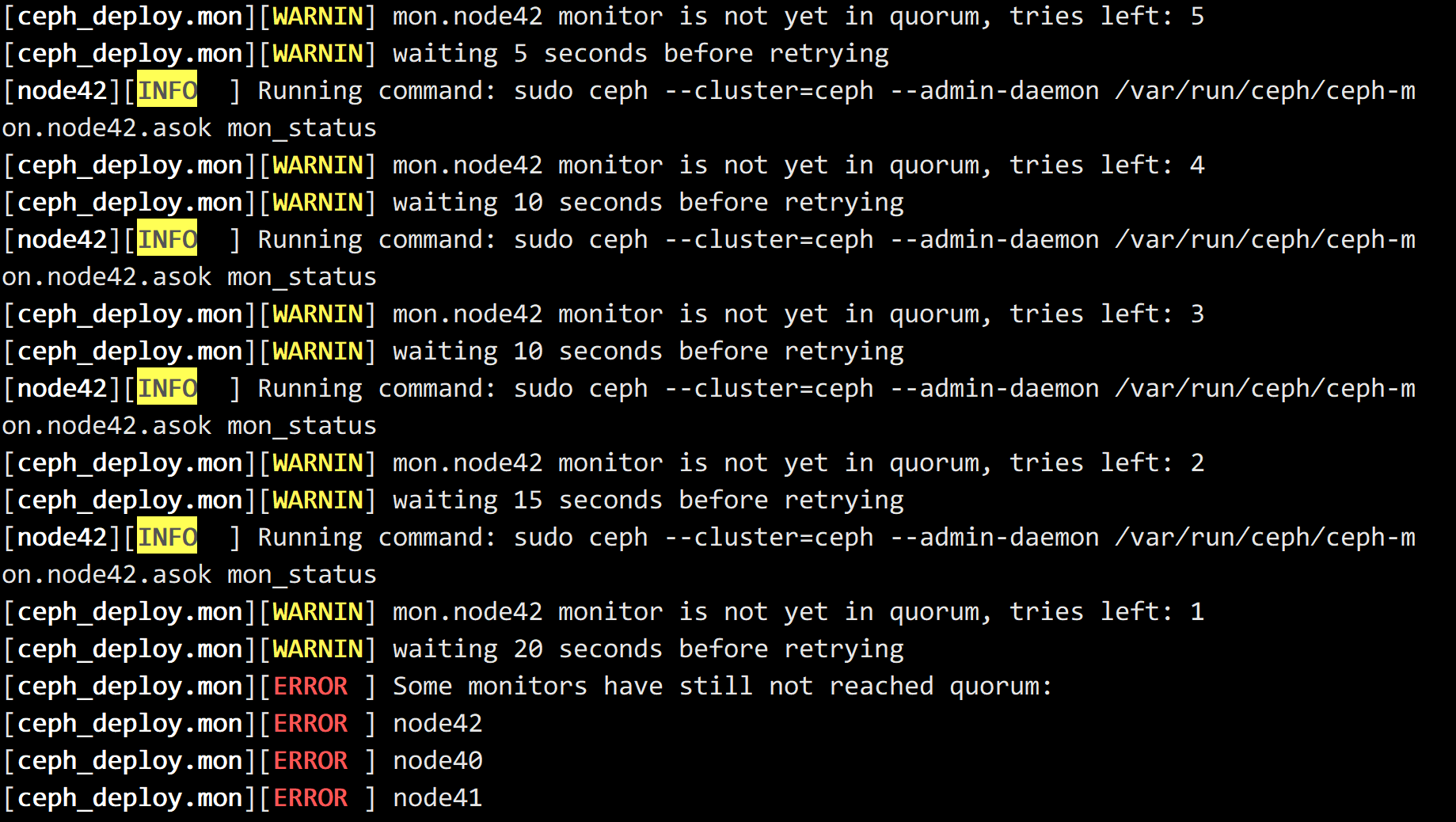

Summary of ceph-deploy mon issues: ceph-deploy mon fails with the error "mon.node40 monitor is not yet in quorum, tries left: 5":

[root@node40 ceph-cluster]# ceph-deploy --overwrite-conf mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf :

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func :

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts node40 node41 node42

[ceph_deploy.mon][DEBUG ] detecting platform for host node40 ...

[node40][DEBUG ] connection detected need for sudo

[node40][DEBUG ] connected to host: node40

[node40][DEBUG ] detect platform information from remote host

[node40][DEBUG ] detect machine type

[node40][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.9.2009 Core

[node40][DEBUG ] determining if provided host has same hostname in remote

[node40][DEBUG ] get remote short hostname

[node40][DEBUG ] deploying mon to node40

[node40][DEBUG ] get remote short hostname

[node40][DEBUG ] remote hostname: node40

[node40][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node40][DEBUG ] create the mon path if it does not exist

[node40][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node40/done

[node40][DEBUG ] create a done file to avoid re-doing the mon deployment

[node40][DEBUG ] create the init path if it does not exist

[node40][INFO ] Running command: sudo systemctl enable ceph.target

[node40][INFO ] Running command: sudo systemctl enable ceph-mon@node40

[node40][INFO ] Running command: sudo systemctl start ceph-mon@node40

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[node40][DEBUG ] ********************************************************************************

[node40][DEBUG ] status for monitor: mon.node40

[node40][DEBUG ] {

[node40][DEBUG ] "election_epoch": 1,

[node40][DEBUG ] "extra_probe_peers": [

[node40][DEBUG ] {

[node40][DEBUG ] "addrvec": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.142:6789",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v1"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addrvec": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.141:3300",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v2"

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.141:6789",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v1"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addrvec": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.142:3300",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v2"

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.142:6789",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v1"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] }

[node40][DEBUG ] ],

[node40][DEBUG ] "feature_map": {

[node40][DEBUG ] "mon": [

[node40][DEBUG ] {

[node40][DEBUG ] "features": "0x3ffddff8ffecffff",

[node40][DEBUG ] "num": 1,

[node40][DEBUG ] "release": "luminous"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] "features": {

[node40][DEBUG ] "quorum_con": "0",

[node40][DEBUG ] "quorum_mon": [],

[node40][DEBUG ] "required_con": "0",

[node40][DEBUG ] "required_mon": []

[node40][DEBUG ] },

[node40][DEBUG ] "monmap": {

[node40][DEBUG ] "created": "2022-04-08 14:14:20.855876",

[node40][DEBUG ] "epoch": 0,

[node40][DEBUG ] "features": {

[node40][DEBUG ] "optional": [],

[node40][DEBUG ] "persistent": []

[node40][DEBUG ] },

[node40][DEBUG ] "fsid": "b3299c95-745f-467f-91e4-a3e30c490483",

[node40][DEBUG ] "min_mon_release": 0,

[node40][DEBUG ] "min_mon_release_name": "unknown",

[node40][DEBUG ] "modified": "2022-04-08 14:14:20.855876",

[node40][DEBUG ] "mons": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.140:6789/0",

[node40][DEBUG ] "name": "node40",

[node40][DEBUG ] "public_addr": "192.168.247.140:6789/0",

[node40][DEBUG ] "public_addrs": {

[node40][DEBUG ] "addrvec": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.140:3300",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v2"

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.140:6789",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v1"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] "rank": 0

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.142:6789/0",

[node40][DEBUG ] "name": "node42",

[node40][DEBUG ] "public_addr": "192.168.247.142:6789/0",

[node40][DEBUG ] "public_addrs": {

[node40][DEBUG ] "addrvec": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "192.168.247.142:6789",

[node40][DEBUG ] "nonce": 0,

[node40][DEBUG ] "type": "v1"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] "rank": 1

[node40][DEBUG ] },

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "0.0.0.0:0/1",

[node40][DEBUG ] "name": "node41",

[node40][DEBUG ] "public_addr": "0.0.0.0:0/1",

[node40][DEBUG ] "public_addrs": {

[node40][DEBUG ] "addrvec": [

[node40][DEBUG ] {

[node40][DEBUG ] "addr": "0.0.0.0:0",

[node40][DEBUG ] "nonce": 1,

[node40][DEBUG ] "type": "v1"

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] "rank": 2

[node40][DEBUG ] }

[node40][DEBUG ] ]

[node40][DEBUG ] },

[node40][DEBUG ] "name": "node40",

[node40][DEBUG ] "outside_quorum": [

[node40][DEBUG ] "node40"

[node40][DEBUG ] ],

[node40][DEBUG ] "quorum": [],

[node40][DEBUG ] "rank": 0,

[node40][DEBUG ] "state": "probing",

[node40][DEBUG ] "sync_provider": []

[node40][DEBUG ] }

[node40][DEBUG ] ********************************************************************************

[node40][INFO ] monitor: mon.node40 is running

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host node41 ...

[node41][DEBUG ] connection detected need for sudo

[node41][DEBUG ] connected to host: node41

[node41][DEBUG ] detect platform information from remote host

[node41][DEBUG ] detect machine type

[node41][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.9.2009 Core

[node41][DEBUG ] determining if provided host has same hostname in remote

[node41][DEBUG ] get remote short hostname

[node41][DEBUG ] deploying mon to node41

[node41][DEBUG ] get remote short hostname

[node41][DEBUG ] remote hostname: node41

[node41][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node41][DEBUG ] create the mon path if it does not exist

[node41][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node41/done

[node41][DEBUG ] create a done file to avoid re-doing the mon deployment

[node41][DEBUG ] create the init path if it does not exist

[node41][INFO ] Running command: sudo systemctl enable ceph.target

[node41][INFO ] Running command: sudo systemctl enable ceph-mon@node41

[node41][INFO ] Running command: sudo systemctl start ceph-mon@node41

[node41][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node41.asok mon_status

[node41][DEBUG ] ********************************************************************************

[node41][DEBUG ] status for monitor: mon.node41

[node41][DEBUG ] {

[node41][DEBUG ] "election_epoch": 117,

[node41][DEBUG ] "extra_probe_peers": [],

[node41][DEBUG ] "feature_map": {

[node41][DEBUG ] "mon": [

[node41][DEBUG ] {

[node41][DEBUG ] "features": "0x3ffddff8ffecffff",

[node41][DEBUG ] "num": 1,

[node41][DEBUG ] "release": "luminous"

[node41][DEBUG ] }

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "features": {

[node41][DEBUG ] "quorum_con": "0",

[node41][DEBUG ] "quorum_mon": [],

[node41][DEBUG ] "required_con": "2449958747315912708",

[node41][DEBUG ] "required_mon": [

[node41][DEBUG ] "kraken",

[node41][DEBUG ] "luminous",

[node41][DEBUG ] "mimic",

[node41][DEBUG ] "osdmap-prune",

[node41][DEBUG ] "nautilus"

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "monmap": {

[node41][DEBUG ] "created": "2022-04-08 14:02:08.362899",

[node41][DEBUG ] "epoch": 1,

[node41][DEBUG ] "features": {

[node41][DEBUG ] "optional": [],

[node41][DEBUG ] "persistent": [

[node41][DEBUG ] "kraken",

[node41][DEBUG ] "luminous",

[node41][DEBUG ] "mimic",

[node41][DEBUG ] "osdmap-prune",

[node41][DEBUG ] "nautilus"

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "fsid": "b3299c95-745f-467f-91e4-a3e30c490483",

[node41][DEBUG ] "min_mon_release": 14,

[node41][DEBUG ] "min_mon_release_name": "nautilus",

[node41][DEBUG ] "modified": "2022-04-08 14:02:08.362899",

[node41][DEBUG ] "mons": [

[node41][DEBUG ] {

[node41][DEBUG ] "addr": "192.168.247.141:6789/0",

[node41][DEBUG ] "name": "node41",

[node41][DEBUG ] "public_addr": "192.168.247.141:6789/0",

[node41][DEBUG ] "public_addrs": {

[node41][DEBUG ] "addrvec": [

[node41][DEBUG ] {

[node41][DEBUG ] "addr": "192.168.247.141:6789",

[node41][DEBUG ] "nonce": 0,

[node41][DEBUG ] "type": "v1"

[node41][DEBUG ] }

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "rank": 0

[node41][DEBUG ] },

[node41][DEBUG ] {

[node41][DEBUG ] "addr": "192.168.247.142:6789/0",

[node41][DEBUG ] "name": "node42",

[node41][DEBUG ] "public_addr": "192.168.247.142:6789/0",

[node41][DEBUG ] "public_addrs": {

[node41][DEBUG ] "addrvec": [

[node41][DEBUG ] {

[node41][DEBUG ] "addr": "192.168.247.142:6789",

[node41][DEBUG ] "nonce": 0,

[node41][DEBUG ] "type": "v1"

[node41][DEBUG ] }

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "rank": 1

[node41][DEBUG ] },

[node41][DEBUG ] {

[node41][DEBUG ] "addr": "0.0.0.0:0/1",

[node41][DEBUG ] "name": "node40",

[node41][DEBUG ] "public_addr": "0.0.0.0:0/1",

[node41][DEBUG ] "public_addrs": {

[node41][DEBUG ] "addrvec": [

[node41][DEBUG ] {

[node41][DEBUG ] "addr": "0.0.0.0:0",

[node41][DEBUG ] "nonce": 1,

[node41][DEBUG ] "type": "v1"

[node41][DEBUG ] }

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "rank": 2

[node41][DEBUG ] }

[node41][DEBUG ] ]

[node41][DEBUG ] },

[node41][DEBUG ] "name": "node41",

[node41][DEBUG ] "outside_quorum": [

[node41][DEBUG ] "node41"

[node41][DEBUG ] ],

[node41][DEBUG ] "quorum": [],

[node41][DEBUG ] "rank": 0,

[node41][DEBUG ] "state": "probing",

[node41][DEBUG ] "sync_provider": []

[node41][DEBUG ] }

[node41][DEBUG ] ********************************************************************************

[node41][INFO ] monitor: mon.node41 is running

[node41][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node41.asok mon_status

[ceph_deploy.mon][DEBUG ] detecting platform for host node42 ...

[node42][DEBUG ] connection detected need for sudo

[node42][DEBUG ] connected to host: node42

[node42][DEBUG ] detect platform information from remote host

[node42][DEBUG ] detect machine type

[node42][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.9.2009 Core

[node42][DEBUG ] determining if provided host has same hostname in remote

[node42][DEBUG ] get remote short hostname

[node42][DEBUG ] deploying mon to node42

[node42][DEBUG ] get remote short hostname

[node42][DEBUG ] remote hostname: node42

[node42][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node42][DEBUG ] create the mon path if it does not exist

[node42][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node42/done

[node42][DEBUG ] create a done file to avoid re-doing the mon deployment

[node42][DEBUG ] create the init path if it does not exist

[node42][INFO ] Running command: sudo systemctl enable ceph.target

[node42][INFO ] Running command: sudo systemctl enable ceph-mon@node42

[node42][INFO ] Running command: sudo systemctl start ceph-mon@node42

[node42][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node42.asok mon_status

[node42][ERROR ] admin_socket: exception getting command descriptions: [Errno 2] No such file or directory

[node42][WARNIN] monitor: mon.node42, might not be running yet

[node42][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node42.asok mon_status

[node42][ERROR ] admin_socket: exception getting command descriptions: [Errno 2] No such file or directory

[node42][WARNIN] monitor node42 does not exist in monmap

[ceph_deploy.mon][INFO ] processing monitor mon.node40

[node40][DEBUG ] connection detected need for sudo

[node40][DEBUG ] connected to host: node40

[node40][DEBUG ] detect platform information from remote host

[node40][DEBUG ] detect machine type

[node40][DEBUG ] find the location of an executable

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node40 monitor is not yet in quorum, tries left: 5

[ceph_deploy.mon][WARNIN] waiting 5 seconds before retrying

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node40 monitor is not yet in quorum, tries left: 4

[ceph_deploy.mon][WARNIN] waiting 10 seconds before retrying

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node40 monitor is not yet in quorum, tries left: 3

[ceph_deploy.mon][WARNIN] waiting 10 seconds before retrying

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node40 monitor is not yet in quorum, tries left: 2

[ceph_deploy.mon][WARNIN] waiting 15 seconds before retrying

[node40][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node40 monitor is not yet in quorum, tries left: 1

[ceph_deploy.mon][WARNIN] waiting 20 seconds before retrying

[ceph_deploy.mon][INFO ] processing monitor mon.node41

[node41][DEBUG ] connection detected need for sudo

[node41][DEBUG ] connected to host: node41

[node41][DEBUG ] detect platform information from remote host

[node41][DEBUG ] detect machine type

[node41][DEBUG ] find the location of an executable

[node41][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node41.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node41 monitor is not yet in quorum, tries left: 5

[ceph_deploy.mon][WARNIN] waiting 5 seconds before retrying

[node41][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node41.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node41 monitor is not yet in quorum, tries left: 4

[ceph_deploy.mon][WARNIN] waiting 10 seconds before retrying

The error means that monitor mon.node40 has not yet joined the quorum, and the deployment fails after several retries.
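
In the log above both monitors report "state": "probing" with an empty quorum list. A monitor that has joined the quorum reports leader or peon instead. The sketch below reads mon_status output and checks that field; `mon_state` and `in_quorum` are hypothetical helper names, and the sed pattern assumes the JSON layout shown in the log.

```shell
# Print the value of the first "state" field found in mon_status output on stdin.
mon_state() {
    sed -n 's/.*"state":[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only when the monitor reports a state that implies quorum membership.
in_quorum() {
    case "$(mon_state)" in
        leader|peon) return 0 ;;
        *) return 1 ;;
    esac
}
```

With the command from the log, a check could read `sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node40.asok mon_status | in_quorum || echo "node40 is not in quorum"`.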

Possible causes, according to references found online:

Firewall

Mismatch between the /etc/hosts entries and the hostname

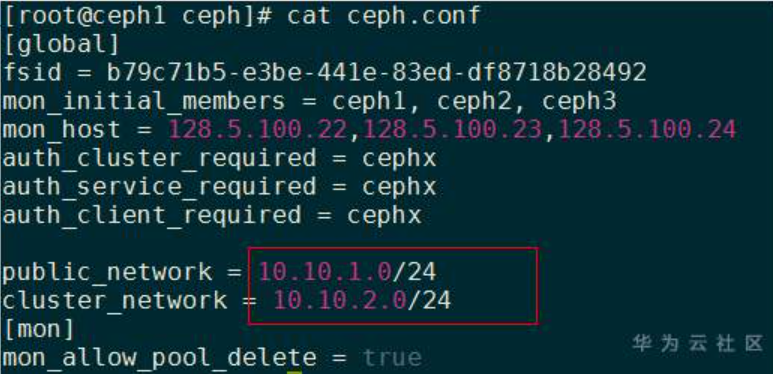

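public_network misconfiguration

Some other documents attribute the failure to the public_network address, as described in the following article:

[分布式存储]Ceph环境部署失败问题总结 ("[Distributed Storage] Summary of Ceph Environment Deployment Failures")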
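
For the hosts and hostname mismatch listed among the causes, a quick check is to look up which IP the hosts file maps to a node name and compare it with the address the monitor should use. This is only a sketch; `lookup_host` is a hypothetical helper that reads a hosts-format file and skips comment lines.

```shell
# Print the first IP that a hosts-format file maps to the given name.
lookup_host() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}
```

On each node, `lookup_host /etc/hosts "$(hostname -s)"` should print that node's address on the public network; an empty result or a different address points to this cause.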
