[Daily filler post]

Buddies, check whether this is the error you're hitting:

```
RuntimeError: CUDA error: device-side assert triggered
CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
For debugging consider passing CUDA_LAUNCH_BLOCKING=1.
```

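First, import the os module at the very top and set that environment variable:

```python
import os

# Set this before importing torch (i.e. before CUDA is initialized) so it takes effect.
# The code below kept failing with a shape-mismatch error.
os.environ['CUDA_LAUNCH_BLOCKING'] = '1'
```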

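With this set, kernel launches run synchronously, so the next time it fails, the traceback points at the call that actually failed and the printed error is more informative.

Here is my original error output. On its own it isn't very useful, so feel free to skip ahead: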
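One gotcha: CUDA reads this variable when it creates its context, so the safest place to set it is before `import torch`. If you set it later, it can be silently ignored. Below is a minimal sketch. `enable_cuda_launch_blocking` is just a hypothetical helper name, not a PyTorch API:

```python
import os
import sys
import warnings


def enable_cuda_launch_blocking() -> None:
    """Make CUDA kernel launches synchronous so errors surface at the failing call."""
    if "torch" in sys.modules:
        # torch initializes CUDA lazily, but if it is already imported a CUDA
        # context may already exist and the variable would then have no effect.
        warnings.warn("torch already imported; CUDA_LAUNCH_BLOCKING may not take effect")
    os.environ["CUDA_LAUNCH_BLOCKING"] = "1"


enable_cuda_launch_blocking()
# import torch  # import torch only after the variable is set
```

From a shell, `CUDA_LAUNCH_BLOCKING=1 python train.py` achieves the same thing without touching the code.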

```
torch.Size([4, 1, 96, 96, 96]) torch.Size([4, 1, 96, 96, 96])
Training (0 / 20 Steps) (loss=4.11153): 2%|▏ | 1/58 [00:14<13:44, 14.47s/it]
torch.Size([4, 1, 96, 96, 96]) torch.Size([4, 1, 96, 96, 96])
Training (1 / 20 Steps) (loss=4.06208): 2%|▏ | 1/58 [00:27<13:44, 14.47s/it]
Validate (X / X Steps) (dice=X.X): 0%| | 0/5 [00:00
torch.Size([2, 321, 307, 178]) torch.Size([2, 321, 307, 178])
----------------------------------------
/pytorch/aten/src/ATen/native/cuda/ScatterGatherKernel.cu:312: operator(): block: [189,0,0], thread: [1,0,0] Assertion `idx_dim >= 0 && idx_dim
/pytorch/aten/src/ATen/native/cuda/ScatterGatherKernel.cu:312: operator(): block: [63,0,0], thread: [60,0,0] Assertion `idx_dim >= 0 && idx_dim
/pytorch/aten/src/ATen/native/cuda/ScatterGatherKernel.cu:312: operator(): block: [149,0,0], thread: [6,0,0] Assertion `idx_dim >= 0 && idx_dim
/pytorch/aten/src/ATen/native/cuda/ScatterGatherKernel.cu:312: operator(): block: [149,0,0], thread: [12,0,0] Assertion `idx_dim >= 0 && idx_dim
Validate (X / X Steps) (dice=X.X): 0%| | 0/5 [00:27
Training (1 / 20 Steps) (loss=4.06208): 2%|▏ | 1/58 [00:55<53:07, 55.92s/it]
```

```
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
Input In [7], in ()
     96 metric_values = []
     97 while global_step
---> 98     global_step, dice_val_best, global_step_best = train(
     99         global_step, train_loader, dice_val_best, global_step_best
    100     )
    101 model.load_state_dict(torch.load(os.path.join(root_dir, "best_metric_model.pth")))

Input In [7], in train(global_step, train_loader, dice_val_best, global_step_best)
     56 if (
     57     global_step % eval_num == 0 and global_step != 0
     58 ) or global_step == max_iterations:
     59     epoch_iterator_val = tqdm(
     60         val_loader, desc="Validate (X / X Steps) (dice=X.X)", dynamic_ncols=True
     61     )
---> 62     dice_val = validation(epoch_iterator_val)
     63     epoch_loss /= step
     64     epoch_loss_values.append(epoch_loss)

Input In [7], in validation(epoch_iterator_val)
     17 # print(val_output_convert[1].shape, val_labels_convert[1].shape)
     18 print("-"*40)
---> 19 print(val_output_convert[0].cpu().numpy().max(),
     20       val_labels_convert[0].cpu().numpy().max())
     21 print(val_output_convert[0].cpu().numpy().min(),
     22       val_labels_convert[0].cpu().numpy().min())
     23 # print(val_labels_convert.max(), val_labels_convert.min())

RuntimeError: CUDA error: device-side assert triggered
CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
For debugging consider passing CUDA_LAUNCH_BLOCKING=1.
```


Here's where my code went wrong:

x, y &#061; (batch["image"].cuda(), batch["label"].cuda())

print(x.shape, y.shape)

logit_map &#061; model(x)

print(logit_map.shape, "FUCKCKKCKCKCCK")

torch.Size([4, 1, 96, 96, 96]) torch.Size([4, 1, 96, 96, 96])

torch.Size([4, 14, 96, 96, 96]) FUCKCKKCKCKCCK

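A quick look at the code: x is clearly the input image, y is the corresponding label, and logit_map is the predicted map.

As you may have guessed, this is 3D segmentation, so the tensors are 5-dimensional, and the trailing [96, 96, 96] is the spatial size of the volume.

The 4 is the batch size. The second dimension is the channel dimension. It is 1 for the input image and for the label, which holds one class index per voxel. For logit_map it is 14, one channel per output class.

I only have foreground and background, so I need just two classes, and that 14 should be 2.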
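Why does a class-count mismatch show up as that `ScatterGatherKernel.cu` assertion? One-hot encoding is usually done with a scatter that writes a 1 at index `label` along the channel dimension. As far as I know, MONAI's `one_hot` is built this way. The assertion checks that the index is in range, so any class index that doesn't fit the number of one-hot channels blows up on the GPU. Below is a minimal plain-Python sketch of a CPU-side sanity check. `check_label_range` is a hypothetical helper, not a MONAI or PyTorch API:

```python
from typing import Iterable


def check_label_range(labels: Iterable[float], num_classes: int) -> None:
    """Raise ValueError if any class index lies outside [0, num_classes).

    A scatter-based one-hot writes a 1 at position `label`, so an
    out-of-range label is what trips the device-side assert on the GPU.
    Labels are often stored as floats, hence the float type hint.
    """
    bad = sorted({v for v in labels if not 0 <= v < num_classes})
    if bad:
        raise ValueError(f"labels {bad} out of range for num_classes={num_classes}")


# Binary foreground/background labels are fine with 2 classes...
check_label_range([0, 1, 1, 0], num_classes=2)

# ...but an argmax over a 14-channel output can produce indices up to 13.
try:
    check_label_range([0, 13], num_classes=2)
except ValueError as err:
    print(err)  # labels [13] out of range for num_classes=2
```

On real tensors, something like `check_label_range(torch.unique(label).tolist(), num_classes)` keeps the list short.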

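If it were really that simple, I wouldn't have bothered writing this post. If you hit this problem, you're almost certainly adapting someone else's code and didn't change everything that needed changing. So let's talk it through today.

- Adapting the dataset: usually this means writing a new Dataset class. If the original dataset's format matches yours, just change the paths.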

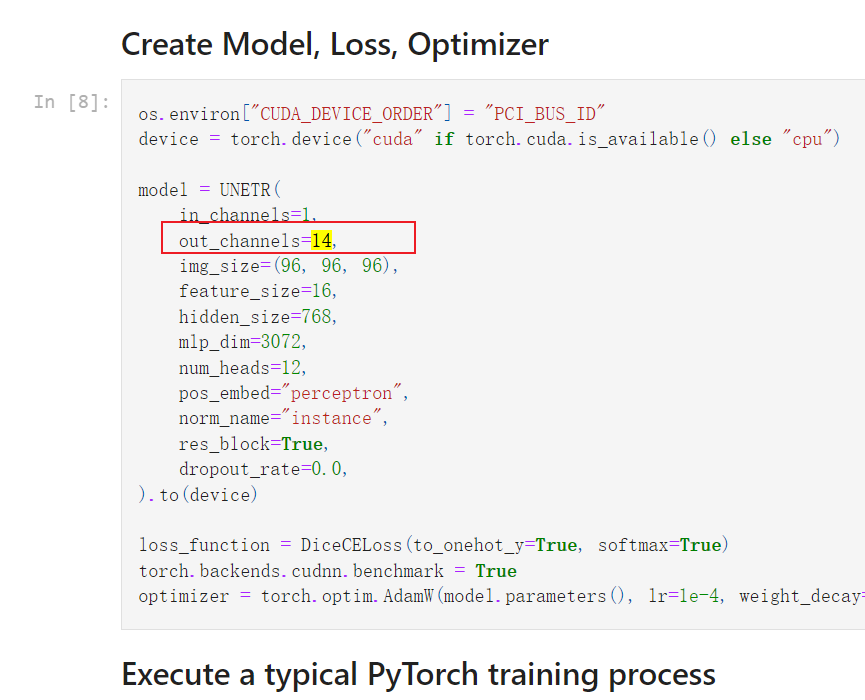
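If your data is just files on disk, MONAI's dictionary-based pipelines expect a list of `{"image": ..., "label": ...}` dicts. Below is a minimal sketch. It assumes each label file has the same file name as its image and lives in a separate folder. If your names differ, adjust the matching rule. `build_datalist` is hypothetical:

```python
from pathlib import Path


def build_datalist(image_dir: str, label_dir: str) -> list[dict[str, str]]:
    """Pair image and label files that share a file name.

    Returns dicts with "image"/"label" keys, the shape that dictionary
    transforms (e.g. MONAI's LoadImaged) work on.
    """
    images = {p.name: p for p in Path(image_dir).iterdir() if p.is_file()}
    labels = {p.name: p for p in Path(label_dir).iterdir() if p.is_file()}
    missing = sorted(images.keys() - labels.keys())
    if missing:
        raise FileNotFoundError(f"no label found for: {missing}")
    return [
        {"image": str(images[name]), "label": str(labels[name])}
        for name in sorted(images)
    ]
```

For example, `train_files = build_datalist("imagesTr", "labelsTr")`, where both folder names are placeholders for your own layout.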

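- Adapting the model: the input is usually three-channel RGB, so the input channel parameter often doesn't need changing. CT volumes like the ones here are single-channel, hence `in_channels=1`. The number of output classes does have to change: set it to however many classes you predict.

(One catch for segmentation: some models count the background as a class and some don't, so you may need to add or subtract 1.)

Generally, the model's input and output channel counts are among the first arguments of its constructor. In the example below, you only need to change `out_channels`: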
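Here is the +1/-1 bookkeeping reduced to one line, as a sketch. `num_output_channels` is a hypothetical helper:

```python
def num_output_channels(num_foreground: int, include_background: bool = True) -> int:
    """Channels the segmentation head needs for `num_foreground` classes."""
    # Softmax-style heads usually reserve one channel for background (+1);
    # per-class sigmoid heads often predict only the foreground channels.
    return num_foreground + 1 if include_background else num_foreground


print(num_output_channels(13))  # 13 organs + background -> 14
print(num_output_channels(1))   # foreground vs background -> 2
```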

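```python
import torch
from monai.networks.nets import UNETR

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = UNETR(
    in_channels=1,
    out_channels=2,  # <------------ change this: number of output classes
    img_size=(96, 96, 96),
    feature_size=16,
    hidden_size=768,
    mlp_dim=3072,
    num_heads=12,  # attention heads, NOT the class count; must divide hidden_size
    pos_embed="perceptron",
    norm_name="instance",
    res_block=True,
    dropout_rate=0.0,
).to(device)
```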
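A cheap way to catch this kind of mismatch before spending any GPU time is to check that the numbers that must agree actually do. Below is a minimal sketch using the hypothetical `check_seg_config`. The divisibility rule reflects MONAI's transformer blocks, which reject a `hidden_size` that isn't divisible by `num_heads`:

```python
def check_seg_config(
    out_channels: int,
    label_onehot: int,
    pred_onehot: int,
    hidden_size: int,
    num_heads: int,
) -> list[str]:
    """Return human-readable problems; an empty list means the config looks consistent."""
    problems = []
    # The model head and both one-hot post-transforms must use the same class count.
    if not out_channels == label_onehot == pred_onehot:
        problems.append(
            f"class counts disagree: out_channels={out_channels}, "
            f"to_onehot(label)={label_onehot}, to_onehot(pred)={pred_onehot}"
        )
    # Multi-head attention splits hidden_size evenly across heads.
    if hidden_size % num_heads != 0:
        problems.append(f"hidden_size={hidden_size} not divisible by num_heads={num_heads}")
    return problems


print(check_seg_config(2, 2, 2, hidden_size=768, num_heads=12))    # []
print(check_seg_config(2, 14, 14, hidden_size=768, num_heads=12))  # one class-count problem
```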

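- Adapting the preprocessing (which you can also treat as part of data augmentation): this usually doesn't touch channel or class counts. However, augmentations that suit one image domain may not suit another. For example, medical images typically only use:

Randomly adjust intensity for data augmentation

whereas random rotation may not be a great fit.

- Postprocessing: usually things like NMS, which generally don't need changing.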
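To make "adjust intensity" concrete: the idea is to shift every voxel value by a single random offset, which changes brightness but leaves the geometry alone. MONAI ships transforms for this, such as `RandShiftIntensityd`. The plain-Python sketch below only illustrates the idea, and `rand_shift_intensity` is hypothetical:

```python
import random


def rand_shift_intensity(voxels, max_offset, prob, rng):
    """With probability `prob`, add one offset drawn from [-max_offset, max_offset] to every voxel."""
    if rng.random() >= prob:
        return list(voxels)  # augmentation skipped this time
    offset = rng.uniform(-max_offset, max_offset)  # same offset for the whole volume
    return [v + offset for v in voxels]


rng = random.Random(0)
print(rand_shift_intensity([0.2, 0.5, 0.9], max_offset=0.1, prob=0.5, rng=rng))
```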

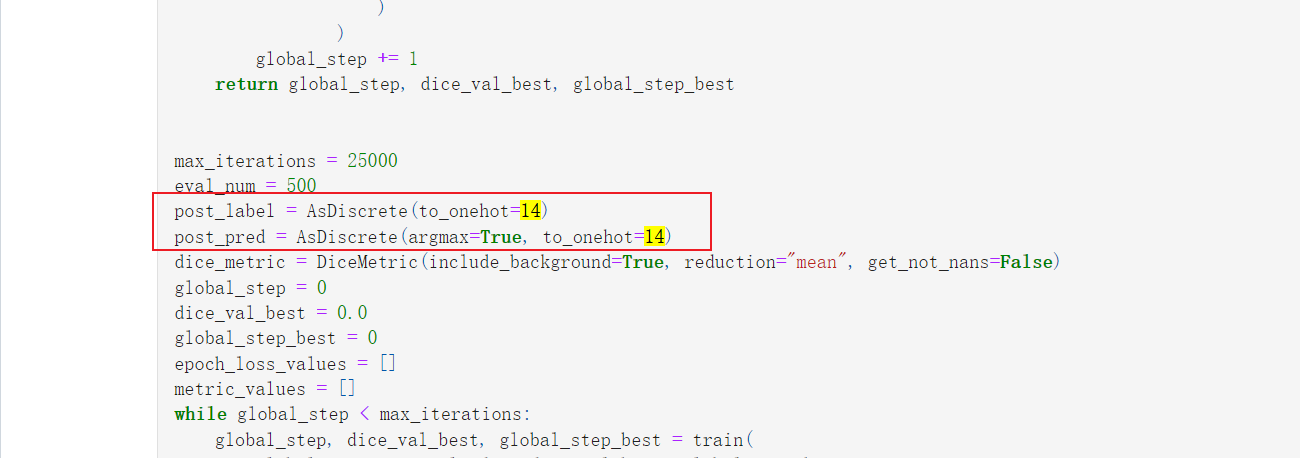

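But in my case, there was also this:

```python
from monai.transforms import AsDiscrete

post_label = AsDiscrete(to_onehot=2)  # needs changing: must equal the number of classes
post_pred = AsDiscrete(argmax=True, to_onehot=2)  # needs changing: same here
```

The official docs explain it like this: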

Execute after model forward to transform model output to discrete values.

It can complete below operations:

- execute `argmax` for input logits values.

- threshold input value to 0.0 or 1.0.

- convert input value to One-Hot format.

- round the value to the closest integer.

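In short, it discretizes your output. The moment you see one_hot, your eyes should light up: the one-hot length depends on how many classes you have, so this has to change too.

The loss and optimizer that follow generally don't need changing. Tweak them if you feel like it.

Here's a not-so-clever trick, again explained with the example: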
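Here is roughly what `argmax=True, to_onehot=n` does for a single voxel, as a plain-Python sketch. It is not MONAI's actual implementation, and `argmax_onehot` is hypothetical. It shows why `to_onehot` must match `out_channels`: the argmax over an N-channel output can be as large as N - 1, and an n-way one-hot has nowhere to put it when n < N. On the GPU you get the scatter assertion instead of a friendly Python exception:

```python
def argmax_onehot(channel_logits: list[float], num_classes: int) -> list[int]:
    """Argmax over one voxel's channel logits, then one-hot encode the winner."""
    cls = max(range(len(channel_logits)), key=channel_logits.__getitem__)
    if not 0 <= cls < num_classes:
        # This is the case that becomes a device-side assert inside a CUDA scatter.
        raise IndexError(f"class {cls} does not fit a {num_classes}-way one-hot")
    return [1 if c == cls else 0 for c in range(num_classes)]


print(argmax_onehot([0.1, 2.0], num_classes=2))  # [0, 1]

logits = [0.0] * 14  # a 14-channel output...
logits[13] = 5.0     # ...whose winning class is 13
try:
    argmax_onehot(logits, num_classes=2)  # ...but to_onehot=2
except IndexError as err:
    print(err)  # class 13 does not fit a 2-way one-hot
```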

https://github.com/Project-MONAI/research-contributions/tree/master/UNETR/BTCV

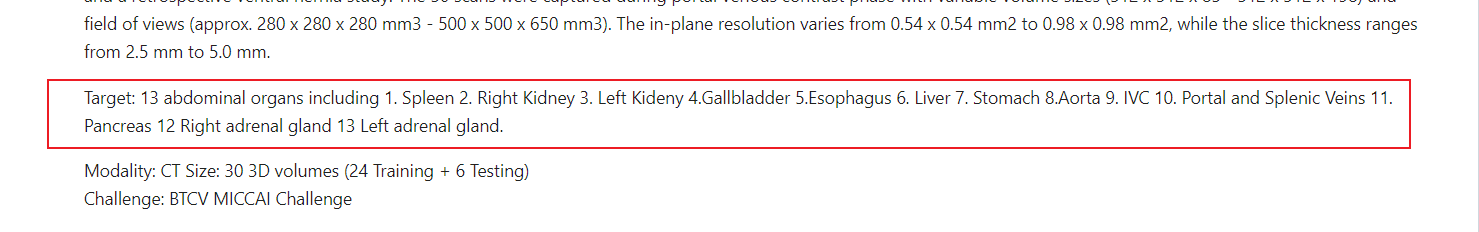

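The example is organ segmentation: 13 organs plus the background, 14 classes in total.

So there are only three places to change: `out_channels` and the two `to_onehot` values. On that page, press Ctrl + F, search for 14, and go through the hits one by one to see which need changing.

Such a dumb-simple method, and I didn't think of it at first...

Partly based on:

https://blog.csdn.net/Penta_Kill_5/article/details/118085718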
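If you export the notebook to a `.py` file, you can script the Ctrl + F trick too. Below is a small sketch. `find_literal` is a hypothetical helper that skips lookalikes such as 140 or 3.14:

```python
import re


def find_literal(source: str, value: int) -> list[tuple[int, str]]:
    """Return (line_number, line) for each line containing `value` as a standalone number."""
    # Reject matches glued to word characters or a decimal point (140, x_14, 3.14).
    pattern = re.compile(rf"(?<![\w.]){value}(?![\w.])")
    return [
        (i, line.strip())
        for i, line in enumerate(source.splitlines(), start=1)
        if pattern.search(line)
    ]


snippet = """model = UNETR(in_channels=1, out_channels=14, img_size=(96, 96, 96))
post_label = AsDiscrete(to_onehot=14)
post_pred = AsDiscrete(argmax=True, to_onehot=14)
lr = 1e-4  # 140 and 3.14 are not matched"""

for lineno, line in find_literal(snippet, 14):
    print(lineno, line)
```

On a real file: `find_literal(Path("train.py").read_text(), 14)`, where `train.py` is a placeholder name.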