Click "知了小巷" above to follow,

and set it as "Pinned" or "Starred" to get new posts first.

Building, Deploying, Configuring, and Running Apache Atlas from Source

Apache Atlas source repository:

https://github.com/apache/atlas.git

Official documentation:

https://atlas.apache.org/#/

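Continued from the previous post, [Getting Started with Apache Atlas Metadata Management].

Contents:

1. Preparing the big data components

2. Building and packaging Apache Atlas from source

3. Integrating Apache Atlas with external components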

Component versions used in this walkthrough:

| Component | Version |
|---|---|
| Hadoop | 3.1.1 |
| Zookeeper | 3.4.12 |
| Kafka | 2.11-0.11.0.2 (Kafka 0.11.0.2 built for Scala 2.11) |
| HBase | 2.1.0 |
| Solr | 7.5.0 |
| Hive | 3.1.1 |

Hadoop cluster (local, single machine)

$ hadoop version

Hadoop 3.1.1

$ start-dfs.sh

$ start-yarn.sh

$ jps -l

62000 org.apache.hadoop.hdfs.server.namenode.NameNode

62305 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager

62194 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode

62389 org.apache.hadoop.yarn.server.nodemanager.NodeManager

27495 org.apache.hadoop.util.RunJar

62092 org.apache.hadoop.hdfs.server.datanode.DataNode
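Each service in this walkthrough is checked by reading `jps -l` output. The hypothetical helper below automates that check. It assumes only the `<pid> <main class>` line format shown above. The set of expected classes is simply the five Hadoop daemons listed in this output; extend it for the other services as needed.

```python
# Hypothetical helper (not part of Hadoop or Atlas): compare `jps -l` output
# against the main classes expected for the local Hadoop daemons.
EXPECTED_HADOOP = {
    "org.apache.hadoop.hdfs.server.namenode.NameNode",
    "org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode",
    "org.apache.hadoop.hdfs.server.datanode.DataNode",
    "org.apache.hadoop.yarn.server.resourcemanager.ResourceManager",
    "org.apache.hadoop.yarn.server.nodemanager.NodeManager",
}


def parse_jps(output: str) -> dict:
    """Map main class -> PID from `jps -l` lines of the form '<pid> <class>'."""
    procs = {}
    for line in output.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and parts[0].isdigit():
            procs[parts[1].strip()] = int(parts[0])
    return procs


def missing_processes(output: str, expected=EXPECTED_HADOOP) -> set:
    """Return the expected main classes that do not appear in the output."""
    return set(expected) - set(parse_jps(output))
```

To use it, pass in the captured output, for example `subprocess.run(["jps", "-l"], capture_output=True, text=True).stdout`. An empty result means every listed daemon is up.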

Zookeeper cluster (local, single machine)

$ zkServer.sh start

$ jps -l|grep zookeeper

62593 org.apache.zookeeper.server.quorum.QuorumPeerMain

Kafka cluster (local, single machine)

$ kafka-server-start.sh -daemon kafka_2.11-0.11.0.2/config/server.properties

$ jps -l|grep kafka

63383 kafka.Kafka

HBase cluster (local, single machine)

$ start-hbase.sh

$ jps -l|grep hbase

63608 org.apache.hadoop.hbase.regionserver.HRegionServer

63512 org.apache.hadoop.hbase.master.HMaster

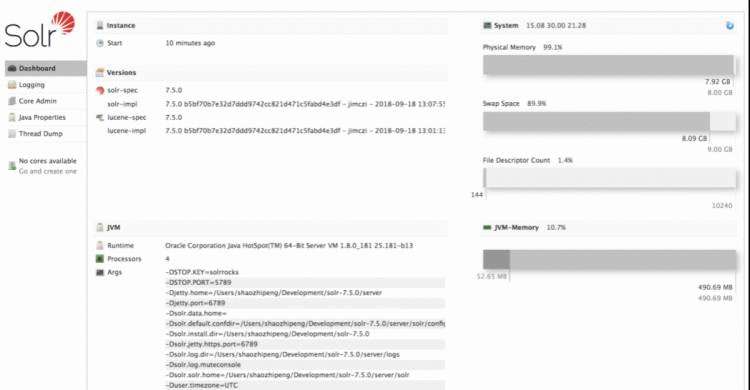

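Solr (local, single machine)

The Solr version used here, 7.5.0, is the one set in the Atlas 2.1 source pom.xml; the versions of the other components do not all match the pom:

<solr.version>7.5.0</solr.version>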

Solr release archive:

http://archive.apache.org/dist/lucene/solr/

Download the prebuilt package directly:

$ wget http://archive.apache.org/dist/lucene/solr/7.5.0/solr-7.5.0.zip

$ unzip solr-7.5.0.zip

$ cd solr-7.5.0

$ ./bin/solr start -p 8983

$ lsof -i:8983

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

java 80795 shaozhipeng 129u IPv6 0x7ecef7d3412b64e9 0t0 TCP *:smc-https (LISTEN)
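`lsof -i:<port>` confirms that a service is listening. A portable alternative is to attempt a TCP connection from Python. The sketch below does that. The port map is an assumption collected from the defaults used in this walkthrough (ZooKeeper 2181, Kafka 9092, Solr 8983, Hive metastore 9083, Atlas 21000).

```python
import socket

# Ports used in this walkthrough (assumed defaults; adjust to your setup).
SERVICE_PORTS = {
    "Zookeeper": 2181,
    "Kafka": 9092,
    "Solr": 8983,
    "Hive metastore": 9083,
    "Atlas": 21000,
}


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


def check_services(host: str = "127.0.0.1", ports=SERVICE_PORTS) -> dict:
    """Map each service name to whether its port accepts connections."""
    return {name: is_listening(host, port) for name, port in ports.items()}
```

A successful connection only shows that something accepts TCP on that port. It does not prove the service is healthy, so the browser check below is still worthwhile.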

Open in a browser:

http://127.0.0.1:8983

Hive

Start the metastore (backed by MySQL):

$ hive --version

Hive 3.1.1

$ hive --service metastore &

$ lsof -i:9083

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

java 27495 shaozhipeng 491u IPv6 0x7ecef7d340adef29 0t0 TCP *:9083 (LISTEN)

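# PID 27495 is the org.apache.hadoop.util.RunJar process from the earlier jps output, i.e. the Hive metastore

Top-voted Stack Overflow questions tagged apache-atlas: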

https://stackoverflow.com/questions/tagged/apache-atlas?sort=votes

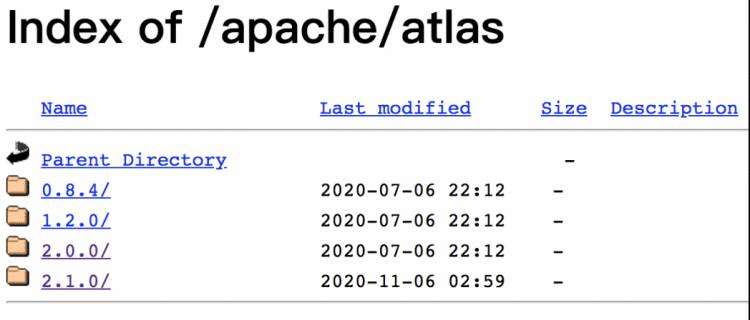

Download the source package:

https://mirrors.tuna.tsinghua.edu.cn/apache/atlas/

$ wget https://mirrors.tuna.tsinghua.edu.cn/apache/atlas/2.1.0/apache-atlas-2.1.0-sources.tar.gz

$ tar zxvf apache-atlas-2.1.0-sources.tar.gz

$ ls

apache-atlas-2.1.0-sources.tar.gz apache-atlas-sources-2.1.0

$ cd apache-atlas-sources-2.1.0/

$ mvn --version

Apache Maven 3.6.2 (40f52333136460af0dc0d7232c0dc0bcf0d9e117; 2019-08-27T23:06:16+08:00)

Maven home: /Users/shaozhipeng/Development/apache-maven-3.6.2

Java version: 1.8.0_181, vendor: Oracle Corporation, runtime: /Library/Java/JavaVirtualMachines/jdk1.8.0_181.jdk/Contents/Home/jre

Default locale: zh_CN, platform encoding: UTF-8

OS name: "mac os x", version: "10.13.6", arch: "x86_64", family: "mac"

In Maven's conf/settings.xml, add the Aliyun repository mirror:

<mirror>
  <id>aliyunmaven</id>
  <mirrorOf>*</mirrorOf>
  <name>ali</name>
  <url>https://maven.aliyun.com/repository/public</url>
</mirror>

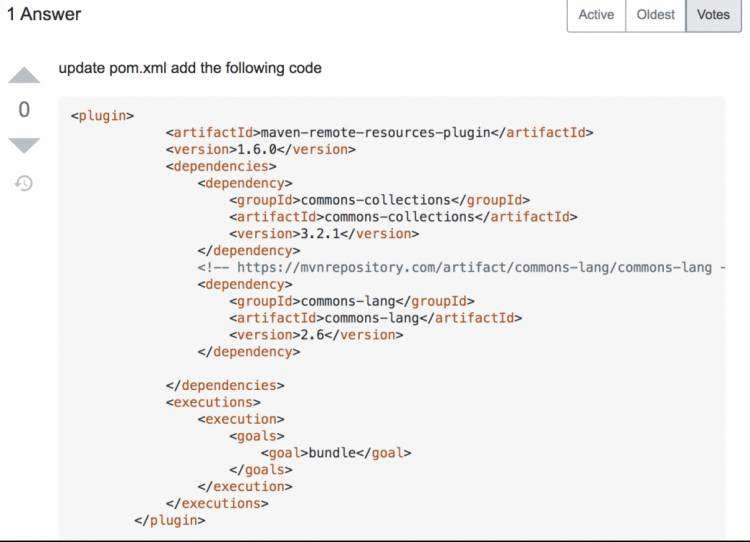

The build may fail with Caused by: java.lang.ClassNotFoundException: org.apache.commons.collections.ExtendedProperties. Solution:

https://stackoverflow.com/questions/51957221/process-resource-bundles-maven-error-calssnotfoundexception-org-apache-commons-c

A similar error, org.apache.maven.plugins:maven-site-plugin:3.7:site: org/apache/commons/lang/StringUtils:

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-site-plugin:3.7:site (default) on project hdfs-model: Execution default of goal org.apache.maven.plugins:maven-site-plugin:3.7:site failed: A required class was missing while executing org.apache.maven.plugins:maven-site-plugin:3.7:site: org/apache/commons/lang/StringUtils

The Aliyun repository does not have the sqoop jar (Could not find artifact org.apache.sqoop:sqoop:jar:1.4.6.2.3.99.0-195 in aliyunmaven):

[ERROR] Failed to execute goal on project sqoop-bridge-shim: Could not resolve dependencies for project org.apache.atlas:sqoop-bridge-shim:jar:2.1.0: Could not find artifact org.apache.sqoop:sqoop:jar:1.4.6.2.3.99.0-195 in aliyunmaven (https://maven.aliyun.com/repository/public) -> [Help 1]

To fix the first two errors, edit pom.xml. Locate the maven-remote-resources-plugin and maven-site-plugin plugins and add the missing dependencies:

<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-remote-resources-plugin</artifactId>
  <version>1.6.0</version>
  <dependencies>
    <dependency>
      <groupId>commons-collections</groupId>
      <artifactId>commons-collections</artifactId>
      <version>3.2.2</version>
    </dependency>
    <dependency>
      <groupId>commons-lang</groupId>
      <artifactId>commons-lang</artifactId>
      <version>2.6</version>
    </dependency>
  </dependencies>
  <executions>
    <execution>
      <goals>
        <goal>bundle</goal>
      </goals>
    </execution>
  </executions>
  <configuration>
    <excludeGroupIds>org.restlet.jee</excludeGroupIds>
  </configuration>
</plugin>

<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-site-plugin</artifactId>
  <version>${maven-site-plugin.version}</version>
  <dependencies>
    <dependency>
      <groupId>commons-lang</groupId>
      <artifactId>commons-lang</artifactId>
      <version>2.6</version>
    </dependency>
  </dependencies>
</plugin>
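Editing pom.xml by hand is the simplest approach. If the same patch has to be applied repeatedly, for example in a CI build, a script can add a dependency to a named plugin instead. The sketch below is a hypothetical helper built on Python's `xml.etree.ElementTree`. It assumes the standard POM namespace `http://maven.apache.org/POM/4.0.0`, and it skips the insert when the dependency is already present.

```python
import xml.etree.ElementTree as ET

POM_NS = "http://maven.apache.org/POM/4.0.0"  # assumed standard POM namespace
NS = {"m": POM_NS}


def add_plugin_dependency(pom_xml: str, plugin_artifact: str,
                          group_id: str, artifact_id: str, version: str) -> str:
    """Add a <dependency> to every <plugin> whose artifactId matches, if absent."""
    ET.register_namespace("", POM_NS)  # serialize without an ns0: prefix
    root = ET.fromstring(pom_xml)
    for plugin in root.iter(f"{{{POM_NS}}}plugin"):
        if plugin.findtext("m:artifactId", namespaces=NS) != plugin_artifact:
            continue
        deps = plugin.find("m:dependencies", NS)
        if deps is None:
            deps = ET.SubElement(plugin, f"{{{POM_NS}}}dependencies")
        already_there = any(
            d.findtext("m:groupId", namespaces=NS) == group_id
            and d.findtext("m:artifactId", namespaces=NS) == artifact_id
            for d in deps.findall("m:dependency", NS)
        )
        if not already_there:
            dep = ET.SubElement(deps, f"{{{POM_NS}}}dependency")
            for tag, text in (("groupId", group_id),
                              ("artifactId", artifact_id),
                              ("version", version)):
                ET.SubElement(dep, f"{{{POM_NS}}}{tag}").text = text
    return ET.tostring(root, encoding="unicode")
```

Note that ElementTree discards XML comments and original formatting when it rewrites the file. For a one-off fix, the manual edit above is usually the better choice.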

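For the failed sqoop jar download, the jar is available on Baidu Netdisk: link: https://pan.baidu.com/s/1FWHK7AGPQQI0OdSFbTE7tg password: gjfn

The sqoop version set in pom.xml:

<sqoop.version>1.4.6.2.3.99.0-195</sqoop.version>

Install the jar into the local Maven repository: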

$ mvn install:install-file -Dfile=sqoop-1.4.6.2.3.99.0-195.jar -DgroupId=org.apache.sqoop -DartifactId=sqoop -Dversion=1.4.6.2.3.99.0-195 -Dpackaging=jar

...

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 0.644 s

...
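To confirm the install worked, check whether the jar landed where Maven's default local-repository layout puts it. In that layout, dots in the groupId become directories, followed by artifactId/version/artifactId-version.packaging. The helper below is hypothetical. It assumes the default `~/.m2/repository` location, which a `<localRepository>` setting in settings.xml would override.

```python
from pathlib import Path


def local_repo_path(group_id: str, artifact_id: str, version: str,
                    packaging: str = "jar", repo=None) -> Path:
    """Path of an artifact under Maven's default local-repository layout."""
    base = Path(repo) if repo is not None else Path.home() / ".m2" / "repository"
    return base.joinpath(*group_id.split("."), artifact_id, version,
                         f"{artifact_id}-{version}.{packaging}")
```

For the sqoop jar, `local_repo_path("org.apache.sqoop", "sqoop", "1.4.6.2.3.99.0-195").is_file()` should be `True` after a successful `install:install-file`.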

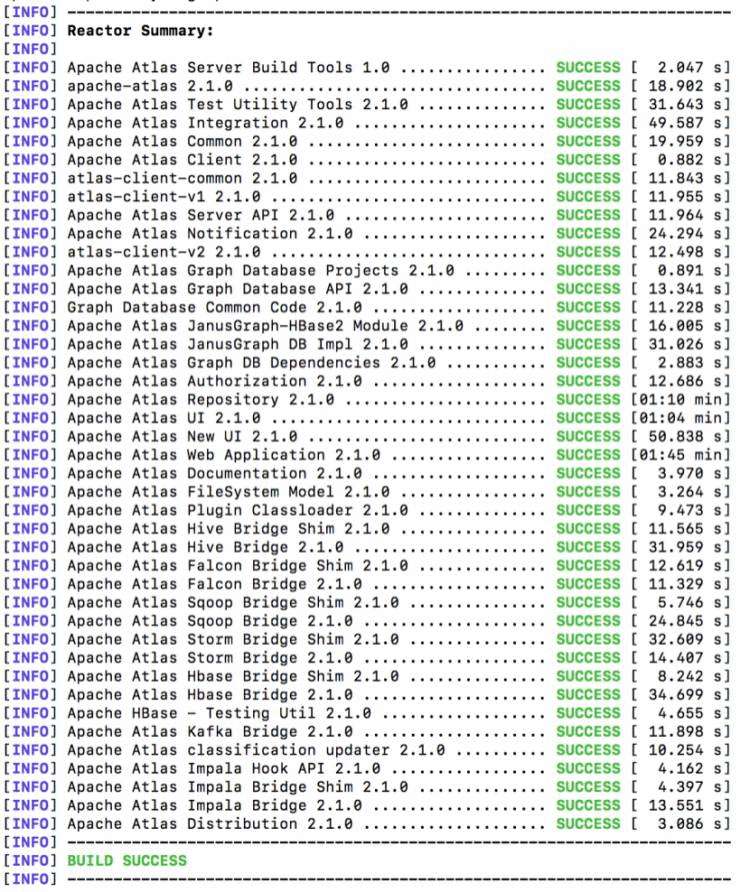

Build from source:

$ mvn clean -DskipTests install

Package (you can also run this directly, without the install step above):

$ mvn clean -DskipTests package -Pdist

Packaging finished in under 8 minutes here. Inspect the packaged files:

$ cd distro/

$ ls

pom.xml src target

$ ls target/

META-INF apache-atlas-2.1.0-hive-hook.tar.gz apache-atlas-2.1.0-storm-hook

apache-atlas-2.1.0-bin apache-atlas-2.1.0-impala-hook apache-atlas-2.1.0-storm-hook.tar.gz

apache-atlas-2.1.0-bin.tar.gz apache-atlas-2.1.0-impala-hook.tar.gz archive-tmp

apache-atlas-2.1.0-classification-updater apache-atlas-2.1.0-kafka-hook atlas-distro-2.1.0.jar

apache-atlas-2.1.0-classification-updater.zip apache-atlas-2.1.0-kafka-hook.tar.gz bin

apache-atlas-2.1.0-falcon-hook apache-atlas-2.1.0-server conf

apache-atlas-2.1.0-falcon-hook.tar.gz apache-atlas-2.1.0-server.tar.gz maven-archiver

apache-atlas-2.1.0-hbase-hook apache-atlas-2.1.0-sources.tar.gz maven-shared-archive-resources

apache-atlas-2.1.0-hbase-hook.tar.gz apache-atlas-2.1.0-sqoop-hook rat.txt

apache-atlas-2.1.0-hive-hook apache-atlas-2.1.0-sqoop-hook.tar.gz test-classes

Apache Atlas depends on Solr (or Elasticsearch), HBase (or Cassandra), and Kafka.

Copy apache-atlas-2.1.0-server.tar.gz out of distro/target and extract it:

$ ls

apache-atlas-2.1.0

$ ls apache-atlas-2.1.0

DISCLAIMER.txt LICENSE NOTICE bin conf models server tools

$ cd apache-atlas-2.1.0/conf/

$ ls

atlas-application.properties atlas-log4j.xml cassandra.yml.template solr zookeeper

atlas-env.sh atlas-simple-authz-policy.json hbase users-credentials.properties

Configure the HBase storage backend (atlas.graph.storage.hostname):

$ vi atlas-application.properties

atlas.graph.storage.backend=hbase2

atlas.graph.storage.hbase.table=apache_atlas_janus

#Hbase

#For standalone mode , specify localhost

#for distributed mode, specify zookeeper quorum here

atlas.graph.storage.hostname=localhost:2181

atlas.graph.storage.hbase.regions-per-server=1

atlas.graph.storage.lock.wait-time=10000
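The atlas-application.properties edits in this section (HBase here, then the Solr, Kafka, and server settings below) can also be applied by a script instead of `vi`. The function below is a hypothetical, simplified sketch. It rewrites active `key=value` or `key:value` lines in place, leaves commented-out lines alone, and appends keys it does not find. It does not handle every Java properties feature, such as whitespace-only separators or line continuations.

```python
import re

# An active (non-comment) key followed by '=' or ':'.
_KEY_RE = re.compile(r"\s*([^#!\s=:][^=:\s]*)\s*[=:]")


def set_properties(text: str, updates: dict) -> str:
    """Set key=value pairs in .properties text (simplified parser)."""
    remaining = dict(updates)
    out = []
    for line in text.splitlines():
        m = _KEY_RE.match(line)
        if m and m.group(1) in updates:
            key = m.group(1)
            out.append(f"{key}={updates[key]}")  # rewrite the existing assignment
            remaining.pop(key, None)
        else:
            out.append(line)  # comments, blanks and other keys are kept
    out.extend(f"{k}={v}" for k, v in remaining.items())  # keys not found
    return "\n".join(out) + "\n"
```

For example, `set_properties(text, {"atlas.graph.storage.hostname": "localhost:2181"})` produces the HBase setting shown above.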

Copy hbase-site.xml into conf/hbase:

$ cp ~/Development/hbase-2.1.0/conf/hbase-site.xml hbase/

$ ls hbase/

hbase-site.xml hbase-site.xml.template

$ vi atlas-env.sh

export HBASE_CONF_DIR=/Users/shaozhipeng/Development/project/java/atlas-download/apache-atlas-2.1.0/conf/hbase

Then configure the Solr index backend in atlas-application.properties:

# Graph Search Index

atlas.graph.index.search.backend=solr

#Solr: comment out the cloud mode properties below

#Solr cloud mode properties

#atlas.graph.index.search.solr.mode=cloud

#atlas.graph.index.search.solr.zookeeper-url=

#atlas.graph.index.search.solr.zookeeper-connect-timeout=60000

#atlas.graph.index.search.solr.zookeeper-session-timeout=60000

#atlas.graph.index.search.solr.wait-searcher=true

#Solr http mode properties

atlas.graph.index.search.solr.mode=http

atlas.graph.index.search.solr.http-urls=http://localhost:8983/solr

Copy the Solr configuration shipped with Atlas (conf/solr) to solr-7.5.0/atlas_conf:

$ ls solr/

currency.xml lang protwords.txt schema.xml solrconfig.xml stopwords.txt synonyms.txt

$ cp -rf solr ~/Development/solr-7.5.0/atlas_conf

$ ls ~/Development/solr-7.5.0/atlas_conf/

currency.xml lang protwords.txt schema.xml solrconfig.xml stopwords.txt synonyms.txt

$ ~/Development/solr-7.5.0/bin/solr create -c vertex_index -d ~/Development/solr-7.5.0/atlas_conf

INFO - 2020-12-16 23:36:52.930; org.apache.solr.util.configuration.SSLCredentialProviderFactory; Processing SSL Credential Provider chain: env;sysprop

Created new core 'vertex_index'
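The command above creates the `vertex_index` core. The Atlas installation docs list three Solr indexes: `vertex_index`, `edge_index`, and `fulltext_index`. The hypothetical helper below only builds the `bin/solr create` command lines for all three; it does not run them. It assumes the same `-c`/`-d` flags used above.

```python
import shlex

# Index names listed in the Atlas Solr setup docs.
ATLAS_SOLR_INDEXES = ("vertex_index", "edge_index", "fulltext_index")


def solr_create_commands(solr_home: str, conf_dir: str,
                         indexes=ATLAS_SOLR_INDEXES) -> list:
    """Build one `bin/solr create -c <index> -d <conf_dir>` line per index."""
    solr_bin = f"{solr_home.rstrip('/')}/bin/solr"
    return [shlex.join([solr_bin, "create", "-c", name, "-d", conf_dir])
            for name in indexes]
```

Pass absolute paths. `shlex.join` quotes a leading `~`, so the shell would not expand it. The function requires Python 3.8 or later.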

Configure Kafka notifications (Notification Configs):

$ vi atlas-application.properties

######### Notification Configs #########

atlas.notification.embedded=false

atlas.kafka.data=/Users/shaozhipeng/Development/pseudo/kafka/kafka-logs

atlas.kafka.zookeeper.connect=localhost:2181

atlas.kafka.bootstrap.servers=localhost:9092

atlas.kafka.zookeeper.session.timeout.ms=4000

$ kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --partitions 3 --topic ATLAS_HOOK

Created topic "ATLAS_HOOK".

$ kafka-topics.sh --zookeeper localhost:2181 --create --replication-factor 1 --partitions 3 --topic ATLAS_ENTITIES

Created topic "ATLAS_ENTITIES".

$ kafka-topics.sh --zookeeper localhost:2181 --list

ATLAS_ENTITIES

ATLAS_HOOK
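Atlas uses two notification topics, ATLAS_HOOK and ATLAS_ENTITIES. The small hypothetical helper below checks the `kafka-topics.sh --list` output for both. It assumes one topic name per line, as shown above.

```python
# Notification topics Atlas uses for hook messages and entity changes.
ATLAS_TOPICS = {"ATLAS_HOOK", "ATLAS_ENTITIES"}


def missing_topics(list_output: str, required=ATLAS_TOPICS) -> set:
    """Return required topics absent from `kafka-topics.sh --list` output."""
    present = {line.strip() for line in list_output.splitlines() if line.strip()}
    return set(required) - present
```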

__consumer_offsets

Edit the server properties:

$ vi atlas-application.properties

######### Server Properties #########

atlas.rest.address=http://localhost:21000

# If enabled and set to true, this will run setup steps when the server starts

atlas.server.run.setup.on.start=false

######### Entity Audit Configs #########

atlas.audit.hbase.tablename=apache_atlas_entity_audit

atlas.audit.zookeeper.session.timeout.ms=1000

atlas.audit.hbase.zookeeper.quorum=localhost:2181

Edit atlas-log4j.xml to enable the performance log: find the org.apache.atlas.perf logger and uncomment it:

$ vi atlas-log4j.xml

<appender name="perf_appender" class="org.apache.log4j.DailyRollingFileAppender">
  <param name="file" value="${atlas.log.dir}/atlas_perf.log" />
  <param name="datePattern" value="'.'yyyy-MM-dd" />
  <param name="append" value="true" />
  <layout class="org.apache.log4j.PatternLayout">
    <param name="ConversionPattern" value="%d|%t|%m%n" />
  </layout>
</appender>

<logger name="org.apache.atlas.perf" additivity="false">
  <level value="debug" />
  <appender-ref ref="perf_appender" />
</logger>

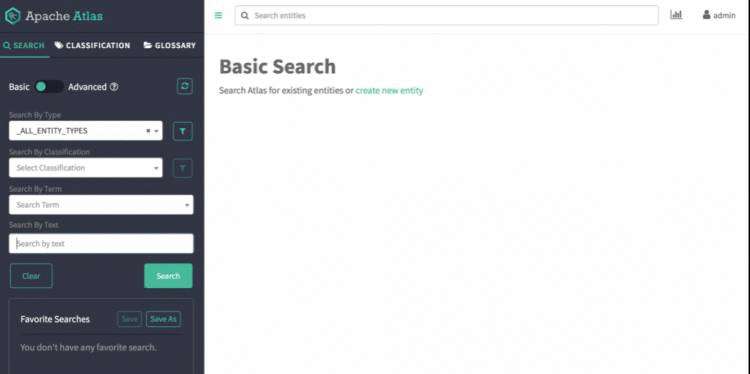

atlas_start.py uses Python 2 syntax; on macOS it can be run directly.

$ ./bin/atlas_start.py

starting atlas on host localhost

starting atlas on port 21000

..............................................................................................................................................................................................................................................................................................................

Apache Atlas Server started!!!

$ jps -l |grep atlas

27180 org.apache.atlas.Atlas

Open in a browser:

http://localhost:21000

Username/password: admin/admin
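Besides the web UI, you can check the server over its REST API. Atlas exposes `GET /api/atlas/admin/version`. The sketch below calls it with HTTP basic auth, using the admin/admin defaults above and only the standard library. The function names are hypothetical. Building the request is separated from sending it, so the first part works without a running server.

```python
import base64
import json
import urllib.request


def atlas_version_request(base_url: str = "http://localhost:21000",
                          user: str = "admin",
                          password: str = "admin") -> urllib.request.Request:
    """Build a GET request for the Atlas admin version endpoint (basic auth)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req = urllib.request.Request(f"{base_url.rstrip('/')}/api/atlas/admin/version")
    req.add_header("Authorization", f"Basic {token}")
    return req


def fetch_atlas_version(timeout: float = 10.0, **kwargs) -> dict:
    """Send the request and decode the JSON body (needs a running Atlas)."""
    with urllib.request.urlopen(atlas_version_request(**kwargs),
                                timeout=timeout) as resp:
        return json.load(resp)
```

With Atlas running, `fetch_atlas_version()` should return a small JSON object that includes the server version.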


京公网安备 11010802041100号 | 京ICP备19059560号-4 | PHP1.CN 第一PHP社区 版权所有
