The behavior may differ between versions. Following suggestions from other users, I ended up with this command:

export --connect jdbc:mysql://172.16.5.100:3306/dw_test --username testuser --password ****** --table che100kv --export-dir /user/hive/warehouse/che100kv0/000000_0 --input-fields-terminated-by \001 -m 1

It failed with: Error during export:

Export job failed!

at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:439)

at org.apache.sqoop.manager.SqlManager.exportTable(SqlManager.java:931)

at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:

<<< Invocation of Sqoop command completed <<<

Hadoop Job IDs executed by Sqoop: job_1534936991079_0934

Intercepting System.exit(1)

<<< Invocation of Main class completed <<<

Failing Oozie Launcher, Main class [org.apache.oozie.action.hadoop.SqoopMain], exit code [1]

Oozie Launcher failed, finishing Hadoop job gracefully

Oozie Launcher, uploading action data to HDFS sequence file: hdfs://master:8020/user/hue/oozie-oozi/0000099-180903155753468-oozie-oozi-W/sqoop-4411--sqoop/action-data.seq

After that I had to add --columns, with the table name and column names matching the data:

export --connect jdbc:mysql://172.16.5.100:3306/dw_test --username testuser --password *** --table che100kv --export-dir /user/hive/warehouse/che100kv0 --input-fields-terminated-by \001 -m 1 --columns db_t_f,k,v --update-key db_t_f --update-mode allowinsert --batch
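Since the export only worked once --columns matched the data, it can help to check how many \001-separated fields each row under --export-dir actually has. A minimal sketch, assuming the files are plain text using Hive's default \001 (Ctrl-A) delimiter; `count_fields` is a hypothetical helper name and the sample rows are made up for illustration:

```shell
# Hypothetical helper: print the distinct per-row field counts of
# \001-delimited text read from stdin.
count_fields() {
  awk -F '\001' '{ print NF }' | sort -u
}

# Made-up sample rows standing in for the Hive files (3 fields each).
printf 'a.b.c\001k1\001v1\nd.e.f\001k2\001\\N\n' | count_fields

# Against the real data one could run something like:
#   hdfs dfs -cat /user/hive/warehouse/che100kv0/* | head -n 20 | count_fields
```

If this prints anything other than a single number equal to the number of names in --columns (three here: db_t_f, k, v), the column list and the data are out of step.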

Then it failed again, this time with a null conversion error:

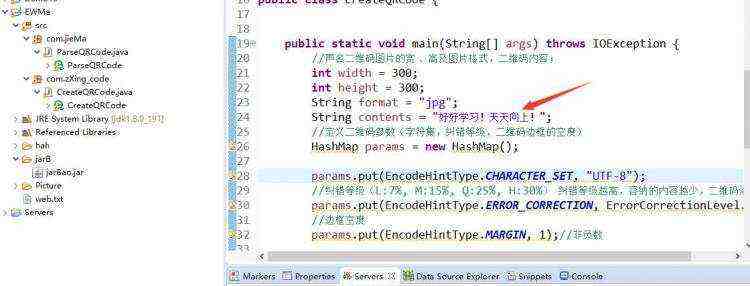

sqoop export --connect "jdbc:mysql://172.16.5.100:3306/dw_test?useUnicode=true&characterEncoding=utf-8" --username testuser --password ********* --table dimbrandstylemoudle --export-dir '/user/hive/warehouse/dimbrandstylemoudle/' --input-null-string "\\\\N" --input-null-non-string "\\\\N" --input-fields-terminated-by "\001" --input-lines-terminated-by "\\n" -m 1

However, because of a quote-escaping bug in HUE, these quoted options cannot all be made to work there at the same time.
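To see why the quoting matters, it helps to look at the string that actually reaches Sqoop once the shell has processed the quotes. A minimal sketch, assuming a POSIX shell; `show_arg` is a hypothetical helper, not part of Sqoop or HUE:

```shell
# Hypothetical helper: print an argument exactly as the program receives it.
show_arg() {
  printf '%s\n' "$1"
}

show_arg '\\N'      # single quotes: backslashes pass through unchanged
show_arg "\\\\N"    # double quotes: each \\ collapses to one backslash
```

Both calls print the same two-backslash string, so the single-quoted and double-quoted spellings used in the commands here are equivalent on a command line. A front end that escapes or strips quotes differently would hand Sqoop a different string, which is presumably what goes wrong in HUE.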

To get more debugging output from Sqoop, add the --verbose flag:

sqoop export --connect jdbc:mysql://192.168.119.129:3306/student?characterEncoding=utf8 --username li72 --password 123 --verbose --table dm_trlog --export-dir /home/bigdata/hive/data/db1.db/trlog --input-fields-terminated-by '\t' --null-non-string '0' --null-string '0';

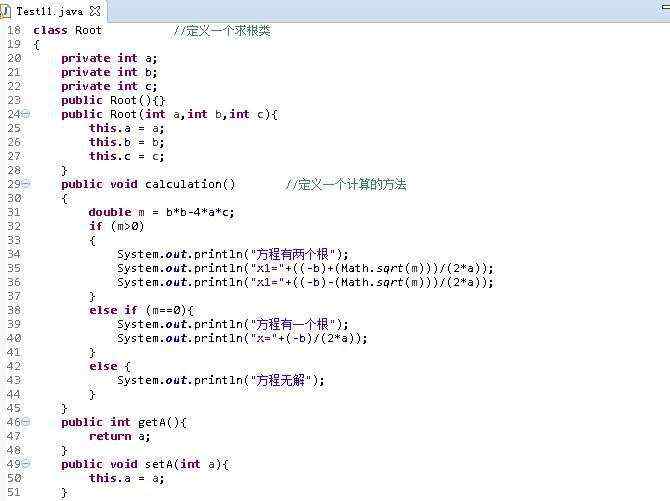

The last-resort fix for type conversion errors: modify the generated Java class and repackage it.

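Every time Sqoop exports to MySQL, it generates a .java file named after the MySQL table, packages it into a JAR, and submits it to Hadoop, where the MapReduce job uses it to parse the data of the Hive table. So, based on the reported error, you can find the offending line, edit that file, compile it, and repackage it. With the --jar-file and --class-name options combined, Sqoop lets you specify a class from your own JAR to parse each row of the Hive table. A complete example: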

./bin/sqoop export --connect "jdbc:mysql://192.168.119.129:3306/student?useUnicode=true&characterEncoding=utf-8" \
  --username li72 --password 123 --table dm_trlog \
  --export-dir /hive/warehouse/trlog --input-fields-terminated-by '\t' \
  --input-null-string '\\N' --input-null-non-string '\\N' \
  --class-name com.li72.trlog \
  --jar-file /tmp/sqoopTempjar/trlog.jar

In the command above, --jar-file specifies the path to the JAR and --class-name specifies the class inside that JAR.

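Because the parsing code is then under your own control, this approach can be used to work around any parsing exception.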
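The compile and repackage steps described above could be scripted roughly as follows. This is only a sketch under assumptions: the `run` and `rebuild_jar` helper names, the build directory, the location of the edited source file, and the classpath placeholder are all hypothetical and must be adapted to the actual installation. With DRY_RUN set, the commands are printed instead of executed.

```shell
# Hypothetical wrapper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: compile a hand-edited generated class and pack it.
#   $1 = edited .java file    $2 = output JAR path
#   $3 = compile classpath    $4 = build directory for .class files
rebuild_jar() {
  java_file=$1
  jar_path=$2
  class_path=$3
  build_dir=$4
  run mkdir -p "$build_dir"
  run javac -classpath "$class_path" -d "$build_dir" "$java_file"
  run jar cf "$jar_path" -C "$build_dir" .
}

# Dry run with placeholder paths; the classpath must really contain the
# Hadoop and Sqoop JARs (assumption: adjust to your cluster).
DRY_RUN=1
rebuild_jar /tmp/sqoopTempjar/src/com/li72/trlog.java \
            /tmp/sqoopTempjar/trlog.jar \
            '/path/to/hadoop-and-sqoop-jars/*' \
            /tmp/sqoopTempjar/classes
```

The resulting JAR path and the class name com.li72.trlog are then what --jar-file and --class-name point at in the export command.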
