The purpose of multiple pipelines in Scrapy:

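A single project may need to crawl several websites. Because the data volume (and the processing required) differs from site to site, you can create multiple pipelines.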

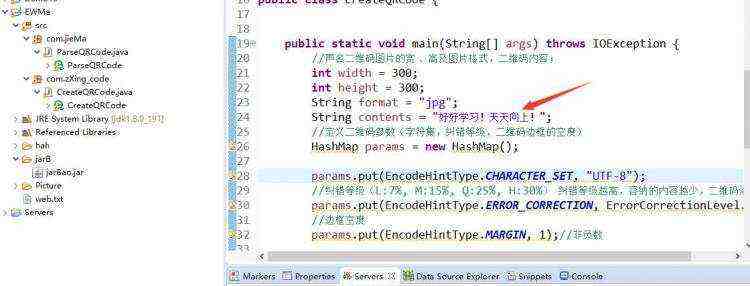
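
Each pipeline only runs if it is enabled in the project's `settings.py` through the `ITEM_PIPELINES` setting. A minimal sketch follows, assuming the project package is named `SpiderAnything` (a guess; the notes do not state the module path). The integer values set the order: items pass through pipelines from the lowest number to the highest, and values are conventionally kept in the 0 to 1000 range.

```python
# settings.py (sketch). The module path "SpiderAnything.pipelines" is an
# assumption made for illustration; use your own project's package name.
ITEM_PIPELINES = {
    "SpiderAnything.pipelines.SpideranythingPipeline": 300,
    "SpiderAnything.pipelines.SpiderSuningBookPipeline": 301,
    "SpiderAnything.pipelines.PracticePipeline": 302,
}

# Pipelines run in ascending order of their value.
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(run_order)
```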

    class SpideranythingPipeline(object):
        def process_item(self, item, spider):
            # spider is the running spider instance and 'itcast' is the spider's name,
            # so checking spider.name tells multiple spiders apart
            if spider.name == 'itcast':
                print(item)
            return item
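
To see how the `spider.name` check plays out when several pipelines are enabled, here is a small simulation that needs no Scrapy install. The class names `ItcastPipeline` and `SuningPipeline`, the `seen_by` field, and the `run_chain` helper are all hypothetical; `run_chain` only imitates how an item is handed from one pipeline to the next in priority order.

```python
from types import SimpleNamespace


class ItcastPipeline:
    def process_item(self, item, spider):
        # Only act on items that came from the 'itcast' spider
        if spider.name == "itcast":
            item["seen_by"].append("ItcastPipeline")
        return item  # always return the item so the next pipeline receives it


class SuningPipeline:
    def process_item(self, item, spider):
        # Only act on items that came from the 'suning' spider
        if spider.name == "suning":
            item["seen_by"].append("SuningPipeline")
        return item


def run_chain(pipelines, item, spider):
    """Pass the item through every pipeline, lowest priority number first."""
    for _, pipeline in sorted(pipelines, key=lambda pair: pair[0]):
        item = pipeline.process_item(item, spider)
    return item


pipelines = [(301, SuningPipeline()), (300, ItcastPipeline())]
spider = SimpleNamespace(name="itcast")  # stand-in for a real spider instance
result = run_chain(pipelines, {"title": "demo", "seen_by": []}, spider)
print(result["seen_by"])
```

Every pipeline sees every item, so each one has to decide for itself whether the item is its concern and pass the item along either way.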

Pipeline methods
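
A pipeline can define three methods used in these notes: `open_spider` is called once when the spider is opened, `process_item` is called for every item, and `close_spider` is called once when the spider closes. The sketch below records that call order by hand with a stand-in spider object; `LifecyclePipeline` is a hypothetical name and the manual calls only imitate what Scrapy does.

```python
from types import SimpleNamespace


class LifecyclePipeline:
    def __init__(self):
        self.calls = []  # records which hook ran, in order

    def open_spider(self, spider):
        self.calls.append("open_spider")

    def process_item(self, item, spider):
        self.calls.append("process_item")
        return item

    def close_spider(self, spider):
        self.calls.append("close_spider")


spider = SimpleNamespace(name="demo")  # stand-in for a real spider instance
pipeline = LifecyclePipeline()

pipeline.open_spider(spider)            # once, at start-up
for item in ({"n": 1}, {"n": 2}):
    pipeline.process_item(item, spider)  # once per item
pipeline.close_spider(spider)           # once, at shutdown

print(pipeline.calls)
```

This is why connections are opened in `open_spider` and released in `close_spider`: both run exactly once, however many items pass through.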

MySQL

    import pymysql


    class SpiderSuningBookPipeline(object):
        def process_item(self, item, spider):
            # collection.insert(dict(item))
            # Pass the values as query parameters so quotes in scraped text cannot break the statement
            sql = """insert into book(title,author,download_text,new) values(%s,%s,%s,%s)"""
            params = (item['title'], item['author'], item['download_text'], item['new'])
            print(sql, params)
            self.cursor.execute(sql, params)
            return item

        def open_spider(self, spider):
            # Connect to the database
            self.connect = pymysql.connect(host='127.0.0.1', port=3306, db='study', user='root',
                                           passwd='123456', charset='utf8', use_unicode=True)
            # Use the cursor to run insert, delete, select, and update statements
            self.cursor = self.connect.cursor()
            self.connect.autocommit(True)

        def close_spider(self, spider):
            self.cursor.close()
            self.connect.close()
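
Scraped text often contains quotes, and a value such as `O'Reilly` breaks an insert statement if it is formatted directly into the SQL string. Passing the values as query parameters avoids that. The sketch below illustrates the idea with the standard-library `sqlite3` module as a stand-in for MySQL, so it runs without a database server. Note the differences: `sqlite3` uses `?` placeholders where pymysql uses `%s`, and the two-column `book` table here is a simplified, hypothetical version of the one above.

```python
import sqlite3

# In-memory database so the sketch needs no server (stand-in for MySQL)
conn = sqlite3.connect(":memory:")
conn.execute("create table book(title text, author text)")

# A title containing a single quote, as scraped text often does
item = {"title": "O'Reilly's Guide", "author": "demo author"}

# Values travel separately from the SQL text, so the quote is harmless
sql = "insert into book(title, author) values (?, ?)"
conn.execute(sql, (item["title"], item["author"]))
conn.commit()

rows = conn.execute("select title, author from book").fetchall()
print(rows)
conn.close()
```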

MongoDB

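    from pymongo import MongoClient


    class PracticePipeline(object):
        def process_item(self, item, spider):
            ''' Receives each item yielded by the spider '''
            return item  # hand the item on to any later pipeline

        def open_spider(self, spider):
            ''' Called when the spider starts '''
            spider.hello = 'world'  # attributes can be added to the spider
            # Initialize the database connection
            client = MongoClient()
            spider.collection = client['SpiderAnything']['hr']

        def close_spider(self, spider):
            ''' Called when the spider closes '''
            pass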
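
The skeleton above opens the connection but does not yet store anything. One possible way to finish it is sketched below, assuming each item should be written as one document. `MongoStorePipeline` is a hypothetical name, and unlike the notes it keeps the collection on the pipeline instead of on the spider. The optional `collection` argument is also an assumption, added so the class can be tried with any object that offers `insert_one`; when it is left out, the pipeline connects to a local MongoDB with the same database and collection names as above.

```python
class MongoStorePipeline:
    """Hypothetical pipeline that writes each item to a MongoDB collection."""

    def __init__(self, collection=None):
        self.collection = collection  # optional pre-built collection (assumption)
        self.client = None

    def open_spider(self, spider):
        if self.collection is None:
            # Imported here so the class can be loaded without pymongo installed
            from pymongo import MongoClient
            self.client = MongoClient()  # defaults to localhost:27017
            self.collection = self.client["SpiderAnything"]["hr"]

    def process_item(self, item, spider):
        # insert_one is the current PyMongo call; the older Collection.insert
        # was removed in PyMongo 4
        self.collection.insert_one(dict(item))
        return item

    def close_spider(self, spider):
        if self.client is not None:
            self.client.close()
```

Converting with `dict(item)` keeps the stored document a plain dictionary regardless of which item class the spider yields.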

京公网安备 11010802041100号
