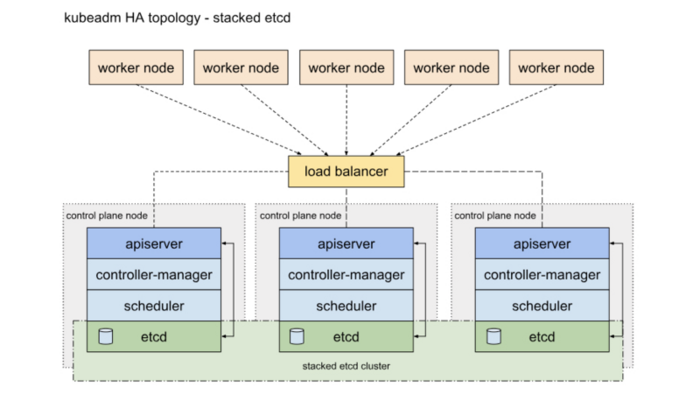

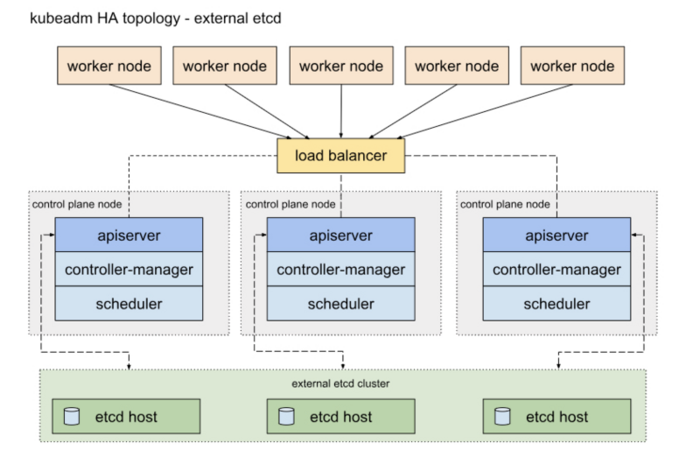

Kubernetes的高可用主要指的是控制平面的高可用,即有多套Master节点组件和Etcd组件,工作节点通过负载均衡连接到各Master。

一种是将etcd与Master节点组件混布在一起

另外一种方式是,使用独立的Etcd集群,不与Master节点混布

两种方式的相同之处在于都提供了控制平面的冗余,实现了集群高可以用,区别在于:

所需机器资源少

部署简单,利于管理

容易进行横向扩展

风险大,一台宿主机挂了,master和etcd就都少了一套,集群冗余度受到的影响比较大。

所需机器资源多(按照Etcd集群的奇数原则,这种拓扑的集群关控制平面最少就要6台宿主机了)。

部署相对复杂,要独立管理etcd集群和和master集群。

解耦了控制平面和Etcd,集群风险小健壮性强,单独挂了一台master或etcd对集群的影响很小。

服务器

master1 192.168.0.101 (master节点1)

master2 192.168.0.102 (master节点2)

master3 192.168.0.103 (master节点3)

haproxy 192.168.0.100 (haproxy节点,做3个master节点的负载均衡器)

master-1 192.168.0.104 (node节点)

| 主机 | IP | 备注 |

|---|---|---|

| master1 | 192.168.0.101 | master节点1 |

| master2 | 192.168.0.102 | master节点2 |

| master3 | 192.168.0.103 | master节点3 |

| haproxy | 192.168.0.100 | haproxy节点,做3个master节点的负载均衡器 |

| node | 192.168.0.104 | node节点 |

环境

主机:CentOS Linux release 7.7.1908 (Core)

core:3.10.0-1062.el7.x86_64

docker:19.03.7

kubeadm:1.17.3

| 资源 | 配置 |

|---|---|

| 主机 | CentOS Linux release 7.7.1908 (Core) |

| 主机core | 3.10.0-1062.el7.x86_64 |

| docker | 19.03.7 |

| kubeadm | 1.17.3 |

在所有节点上操作

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

systemctl stop firewalld

systemctl disable firewalld

swapoff -a

vim /etc/fstab

# 注释掉swap行

hostnamectl set-hostname [master|node]{X}

vim /etc/hosts

192.168.0.101 master1

192.168.0.102 master2

192.168.0.103 master3

192.168.0.104 node

cat < /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

在除了haproxy以外所有节点上操作

cat < /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce

systemctl start docker

systemctl enable docker

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet

systemctl start kubelet

在haproxy节点操作

yum install haproxy -y

cat < /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

defaults

mode tcp

log global

retries 3

timeout connect 10s

timeout client 1m

timeout server 1m

frontend kube-apiserver

bind *:6443 # 指定前端端口

mode tcp

default_backend master

backend master # 指定后端机器及端口,负载方式为轮询

balance roundrobin

server master1 192.168.0.101:6443 check maxconn 2000

server master2 192.168.0.102:6443 check maxconn 2000

server master3 192.168.0.103:6443 check maxconn 2000

EOF

systemctl enable haproxy

systemctl start haproxy

ss -tnlp | grep 6443

LISTEN 0 128 *:6443 *:* users:(("haproxy",pid=1107,fd=4))

在master1节点操作

kubeadm config print init-defaults > kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.0.101 ##宿主机IP地址

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: master1 ##当前节点在k8s集群中名称

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: "192.168.0.100:6443" ##前段haproxy负载均衡地址和端口

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers ##使用阿里的镜像地址,否则无法拉取镜像

kind: ClusterConfiguration

kubernetesVersion: v1.17.0

networking:

dnsDomain: cluster.local

podSubnet: "10.244.0.0/16" ##此处填写后期要安装网络插件flannel的默认网络地址

serviceSubnet: 10.96.0.0/12

scheduler: {}

# 通过阿里源预先拉镜像

kubeadm config images pull --config kubeadm-config.yaml

kubeadm init --cOnfig=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

# master节点用以下命令加入集群:

kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8 \

--control-plane --certificate-key 8d3f96830a1218b704cb2c24520186828ac6fe1d738dfb11199dcdb9a10579f8

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

# 工作节点用以下命令加入集群

kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8

原来的kubeadm版本,join命令只用于工作节点的加入,而新版本加入了 --contaol-plane 参数后,控制平面(master)节点也可以通过kubeadm join命令加入集群了。

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

kubectl get no

NAME STATUS ROLES AGE VERSION

master1 Ready master 4h12m v1.17.3

# 在master(2|3)操作:

kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8 \

--control-plane --certificate-key 8d3f96830a1218b704cb2c24520186828ac6fe1d738dfb11199dcdb9a10579f8

# 在node操作

kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8

kubectl get no

NAME STATUS ROLES AGE VERSION

node Ready 3h37m v1.17.3

master1 Ready master 4h12m v1.17.3

master2 Ready master 4h3m v1.17.3

master3 Ready master 3h54m v1.17.3

以便k8s集群启动有问题时排查问题

安装rsyslog服务

yum install rsyslog

配置rsyslog采集日志

vim /etc/rsyslog.conf

# 修改配置

$ModLoad imudp

$UDPServerRun 514

# 新增配置

local2.* /var/log/haproxy.log

重启rsyslog

systemctl restart rsyslog

systemctl enable rsyslog

安装nginx

yum install nginx

systemctl start nginx

systemctl enable nginx

配置nginx文件

vim /etc/nginx/nginx.conf

# 在http{}段外面添加

stream {

server {

listen 6443;

proxy_pass kube_apiserver;

}

upstream kube_apiserver {

server 192.168.0.101:6443 max_fails=3 fail_timeout=5s;

server 192.168.0.102:6443 max_fails=3 fail_timeout=5s;

server 192.168.0.103:6443 max_fails=3 fail_timeout=5s;

}

log_format proxy '$remote_addr [$time_local] '

'$protocol $status $bytes_sent $bytes_received '

'$session_time "$upstream_addr" '

'"$upstream_bytes_sent" "$upstream_bytes_received" "$upstream_connect_time"';

access_log /var/log/nginx/proxy-access.log proxy;

}

重启nginx

systemctl restart nginx

https://segmentfault.com/a/1190000018741112

京公网安备 11010802041100号 | 京ICP备19059560号-4 | PHP1.CN 第一PHP社区 版权所有

京公网安备 11010802041100号 | 京ICP备19059560号-4 | PHP1.CN 第一PHP社区 版权所有