When I was first introduced to Long Short-Term Memory networks (LSTMs), it was hard to look past their complexity. I didn’t understand why they were designed they way they were designed, just that they worked. It turns out that LSTMs can be understood, and that, despite their superficial complexity, LSTMs are actually based on a couple incredibly simple, even beautiful, insights into neural networks. This post is what I wish I had when first learning about recurrent neural networks (RNNs).

In this post, we do a few things:

- We’ll define and describe RNNs generally, focusing on the limitations of vanilla RNNs that led to the development of the LSTM.

- We’ll describe the intuitions behind the LSTM architecture, which will enable us to build up to and derive the LSTM. Along the way we will derive the GRU. We’ll also derive a pseudo LSTM, which we’ll see is better in principle and performance to the standard LSTM.

- We’ll then extend these intuitions to show how they lead directly to a few recent and exciting architectures: highway and residual networks, and Neural Turing Machines.

This is a post about theory, not implementations. For how to implement RNNs using Tensorflow, check out my posts Recurrent Neural Networks in Tensorflow I and Recurrent Neural Networks in Tensorflow II.

Contents / quick links:

- Recurrent neural networks

- What RNNs can do; choosing the time step

- The vanilla RNN

- Information morphing and vanishing and exploding sensitivity

- A mathematically sufficient condition for vanishing sensitivity

- A minimum weight initialization for avoid vanishing gradients

- Backpropagation through time and vanishing sensitivity

- Dealing with vanishing and exploding gradients

- Written memories: the intuition behind LSTMs

- Using selectivity to control and coordinate writing

- Gates as a mechanism for selectivity

- Gluing gates together to derive a prototype LSTM

- Three working models: the normalized prototype the GRU and the pseudo LSTM

- Deriving the LSTM

- The LSTM with peepholes

- An empirical comparison of the basic LSTM and the pseudo LSTM

- Extending the LSTM

Prerequisites1

This post assumes the reader is already familiar with:

- Feedforward neural networks

- Backpropagation

- Basic linear algebra

We’ll review everything else, starting with RNNs in general.

Recurrent neural networks

From one moment to the next, our brain operates as a function: it accepts inputs from our senses (external) and our thoughts (internal) and produces outputs in the form of actions (external) and new thoughts (internal). We see a bear and then think “bear”. We can model this behavior with a feedforward neural network: we can teach a feedforward neural network to think “bear” when it is shown an image of a bear.

But our brain is not a one-shot function. It runs repeatedly through time. We see a bear, then think “bear”, then think “run”.2 Importantly, the very same function that transforms the image of a bear into the thought “bear” also transforms the thought “bear” into the thought “run”. It is a recurring function, which we can model with arecurrent neural network (RNN).

An RNN is a composition of identical feedforward neural networks, one for each moment, or step in time, which we will refer to as “RNN cells”. Note that this is a much broader definition of an RNN than that usually given (the “vanilla” RNN is covered later on as a precursor to the LSTM). These cells operate on their own output, allowing them to be composed. They can also operate on external input and produce external output. Here is a diagram of a single RNN cell:

Here is a diagram of three composed RNN cells:

You can think of the recurrent outputs as a “state” that is passed to the next timestep. Thus an RNN cell accepts a prior state and an (optional) current input and produces a current state and an (optional) current output.

Here is the algebraic description of the RNN cell:

where:

s t s t − 1 o t x t f ">ff is our recurrent function.

Our brain operates in place: current neural activity takes the place of past neural activity. We can see RNNs as operating in place as well: because RNN cells are identical, they can all be viewed as the same object, with the “state” of the RNN cell being overwritten at each time step. Here is a diagram of this framing:

Most introductions to RNNs start with this “single cell loop” framing, but I think you’ll find the sequential frame more intuitive, particularly when thinking about backpropagation. When starting with the single cell loop framing, RNN’s are said to “unrolled” to obtain the sequential framing above.

What RNNs can do; choosing the time step

The RNN structure described above is incredibly general. In theory, it can do anything: if we give the neural network inside each cell at least one hidden layer, each cell becomes a universal function approximator.3 This means that an RNN cell can emulate any function, from which it follows that an RNN could, in theory, emulate our brain perfectly. Though we know that the brain can theoretically be modeled this way, it’s an entirely different matter to actually design and train an RNN to do this. We are, however, making good progress.

With this analogy of the brain in mind, all we need to do to see how we can use an RNN to handle a task is to ask how a human would handle the same task.

Consider, for example, English-to-French translation. A human reads an English sentence (“the cat sat on the mat”), pauses, and then writes out the French translation (“le chat s’assit sur le tapis”). To emulate this behavior with an RNN, the only choice we have to make (other than designing the RNN cell itself, which for now we treat as a black box) is deciding what the time steps used should be, which determines the form the inputs and outputs, or how the RNN interacts with the external world.

One option is to set the time step according to the content. That is, we might use the entire sentence as a time step, in which case our RNN is just a feed-forward network:

The final state does not matter when translating a single sentence. It might matter, however, if the sentence were part of a paragraph being translated, since it would contain information about the prior sentences. Note that the intial state is indicated above as blank, but when evaluating individual sequences, it can useful to train the initial state as a variable. It may be that the best “a sequence is starting” state representation might not be the blank zero state.

Alternatively, we might say that each word or each character is a time step. Here is an illustration of what an RNN translating “the cat sat” on a per word basis might look like:

After the first time step, the state contains an internal representation of “the”; after the second, of “the cat”; after the the third, “the cat sat”. The network does not produce any outputs at the first three time steps. It starts producing outputs when it receives a blank input, at which point it knows the input has terminated. When it is done producing outputs, it produces a blank output to signal that it’s finished.

In practice, even powerful RNN architectures like deep LSTMs might not perform well on multiple tasks (here there are two: reading, then translating). To accomodate this, we can split the network into multiple RNNs, each of which specializes in one task. In this example, we would use an “encoder” network that reads in the English (blue) and a separate “decoder” network that reads in the French (orange):

Additionally, as shown in the above diagram, the decoder network is being fed in the last true value (i.e., the target value during training, and the network’s prior choice of translated word during testing). For an example of an RNN encoder-decoder model, see Cho et al. (2014).

Notice that having two separate networks still fits the definition of a single RNN: we can define the recurring function as a split function that takes, alongside its other inputs, an input specifying which split of the function to use.

The time step does not have to be content-based; it can be an actual unit of time. For example, we might consider the time step to be one second, and enforce a reading rate of 5 characters per second. The inputs for the first three time steps would be the c, at sa and t on.

We could also do something more interesting: we can let the RNN decide when its ready to move on to the next input, and even what that input should be. This is similar to how a human might focus on certain words or phrases for an extended period of time to translate them or might double back through the source. To do this, we use the RNN’s output (an external action) to determine its next input dynamically. For example, we might have the RNN output actions like “read the last input again”, “backtrack 5 timesteps of input”, etc. Successful attention-based translation models are a play on this: they accept the entire English sequence at each time step and their RNN cell decides which parts are most relevant to the current French word they are producing.

There is nothing special about this English-to-French translation example. Whatever the human task we choose, we can build different RNN models by choosing different time steps. We can even reframe something like handwritten digit recognition, for which a one-shot function (single time step) is the typical approach, as a many-time step task. Indeed, take a look at some of the MNIST digits yourself and observe how you need to focus on some longer than others. Feedforward neural networks cannot exhibit that behavior; RNNs can.

The vanilla RNN

Now that we’ve covered the big picture, lets take a look inside the RNN cell. The most basic RNN cell is a single layer neural network, the output of which is used as both the RNN cell’s current (external) output and the RNN cell’s current state:

Note how the prior state vector is the same size as the current state vector. As discussed above, this is critical for composition of RNN cells. Here is the algebraic description of the vanilla RNN cell:

where:

ϕ ">ϕϕ is the activation function (e.g., sigmoid, tanh, ReLU),s t ∈ R n s t − 1 ∈ R n x t ∈ R m W ∈ R n × n U ∈ R m × n b ∈ R n n ">nn andm ">mm are the state and input sizes.

Even this basic RNN cell is quite powerful. Though it does not meet the criteria for universal function approximation within a single cell, it is known that a series of composed vanilla RNN cells is Turing complete and can therefore implement any algorithm. See Siegelmann and Sontag (1992). This is nice, in theory, but there is a problem in practice: training vanilla RNNs with backpropagation algorithm turns out to be quite difficult, even more so than training very deep feedforward neural networks. This difficulty is due to the problems of information morphing and vanishing and exploding sensitivity caused by repeated application of the same nonlinear function.

Information morphing and vanishing and exploding sensitivity4

Instead of the brain, consider modeling the entire world as an RNN: from each moment to the next, the state of the world is modified by a fantastically complex recurring function called time. Now consider how a small change today will affect the world in one hundred years. It could be that something as small as the flutter of a butterfly’s wing will ultimately cause a typhoon halfway around the world.5 But it could also be that our actions today ultimately do not matter. So Einstein wasn’t around to discover relativity? This would have made a difference in the 1950s, but maybe then someone else discovers relativity, so that the difference becomes smaller by the 2000s, and ultimately approaches zero by the year 2050. Finally, it could be that the importance of a small change fluctuates: perhaps Einstein’s discovery was in fact caused by a comment his wife made in response to a butteryfly that happened to flutter by, so that the butterfly exploded into a big change during the 20th century that then quickly vanished.

In the Einstein example, note that the past change is the introduction of new information (the theory of relativity), and more generally that the introduction of this new information was a direct result of our recurring function (the flow of time). Thus, we can consider information itself as a change that is morphed by the recurring function such that its effects vanish, explode or simply fluctuate.

This discussion shows that the state of the world (or an RNN) is constantly changing and that the present can be either extremely sensitive or extremely insensitive to past changes: effects can compound or dissolve. These are problems, and they extend to RNNs (and feedforward neural networks) in general:

-

Information Morphing

First, if information constantly morphs, it is difficult to exploit past information properly when we need it. The best usable state of the information may have occured at some point in the past. On top of learning how to exploit the information today (if it were around in its original, usable form), we must also learn how to decode the original state from the current state, if that is even possible. This leads to difficult learning and poor results.6

It’s very easy to show that information morphing occurs in a vanilla RNN. Indeed, suppose it were possible for an RNN cell to maintain its prior state completely in the absence of external inputs. Then

F ( x ) = ϕ ( W s t − 1 + b ) ">F(x)=ϕ(Wst−1+b)F(x)=ϕ(Wst−1+b) is the identity function with respect tos t − 1 F ( x ) ">F(x)F(x) is nonlinear, so we have a contradiction. Therefore, an RNN cell inevitably morphs the state from one time step to the next. Even the trivial task of outputtings t = x t This is the root cause of what is known in some circles as the degradation problem. See, e.g., He et al. (2015). The authors of He et al. claims this is “unexpected” and “counterintuitive”, but I hope this discussion shows that the degradation problem, or information morphing, is actually quite natural (and in many cases desirable). We’ll see below that although information morphing was not among the original motivations for introducing LSTMs, the principle behind LSTMs happens to solve the problem effectively. In fact, the effectiveness of the residual networks used by He at al. (2015) is a result of the fundamental principle of LSTMs.

-

Vanishing and Exploding Gradients

Second, we train RNNs using the backpropagation algorithm. But backpropagation is a gradient-based algorithm, and vanishing and exploding “sensitivity” is just another way of saying vanishing and exploding gradients (the latter is the accepted term, but I find the former more descriptive). If the gradients explode, we can’t train our model. If they vanish, it’s difficult for us to learn long-term dependencies, since backpropagation will be too sensitive to recent distractions. This makes training difficult.

I’ll come back to the difficulty of training RNNs via backpropagation in a second, but first I’d like to give a short mathematical demonstration of how easy it is for the vanilla RNN to suffer from the vanishing gradients and what we can do to help avoid this at the start off training.

A mathematically sufficient condition for vanishing sensitivity

In this section I give a mathematical proof of a sufficient condition for vanishing sensitivity in vanilla RNNs. It is essentially the same as the proof of the similar result in Pascanu et al. (2013), but I think you will find this presentation easier to follow. The proof here also takes advantage of the mean value theorem to go one step further than Pascanu et al. and reach a slightly stronger result, effectively showing vanishing causation rather than vanishing sensitivity.7 Note that mathematical analyses of vanishing and exploding gradients date back to the early 1990s, in Bengio et al. (1994) and Hochreiter (1991) (original in German, relevant portions summarized in Hochreiter and Schmidhuber (1997)).

Let

By constrast, Pascanu et al. (2013) proved the same sufficient condition for the following result, which can easily be extended to obtain the above:

To begin, from our definition of a vanilla RNN cell, we have:

Applying the mean value theorem in several variables, we get that there exists

Now let

-

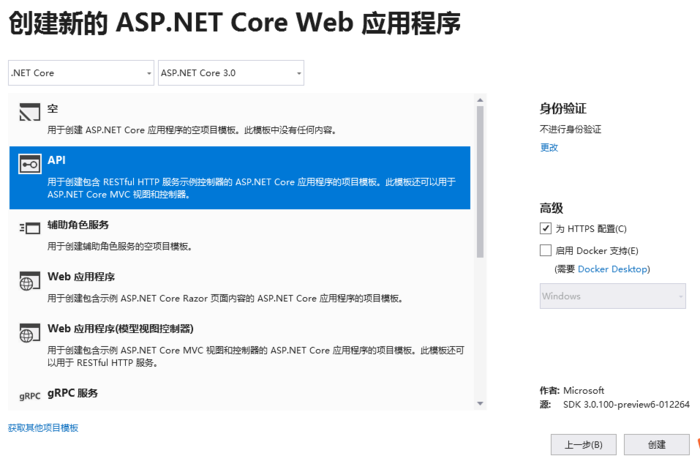

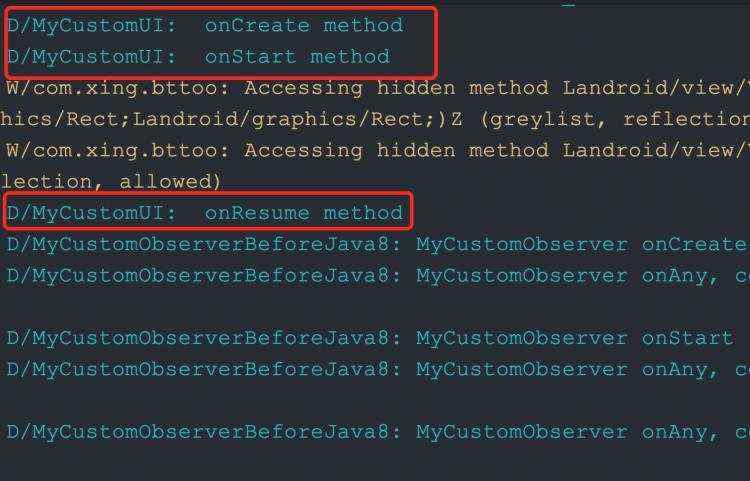

com.sun.javadoc.PackageDoc.exceptions()方法的使用及代码示例 ... [详细]Ihavetwomethodsofgeneratingmdistinctrandomnumbersintherange[0..n-1]我有两种方法在范围[0.n-1]中生 ... [详细]在最近的一个项目中,我们使用了JQuery DataTable进行数据展示,虽然使用起来非常方便,但在测试过程中发现了一个问题:当查询条件改变时,有时查询结果的数据不正确。通过FireBug调试发现,点击搜索按钮时,会发送两次Ajax请求,一次是原条件的请求,一次是新条件的请求。 ... [详细]本文介绍了如何使用Net Core 3.0和Web API进行前后端分离开发,并重点探讨了Vue.js在前端的应用。后端采用MySQL数据库和EF Core框架进行数据操作,开发环境为Windows 10和Visual Studio 2019,MySQL服务器版本为8.0.16。文章详细描述了API项目的创建过程、启动步骤以及必要的插件安装,为开发者提供了一套完整的开发指南。 ... [详细]本文深入探讨了如何将微服务升级为OAuth2资源服务器,以订单服务为例,详细介绍了在POM文件中添加 `spring-cloud-starter-oauth2` 依赖,并配置Spring Security以实现对微服务的保护。通过这一过程,不仅增强了系统的安全性,还提高了资源访问的可控性和灵活性。文章还讨论了最佳实践,包括如何配置OAuth2客户端和资源服务器,以及如何处理常见的安全问题和错误。 ... [详细]在Linux系统中,网络配置是至关重要的任务之一。本文详细解析了Firewalld和Netfilter机制,并探讨了iptables的应用。通过使用`ip addr show`命令来查看网卡IP地址(需要安装`iproute`包),当网卡未分配IP地址或处于关闭状态时,可以通过`ip link set`命令进行配置和激活。此外,文章还介绍了如何利用Firewalld和iptables实现网络流量控制和安全策略管理,为系统管理员提供了实用的操作指南。 ... [详细]本文将详细介绍 Android Jetpack 中 Lifecycle 组件的实现原理,帮助开发者更好地理解和使用 Lifecycle,避免常见的内存泄漏问题。 ... [详细]在JavaWeb开发中,文件上传是一个常见的需求。无论是通过表单还是其他方式上传文件,都必须使用POST请求。前端部分通常采用HTML表单来实现文件选择和提交功能。后端则利用Apache Commons FileUpload库来处理上传的文件,该库提供了强大的文件解析和存储能力,能够高效地处理各种文件类型。此外,为了提高系统的安全性和稳定性,还需要对上传文件的大小、格式等进行严格的校验和限制。 ... [详细]在关系型数据库中,表的设计与SQL语句的编写对性能的影响至关重要,甚至可占到90%以上。本文将重点探讨MySQL中Decimal类型的最大值及其在数据处理中的应用技巧,通过实例分析和优化建议,帮助读者深入理解并掌握这一重要知识点。 ... [详细]在Android 4.4中,Fence机制是处理缓冲区交换和同步问题的关键技术。该机制广泛应用于生产者-消费者模式中,确保了不同组件之间高效、安全的数据传输。通过深入解析Fence机制的工作原理和应用场景,本文探讨了其在系统性能优化和资源管理中的重要作用。 ... [详细]Keepalived 提供了多种强大且灵活的后端健康检查机制,包括 HTTP_GET、SSL_GET、TCP_CHECK、SMTP_CHECK 和 MISC_CHECK 等多种检测方法。这些健康检查功能确保了高可用性环境中的服务稳定性和可靠性。通过合理配置这些检查方式,可以有效监测后端服务器的状态,及时发现并处理故障,从而提高系统的整体性能和可用性。 ... [详细]在Android平台中,播放音频的采样率通常固定为44.1kHz,而录音的采样率则固定为8kHz。为了确保音频设备的正常工作,底层驱动必须预先设定这些固定的采样率。当上层应用提供的采样率与这些预设值不匹配时,需要通过重采样(resample)技术来调整采样率,以保证音频数据的正确处理和传输。本文将详细探讨FFMpeg在音频处理中的基础理论及重采样技术的应用。 ... [详细]数字图书馆近期展出了一批精选的Linux经典著作,这些书籍虽然部分较为陈旧,但依然具有重要的参考价值。如需转载相关内容,请务必注明来源:小文论坛(http://www.xiaowenbbs.com)。 ... [详细]在C#开发过程中,经常需要对系统的配置文件进行读写操作,如系统初始化参数的修改或运行时参数的更新。本文将详细介绍如何在C#中正确配置和修改app.config文件,包括其结构、常见用法以及最佳实践。此外,还将探讨exe.config文件的生成机制及其在不同环境下的应用,帮助开发者更好地管理和维护应用程序的配置信息。 ... [详细]本文探讨了如何在C#应用程序中通过选择ComboBox项从MySQL数据库中检索数据值。具体介绍了在事件处理方法 `comboBox2_SelectedIndexChanged` 中可能出现的常见错误,并提供了详细的解决方案和优化建议,以确保数据能够正确且高效地从数据库中读取并显示在界面上。此外,还讨论了连接字符串的配置、SQL查询语句的编写以及异常处理的最佳实践,帮助开发者避免常见的陷阱并提高代码的健壮性。 ... [详细]Tags | 热门标签RankList | 热门文章

- 1如何用 Python 打印上标和下标?

- 2Dependency Walker使用说明 转载

- 3kubeadm添加新master或node、unknown flag –experimentaluploadcerts

- 4Vue项目打包并部署nginx服务器的详细步骤

- 5判断集合关系(自反,反自反,对称,反对称,传递)

- 6redis专题:使用redis实现分布式锁

- 7大神你好,可以帮我P张图吗?

- 8C语言总结——结构体(struct)使用方法

- 9367有效的完全平方数(牛顿迭代法)

- 10org.mule.util.IOUtils类的使用及代码示例

- 11解决:ubuntu 里文件夹带锁

- 12深入理解Major GC, Full GC, CMS

- 13i3 处理器_旧机型安装 Linux_64位操作系统

- 14VsCode 配置 python 开发环境

- 15C#学习教程:如何处理一个密封在.NET库中的要扩展的类?分享

京公网安备 11010802041100号

京公网安备 11010802041100号